BryteFlow Ingest - Real-time data integration

BryteFlow Ingest is a real-time data replication software replicating data from various sources to destinations. It is a high-performance software that facilitates real-time change data capture from sources with zero load on the source systems. BryteFlow Ingest captures the changes and transfers them to the target system. It automates the creation of either an exact copy or a time series copy of the data source in the target. BryteFlow Ingest performs an initial full load from source and then incrementally merges changes to the destination of choice, the entire process being fully automated.

BryteFlow Ingest works with its companion softwares which are part of BryteFlow Product suite.

- BryteFlow Blend for real-time data extraction and data preparation

- BryteFlow TruData for real-time data reconciliation

- BryteFlow Ingest XL for large table extraction

Supported Database Sources

BryteFlow Ingest supports the following database sources:

- MS SQL Server

- Oracle

- MySQL

- PostgreSQL

- MariaDB

- Salesforce

- SAP S/4 HANA

- SAP ECC

- SAP HANA

- API integration with files on Amazon S3

- Any Files on Amazon S3

Supported Destinations

The supported destinations are as follows:

- S3

- Redshift

- Snowflake

- Athena

- Apache Kafka

- ADLS Gen2

- Azure Synapse SQL

- Microsoft SQL Server

- Azure SQL DB

- Oracle

- PostgreSQL

- Google Bigquery

- Databricks

Looking for a different destination?

BryteFlow does custom source/destination on customer request, please contact us directly at info@bryteflow.com.

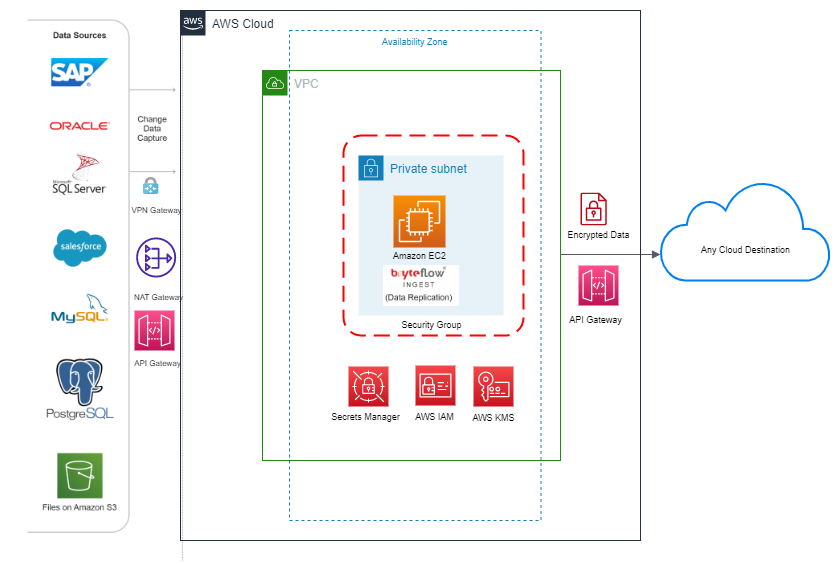

BryteFlow Ingest Architecture

BryteFlow Ingest can replicate data from any database, any API and any flat file to Amazon S3, Redshift, Snowflake, Databricks, PostgreSQL, Google Bigquery , Apache Kafka etc. through a simple point and click interface. It is an entirely self-service and automated data replication tool.

BryteFlow offers various deployment strategies to its Customers, mentioned below:

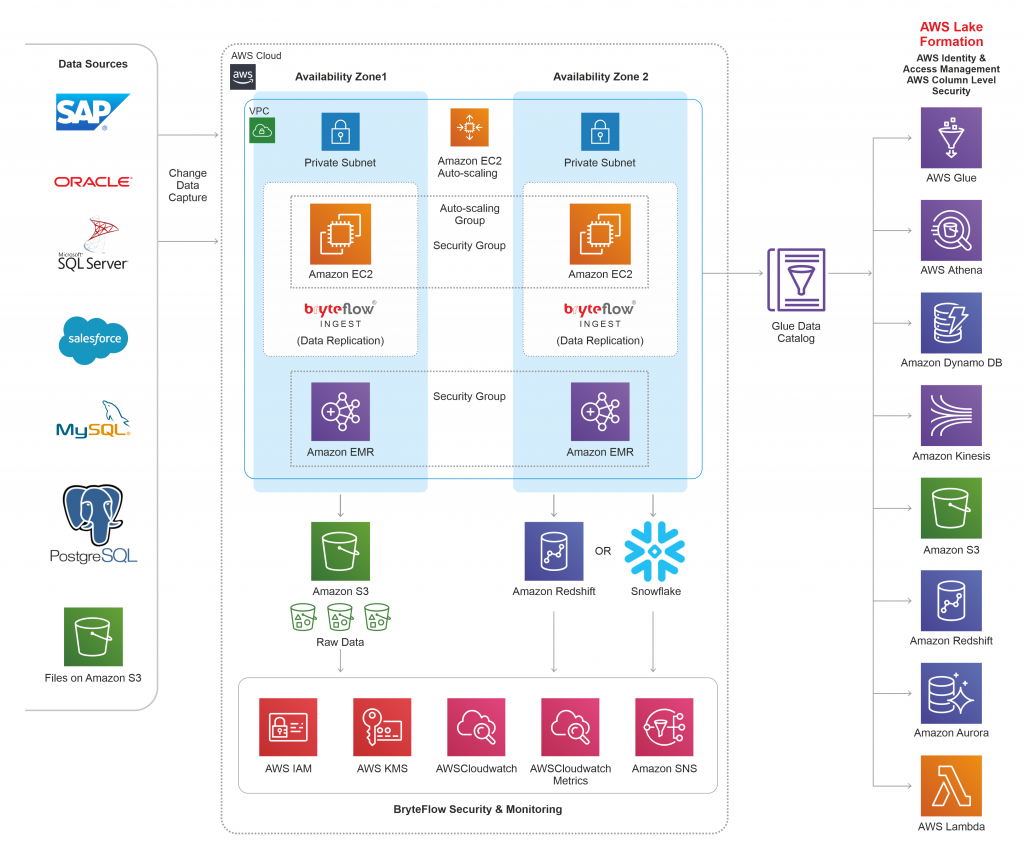

- Standard deployment on AWS Environment

- High Availability deployment in an AWS Environment

- Hybrid deployment– using On-premises and Cloud infrastructure

BryteFlow Ingest uses log-based Change Data Capture for data replication. Below is the Technical Architecture Diagram, showcasing the same for a standard setup in AWS Environment.

Below is the architecture diagram for BryteFlow Ingest in a standard deployment. Its is the reference architecture for any of the setup instructions that is provided in this user guide. For more details on setting up any optional components please contact BryteFlow support.

Estimated Deployment time : 1 hour (~Approx)

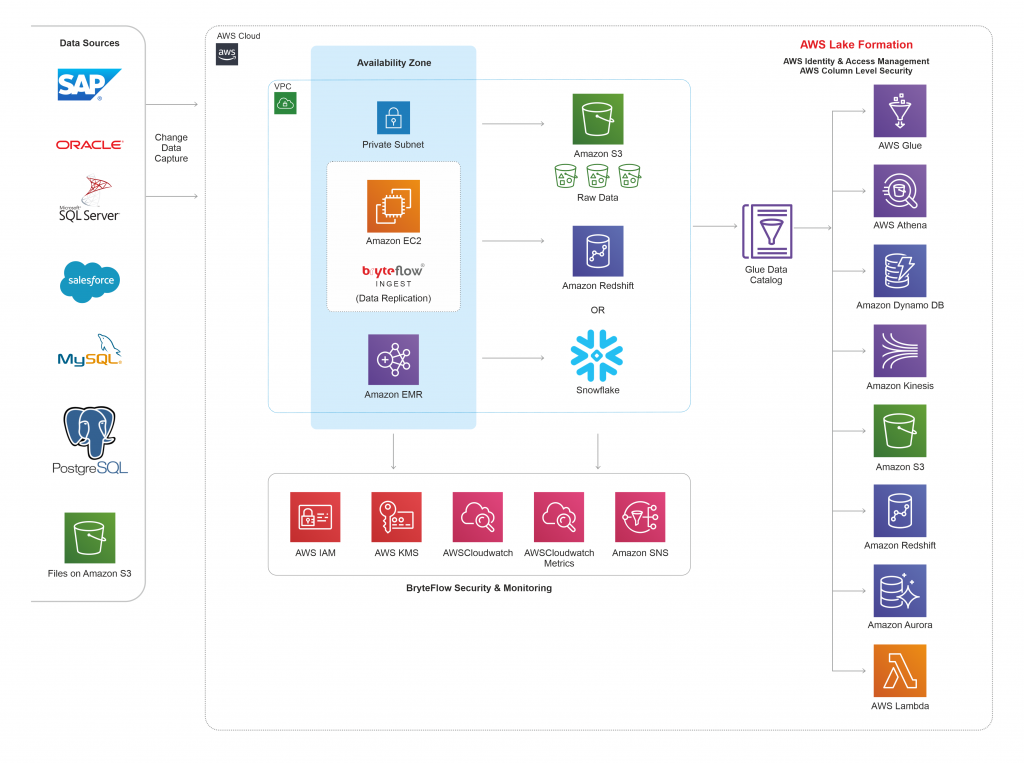

Below is the BryteFlow Ingest architecture showcasing integration with various AWS services which are optional, in a standard deployment.

The above architecture diagram describes a Standard deployment type showcasing the below features:

- AWS services running along with BryteFlow Ingest

- BryteFlow Architecture recommended for a VPC in AWS.

- Data Flow between source, AWS and destination with security and monitoring feature used.

- Security which includes IAM is in separate group and is interfaced with BryteFlow Ingest

- All supported destinations and AWS services are listed to which BryteFlow integrates

High Availability Architecture

Estimated Deployment time : 1 day (~Approx)

The high availability architecture explains the way BryteFlow is deployed in a multi-AZ setup. In case of any instance or AZ failures it can be auto scaled in another AZ, without incurring any data loss.

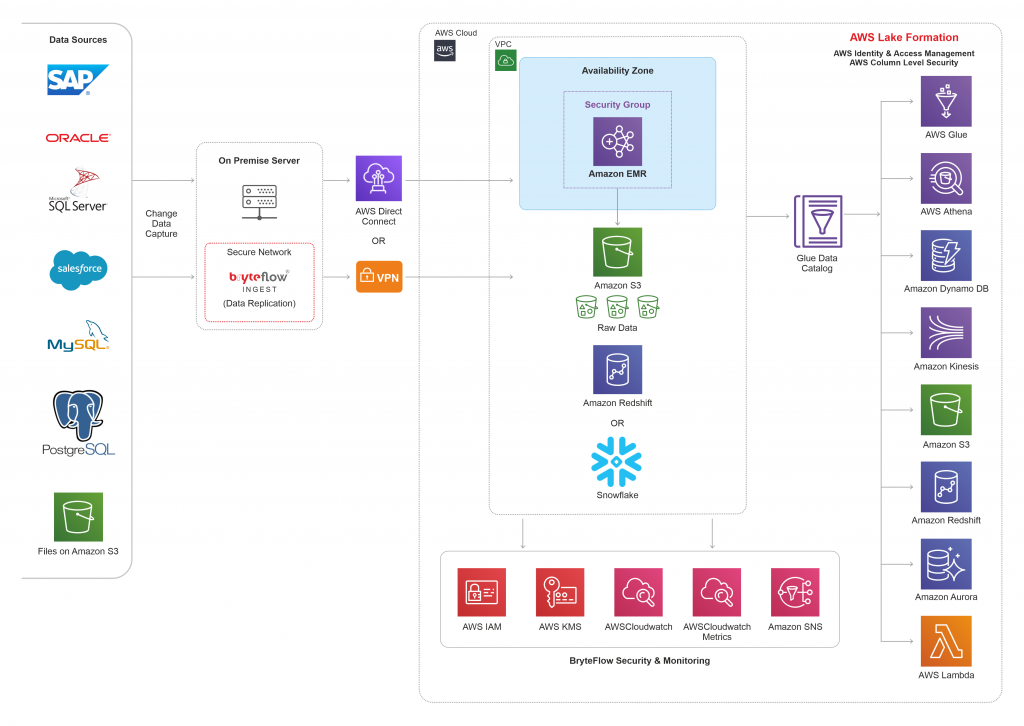

Hybrid Architecture

Estimated Deployment time : 4 hours (~Approx)

BryteFlow also offers a hybrid deployment model to its Customers, which is mix of services on-premises and in the AWS Cloud. BryteFlow Ingest can be easily setup on a Windows server which is in an on-premises environment. Whereas, all the destination end-points reside on AWS Cloud, making it a hybrid model. Its recommended to follow secure connectivity between on-premises and AWS services which can be achieved by using VPN connection or AWS Direct Connect, refer to the blog which talks about choices for hybrid cloud connectivity.

Prerequisite

Prerequisites of using Amazon Machine Image (AMI) from AWS Marketplace

Using the AMI sourced from the AWS Marketplace requires:

- Selection of BryteFlow Ingest volume

- Selection of EC2 instance type

- Ensure connectivity between the server/EC2 hosting the BryteFlow Ingest software and

- the source

- Amazon S3

- Amazon EMR

- Amazon Redshift (if needed as a destination)

- Snowflake (if needed as a destination)

- Amazon Athena (if needed as a destination)

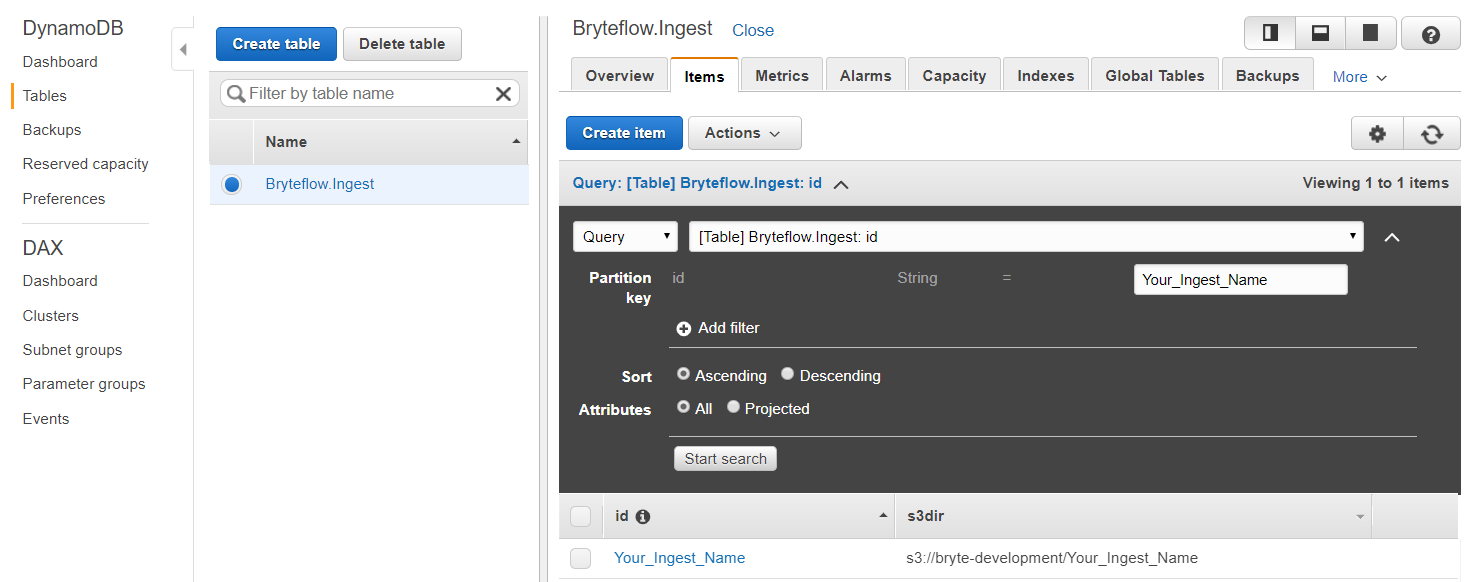

- DynamoDB (if high availability option is required)

The steps to create AWS services are mentioned in detail under the section ‘ Environment Preparation‘.

Follow below steps prior to launching BryteFlow in AWS via AMI or Custom install on an EC2:

- Create a policy giving relevant name for EC2 i.e. “BryteFlowEc2Policy”. Refer AWS guide on creating policies.

- Use the policy json provided in the below section “AWS Identity and Access Management (IAM) for BryteFlow“

- Create an IAM role “BryteFlowEc2Role”. Refer AWS guide for step-by-step instruction on creating roles .

- Attach the policy “BryteFlowEc2Policy” to the role.

- Similarly, create a Lambda policy which is required for disk checks and attach the Lambda policy json provided in below section “Recovery from faults“.

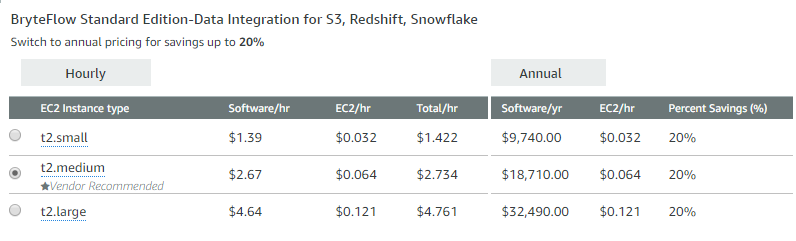

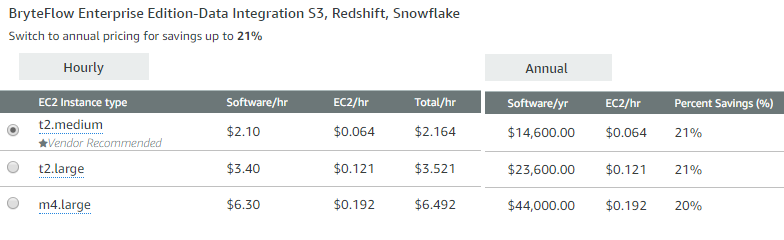

Available options with AMI are volume based, recommended options for EC2 and EMR for each of these volumes.

| Total Data Volume | EC2 Recommended | EMR Recommended |

| < 100 GB | t2.small | 1 x m4.xlarge master node 2 x c5.xlarge task nodes |

| 100GB – 300GB | t2.medium | 1 x m4.xlarge master node 2 x c5.xlarge task nodes |

| 300GB – 1TB | t2.large | 1 x m4.xlarge master node 2 x c5.xlarge task nodes |

| > 1TB | Seek expert advice from support@bryteflow.com | Seek expert advice from support@bryteflow.com |

NOTE: Evaluate the EMR configuration depending on the latency required.

These should be considered a starting point, if you have any questions please seek expert advice from support@bryteflow.com

System Requirement when not using Amazon Machine Image (AMI)

- Port 8081 should be open on the server hosting the BryteFlow Ingest software

- Google Chrome browser is required as the internet browser on the server hosting BryteFlow Ingest software

- Java version 8 or higher is required

- If using MS SQL Server as a source, please download and install the BCP utility

- Ensure connectivity between the server hosting the BryteFlow Ingest software and the source, Amazon S3, Amazon EMR, Amazon Redshift and DynamoDB (if high availability option is required)

Recommended Hardware configuration

The following describes the hardware configuration for a Windows server, assuming that there are a few sources and target combinations (3 medium ideally). It also depends on how intensively the data is being replicated from these sources, so this is a guide, but will need extra resources depending on the amount of data being replicated. The amount of disk space will also be dependent on the amount of data being replicated.

Processor: 4 core

Memory: 16GB

Disk requirements: Depend on the data being extracted, but a minimum of 300GB

Network performance: High

Prerequisites for software on the server

The following softwares are required to be installed on the server:

- The server should have 64-bit Open jdk 1.8

https://corretto.aws/downloads/latest/amazon-corretto-8-x64-windows-jre.msi

- Google Chrome

- For Oracle database as a destination ONLY:

- Please install Oracle client corresponding to the version of Oracle at destination database.

- For SQL Server sources and SQL Server destinations ONLY: Install bcp – Microsoft Utility and below drivers:

- VC++ 2017 64 bit

https://support.microsoft.com/en-in/help/2977003/the-latest- supported-visual-c-downloads - ODBC drivers 17 64 bit

https://www.microsoft.com/en-us/download/details.aspx?id= 56567 - SQL CMD version 15 64 bit

https://docs.microsoft.com/en-us/sql/tools/sqlcmd-utility? view=sql-server-ver15

Required Skills

BryteFlow is a very robust application which makes data replication to cloud very easy and smooth. It can deal with huge data volumes with ease and the process is all automated. The setup done in 3 easy steps. It doesn’t need highly technical resources, basic knowledge of the below is recommended yo deploy the software:

- AWS Cloud Fundamentals

- For RDMS endpoints, basic database skills includes writing and executing database queries.

- Able to use Microsoft windows system

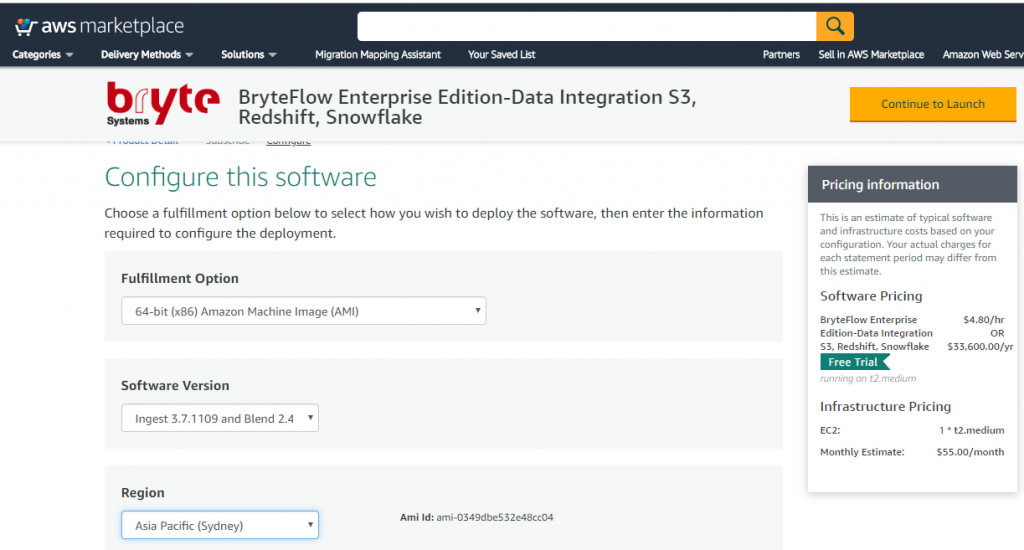

Launch BryteFlow Enterprise Edition from AWS Marketplace

Steps to launch BryteFlow from AWS Marketplace: Enterprise Edition

- Please ensure to complete the ‘Environment Preparation‘ section before proceeding to launch BryteFlow from an AMI.

- Go to the product URL https://aws.amazon.com/marketplace/pp/B079PWMJ4B

- Click ‘Continue to Subscribe’

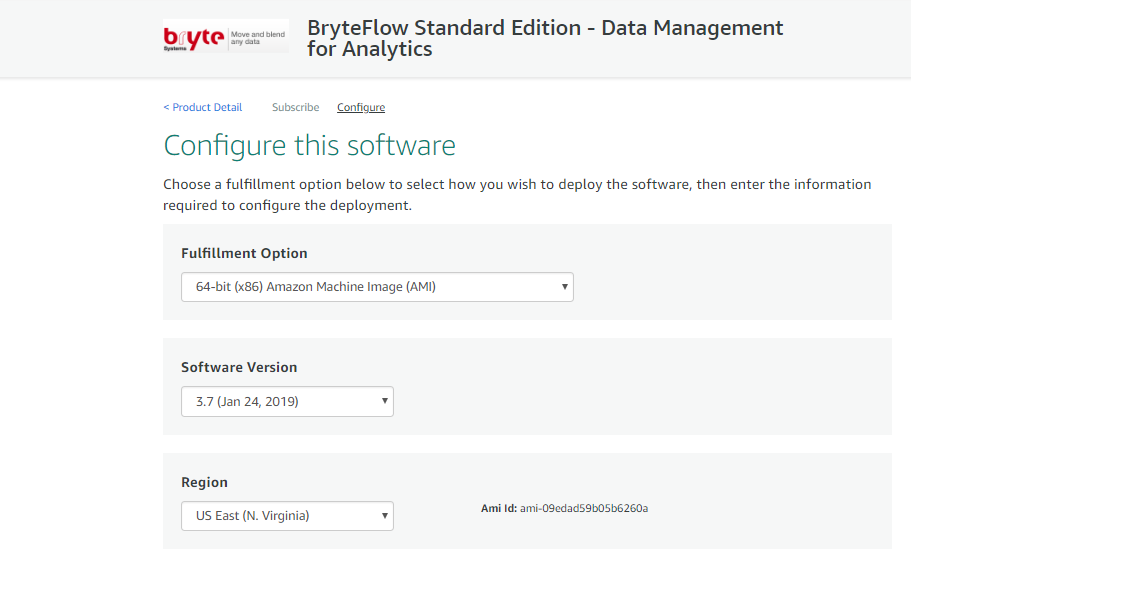

- Click ‘Continue to Configuration’. This brings up the default ‘Fulfillment Option’ with the latest software version.

- Choose the AWS Region you would like to go for or else go by the default AWS Region that is already present in the drop-down.

Supported AWS Regions:

BryteFlow Ingest is validated and supported in below AWS Regions, however it can be launched in all AWS regions.

- us-east-1

- us-east-2

- us-west-2

- ap-southeast-2

BryteFlow is available in ALL AWS Regions.

Please contact BryteFlow Support for any needed support.

- Click ‘Continue to Launch’

- Choose Action ‘Launch from Website’

- Select your EC2 instance type based on your data volume, recommendations available in the product detail page

- Choose your VPC from the dropdown or go by the default

- Please select the ‘Private Subnet’ under ‘Subnet Settings’. If none exists, its recommended to create one. Please follow detail AWS User Guide for Creating a Subnet.

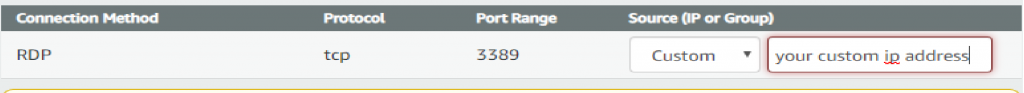

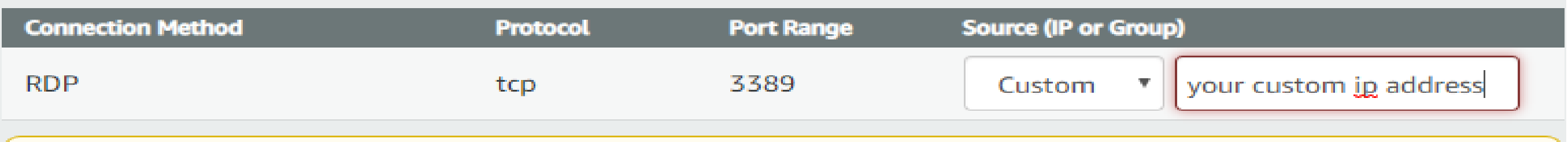

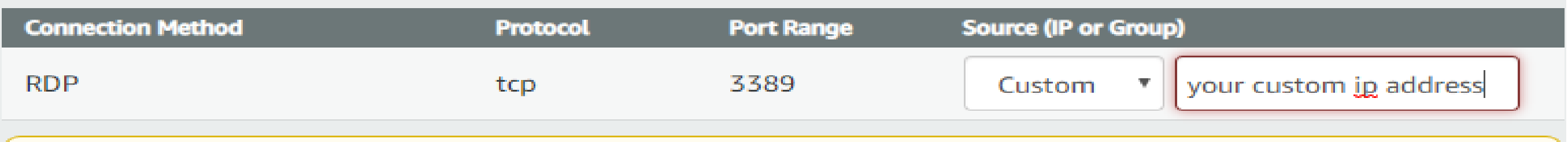

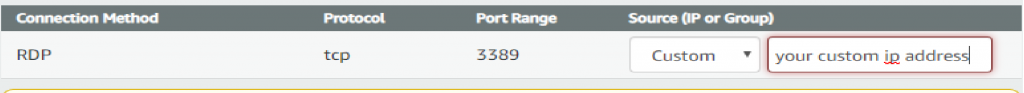

- Update the ‘Security Group Settings’ or create one based on BryteFlow recommended steps as below:

- Assign a name for the security group, for eg: BryteFlowIngest

- Enter a description of your choice

- Add inbound rule(s) to RDP the EC2 with the Custom IP address

- Add outbound rule(s) to allow the EC2 instance access the source db. DB ports will vary based on the source database , please add rules to allow the instance access to specific source database ports.

- For more details, refer to BryteFlow recommendation on Network ACLs for your VPC in the below section ‘Recommended Network ACL Rules‘

- Provide the Key Pair Settings by choosing an EC2 key pair of your own or ‘Create a key pair in EC2‘

- Click ‘Launch’ to launch the EC2 instance.

- The endpoint will be an EC2 instance running BryteFlow Ingest (as a windows service) on port 8081

Additional information regarding launching an EC2 instance can be found here

Any trouble launching or connecting to the EC2 instance, please refer to the troubleshooting guides below:

- https://docs.aws.amazon.com/AWSEC2/latest/WindowsGuide/troubleshooting-windows-instances.html#rdp-issues

- https://docs.aws.amazon.com/AWSEC2/latest/WindowsGuide/get-set-up-for-amazon-ec2.html#create-a-base-security-group

** Please note that BryteFlow Blend is a companion product to BryteFlow Ingest. In order to make the most of enterprise capabilities, first setup BryteFlow Ingest completely. Thereafter, no configuration is required in BryteFlow Blend, its all ready to go. Start with the transformations directly off AWS S3.

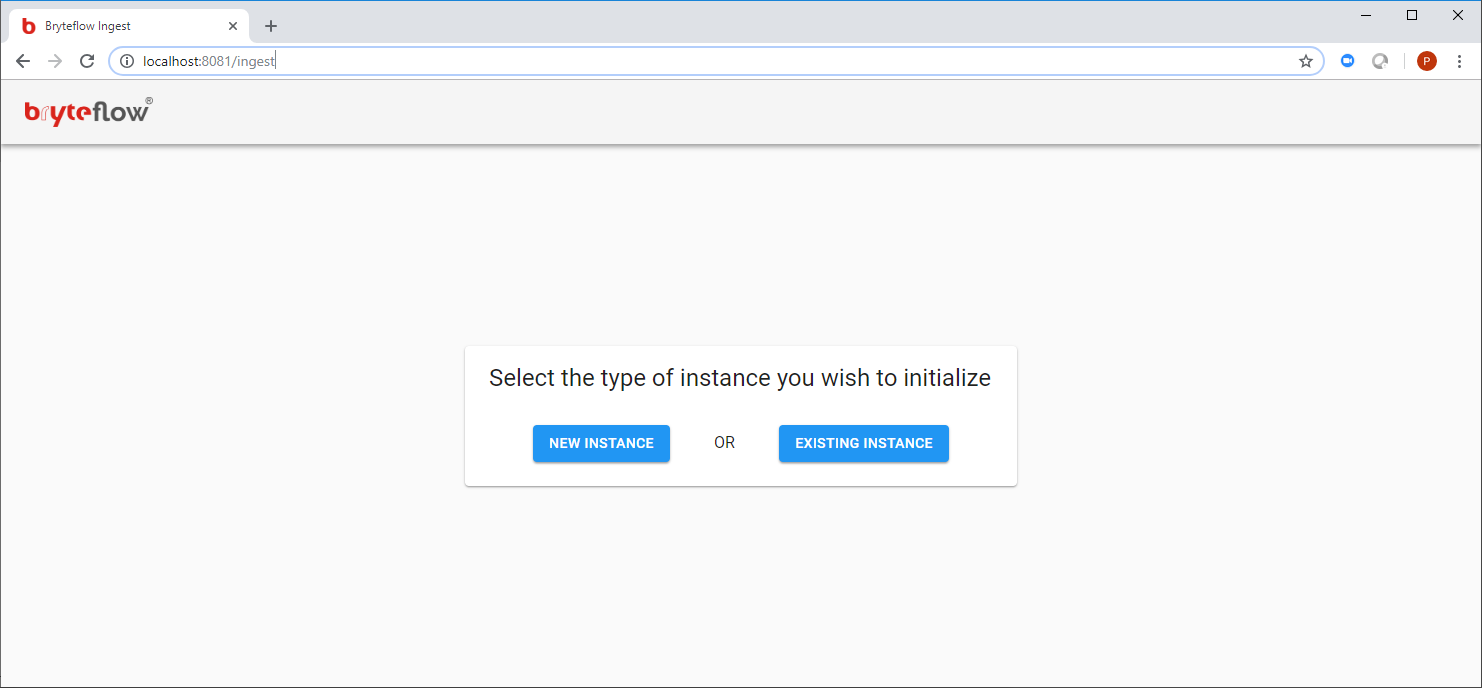

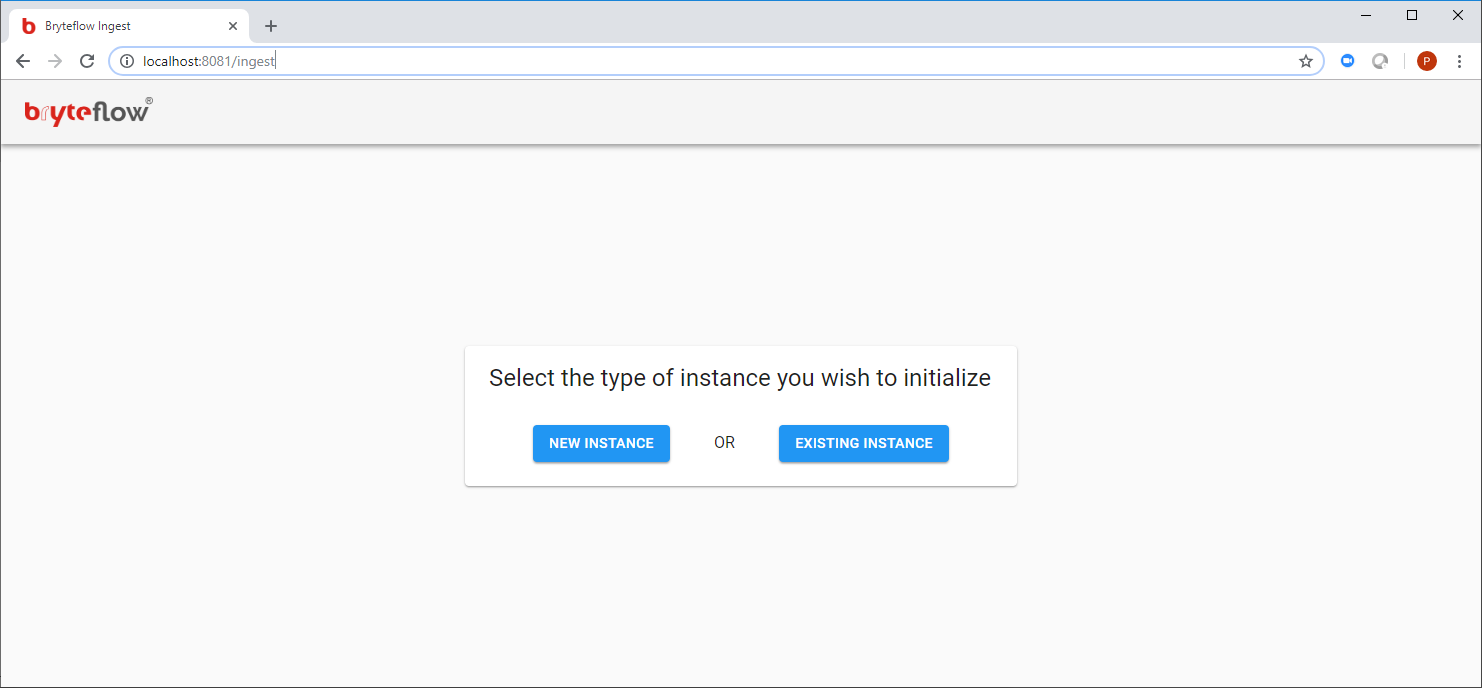

Once connected to the EC2 instance:

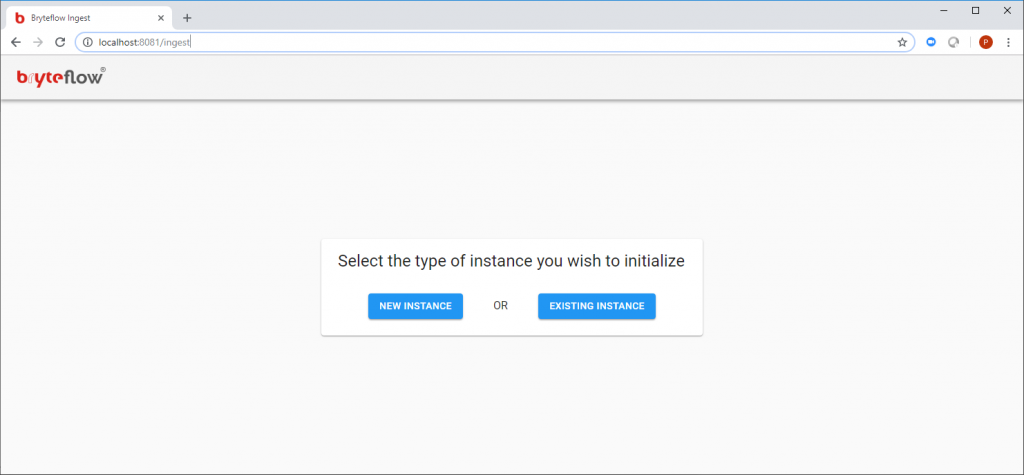

- Launch BryteFlow Ingest from the google chrome browser using bookmark ‘BryteFlow Ingest’

- Or type

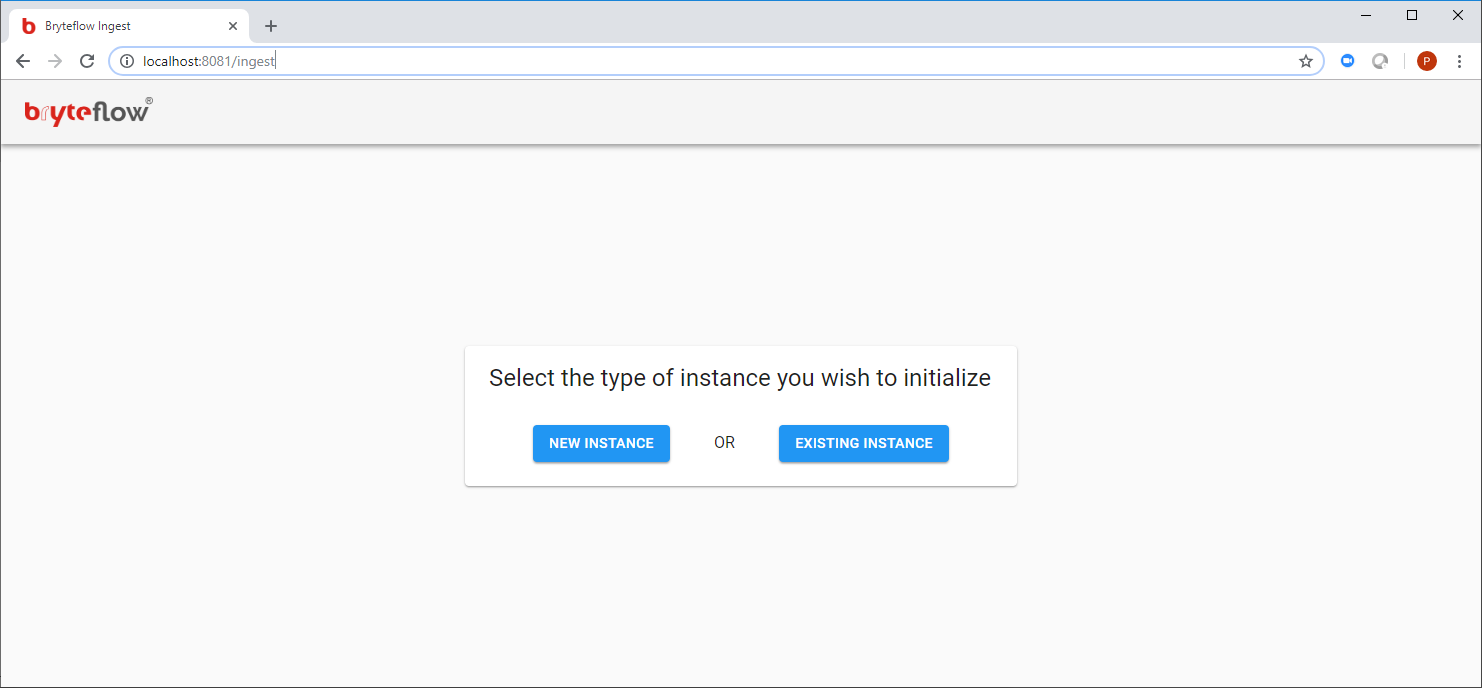

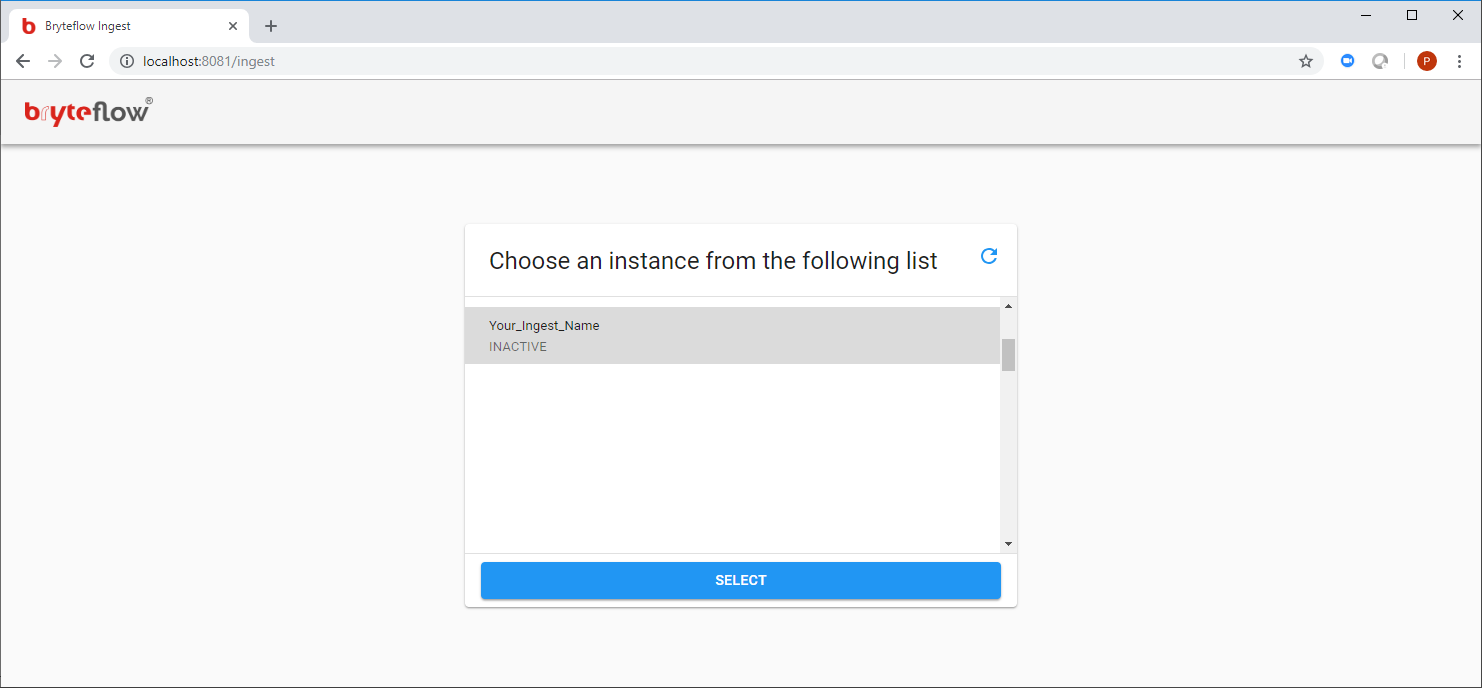

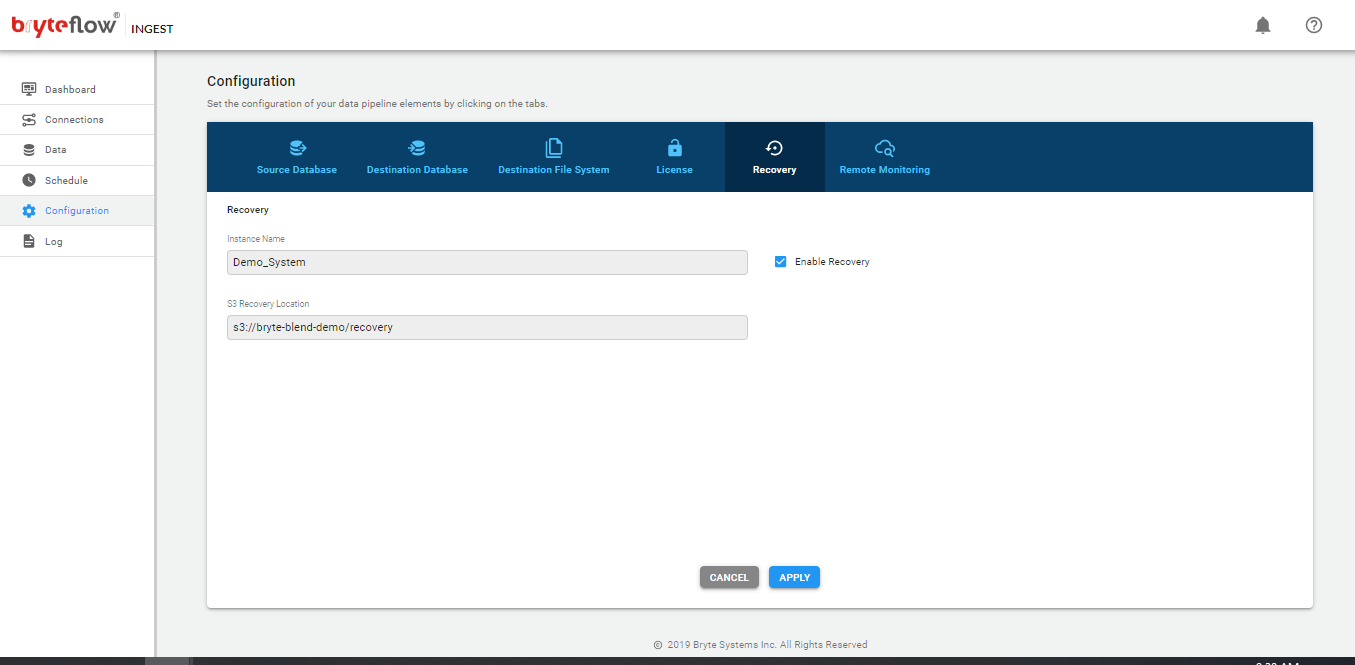

localhost:8081into the Chrome browser to open the BryteFlow Ingest web console - This will bring up a page requesting either a ‘New Instance’ or an ‘Existing Instance’

- Click on the ‘New Instance’ button and do the setup for your environment (refer to the section regarding Configuration Of BryteFlow Ingest in this document for further details)

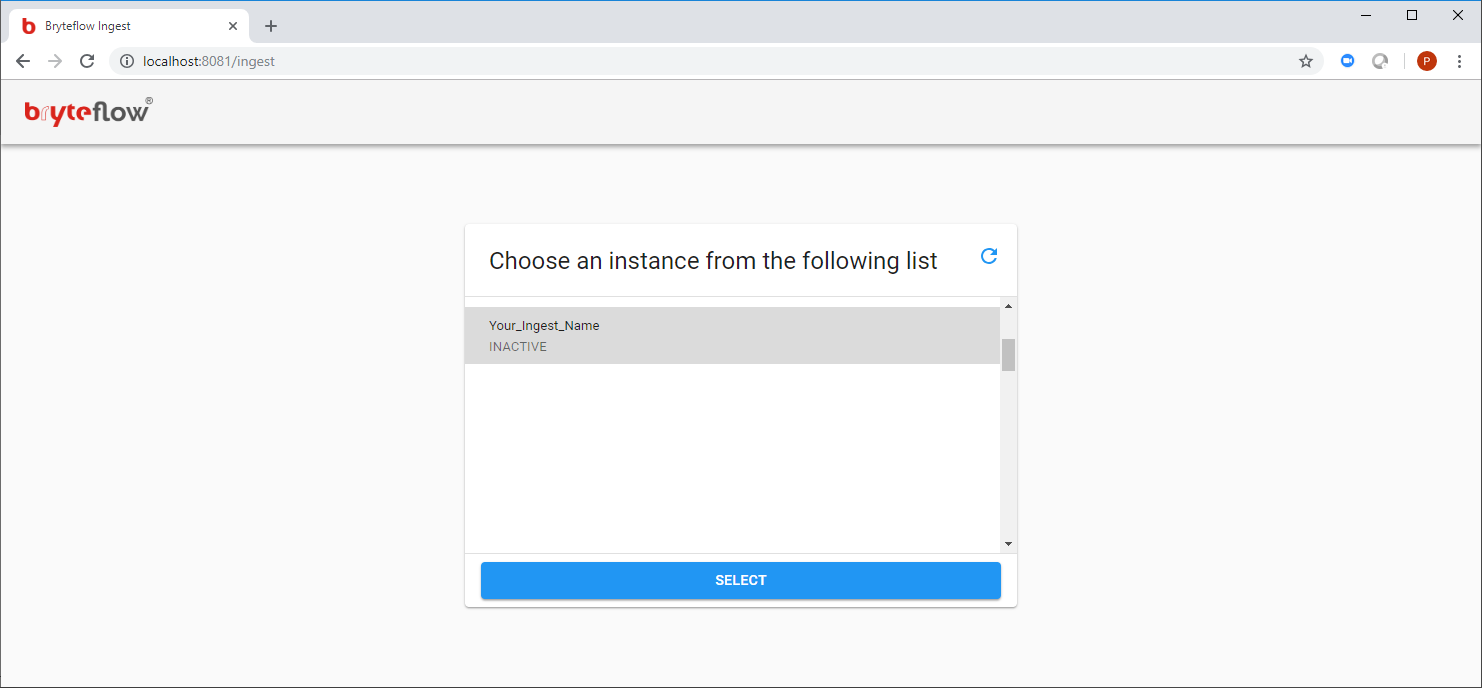

- ‘Existing Instance’ should only be clicked when recovering an instance of BryteFlow Ingest (refer to the Recovery section of this document for further details)

- Once Ingest is all setup and is replicating to the desired destination successfully

- Launch BryteFlow Blend from the Google chrome browser using bookmark ‘BryteFlow Blend’

- Or type

localhost:8082into the Google chrome browser to open the BryteFlow Blend web console - BryteFlow Blend is tied up to BryteFlow Ingest and no AWS Location configuration is required.

- This makes users ready to start their data transformations of S3.

- For details on Blend setup and Usage refer to the BryteFlow Blend User Guide: https://docs.bryteflow.com/Bryteflow-Blend-User-Guide/

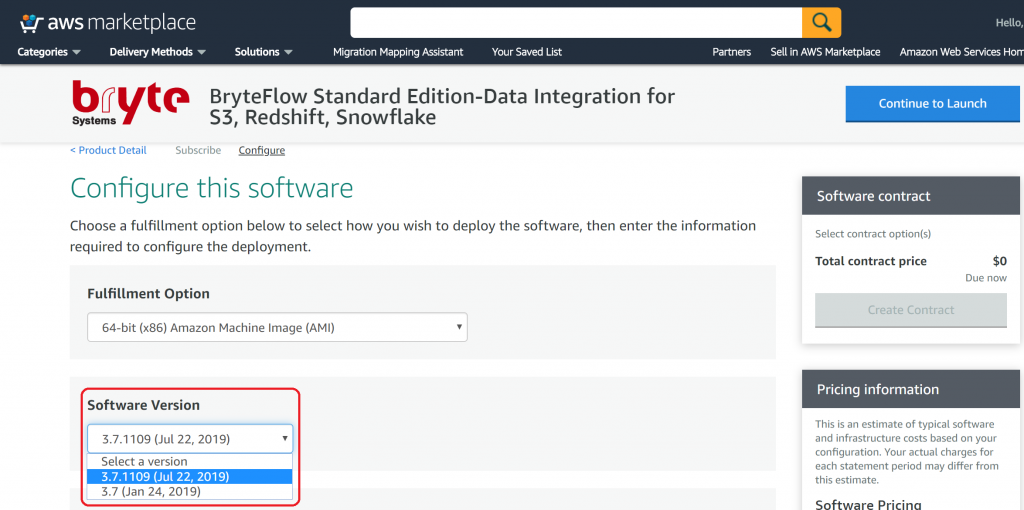

Launch BryteFlow Ingest from AWS Marketplace : Standard Edition

Steps to launch BryteFlow Ingest from AWS Marketplace: Standard Edition

- Please ensure to complete the ‘Environment Preparation‘ section before proceeding to launch BryteFlow from an AMI.

- Go to the product URL https://aws.amazon.com/marketplace/pp/B01MRLEJTK

- Click ‘Continue to Subscribe’

- Click ‘Continue to Configuration’. This brings up the default ‘Fulfillment Option’ with the latest software version.

- Choose the AWS Region you would like to go for or else go by the default AWS Region that is already present in the dropdown.

BryteFlow is available in ALL AWS Regions.

- Click ‘Continue to Launch’

- Choose Action ‘Launch from Website’

- Select your EC2 instance type based on your data volume, recommendations available in the product detail page

- Choose your VPC from the dropdown

- Please select the ‘Private Subnet’ under ‘Subnet Settings’. If none exists, its recommended to create one. Please follow detail AWS User Guide for Creating a Subnet.

- Update the ‘Security Group Settings’ or create one based on BryteFlow recommended steps as below:

- Assign a name for the security group, for eg: BryteFlowIngest

- Enter a description of your choice

- Add inbound rule(s) to RDP the EC2 with the Custom IP address

- Add outbound rule(s) to allow the EC2 instance access the source db. DB ports will vary based on the source database , please add rules to allow the instance access to specific source database ports.

- For more details, refer to BryteFlow recommendation on Network ACLs for your VPC in the below section ‘Recommended Network ACL Rules‘

- Provide the Key Pair Settings by choosing an EC2 key pair of your own or ‘Create a key pair in EC2‘

- Click ‘Launch’ to launch the EC2 instance.

- The endpoint will be an EC2 instance running BryteFlow Ingest (as a windows service) on port 8081

Additional information regarding launching an EC2 instance can be found here

Any trouble launching or connecting to the EC2 instance, please refer to the troubleshooting guides below:

- https://docs.aws.amazon.com/AWSEC2/latest/WindowsGuide/troubleshooting-windows-instances.html#rdp-issues

- https://docs.aws.amazon.com/AWSEC2/latest/WindowsGuide/get-set-up-for-amazon-ec2.html#create-a-base-security-group

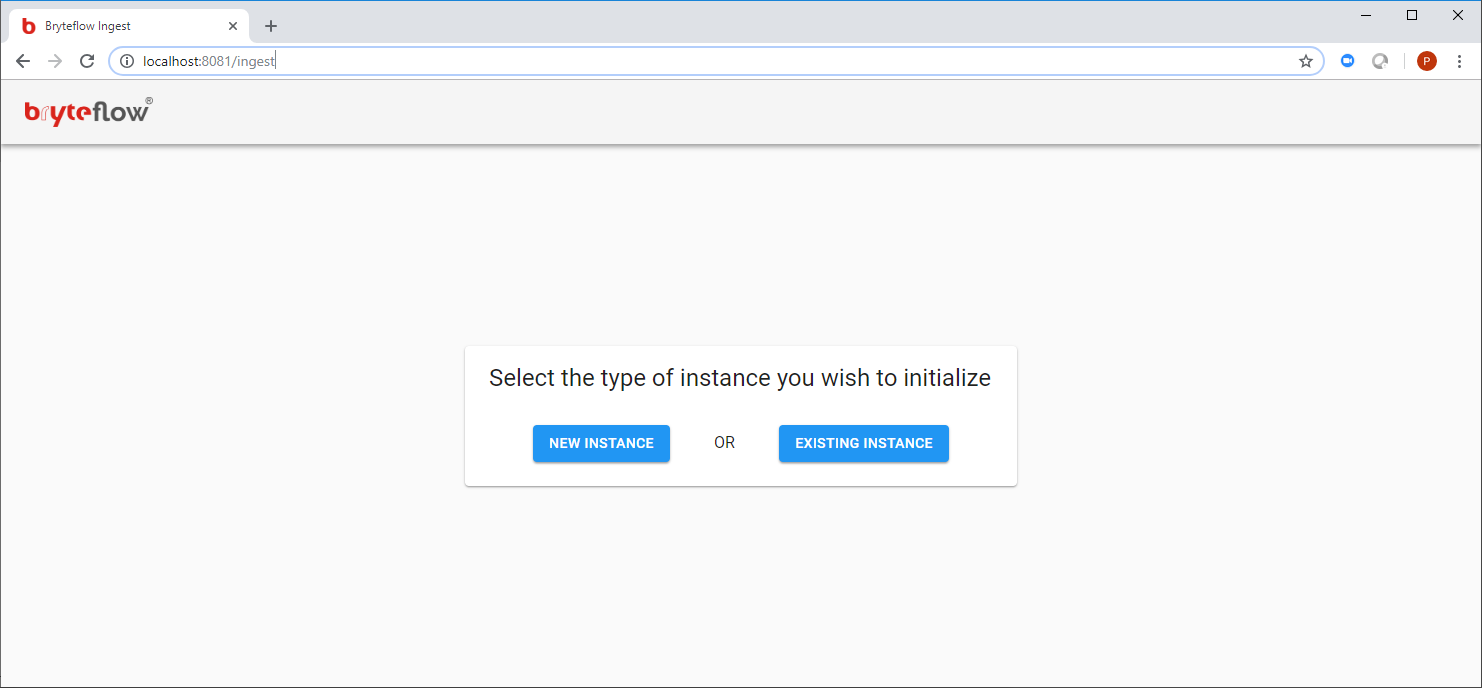

Once connected to the EC2 instance:

- Launch BryteFlow Ingest from the google chrome browser using bookmark ‘BryteFlow Ingest’

- Or type

localhost:8081into the Chrome browser to open the BryteFlow Ingest web console - This will bring up a page requesting either a ‘New Instance’ or an ‘Existing Instance’

- Click on the ‘New Instance’ button (refer to the section regarding Configuration Of BryteFlow Ingest in this document for further details)

- ‘Existing Instance’ should only be clicked when recovering an instance of BryteFlow Ingest (refer to the Recovery section of this document for further details)

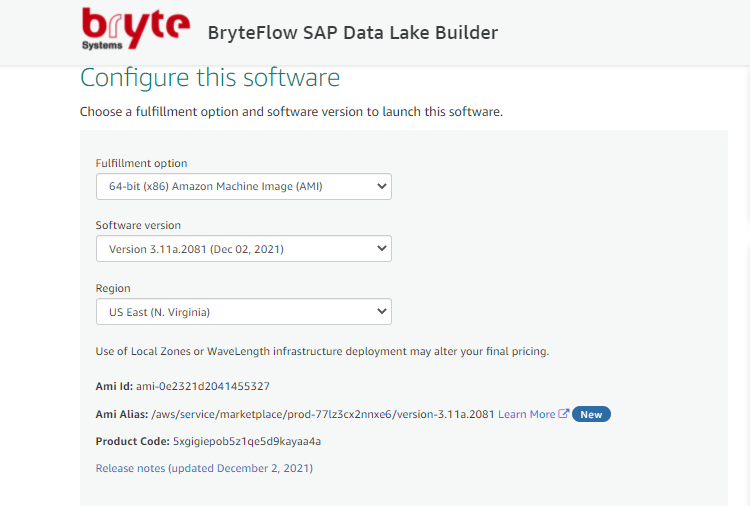

Launch BryteFlow SAP Data Lake Builder from AWS Marketplace

Steps to launch BryteFlow from AWS Marketplace: SAP Data Lake Builder

- Please ensure to complete the ‘Environment Preparation‘ section before proceeding to launch BryteFlow from an AMI.

- Go to the product URL

- Click ‘Continue to Subscribe’

- Click ‘Continue to Configuration’. This brings up the default ‘Fulfillment Option’ with the latest software version.

Choose the AWS Region you would like to go for or else go by the default AWS Region that is already present in the drop-down.

Choose the AWS Region you would like to go for or else go by the default AWS Region that is already present in the drop-down.-

- Click ‘Continue to Launch’

- Choose Action ‘Launch from Website’

- Select your EC2 instance type based on your data volume, recommendations available in the product detail page

- Choose your VPC from the dropdown

- Please select the ‘Private Subnet’ under ‘Subnet Settings’. If none exists, its recommended to create one. Please follow detail AWS User Guide for Creating a Subnet.

- Update the ‘Security Group Settings’ or create one based on BryteFlow recommended steps as below:

- Assign a name for the security group, for eg: BryteFlowIngest

- Enter a description of your choice

- Add inbound rule(s) to RDP the EC2 with the Custom IP address

- Add outbound rule(s) to allow the EC2 instance access the source db. DB ports will vary based on the source database , please add rules to allow the instance access to specific source database ports.

- For more details, refer to BryteFlow recommendation on Network ACLs for your VPC in the below section ‘Recommended Network ACL Rules‘

- Provide the Key Pair Settings by choosing an EC2 key pair of your own or ‘Create a key pair in EC2‘

- Click ‘Launch’ to launch the EC2 instance.

- The endpoint will be an EC2 instance running BryteFlow Ingest (as a windows service) on port 8081

Additional information regarding launching an EC2 instance can be found here

Any trouble launching or connecting to the EC2 instance, please refer to the troubleshooting guides below:

- https://docs.aws.amazon.com/AWSEC2/latest/WindowsGuide/troubleshooting-windows-instances.html#rdp-issues

- https://docs.aws.amazon.com/AWSEC2/latest/WindowsGuide/get-set-up-for-amazon-ec2.html#create-a-base-security-group

Once connected to the EC2 instance:

- Launch BryteFlow Ingest from the google chrome browser using bookmark ‘BryteFlow Ingest’

- Or type

localhost:8081into the Chrome browser to open the BryteFlow Ingest web console - This will bring up a page requesting either a ‘New Instance’ or an ‘Existing Instance’

- Click on the ‘New Instance’ button (refer to the section regarding Configuration Of BryteFlow Ingest in this document for further details)

- ‘Existing Instance’ should only be clicked when recovering an instance of BryteFlow Ingest (refer to the Recovery section of this document for further details)

AWS Identity and Access Management (IAM) for BryteFlow

AWS IAM roles are used to delegate access to the AWS resources. With IAM roles, you can establish trust relationships between your trusting account and other AWS trusted accounts. The trusting account owns the resource to be accessed and the trusted account contains the users who need access to the resource.

BryteFlow’s Recommendations:

- Create an IAM User for eg. ‘BryteFlow_User’. Please DO NOT use root user account to setup the application. Refer AWS guide on how to create an IAM User.

- Create an IAM role for eg. ‘BryteFlow_EC2Role’. Refer to the AWS guide on creating IAM role.

- Create an IAM Policy for eg. ‘BryteFlow_policy’ and assign custom policies provided below to the EC2 Role, for details on creating policy click here.

- Instead of defining permissions for individual BryteFlow IAM users, it’s usually more convenient to create groups that relate to job functions (administrators, developers, accounting, etc.). Next, define the relevant permissions for each group. Finally, assign IAM users to those groups. All the users in an IAM group inherit the permissions assigned to the group. That way, you can make changes for everyone in a group in just one place.

- Grant Least privilege – Its recommended to grant only minimal required permissions to the IAM role. BryteFlow User requires the basic permissions on S3, CloudWatch, Dynamodb and Redshift (if needed).

- BryteFlow needs access to S3 , EC2 , EMR, SNS and Redshift (if needed as a destination) with the below listed minimum privileges.

-

-

- Sample policy that is required for ‘BryteFlow’ EC2 Role is shared below:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "1",

"Action": [

"s3:DeleteObject",

"s3:GetObject",

"s3:ListBucket",

"s3:PutObject"

],

"Effect": "Allow",

"Resource": "arn:aws:s3:::"

},

{

"Sid": "2",

"Action": [

"ec2:AcceptVpcEndpointConnections",

"ec2:AcceptVpcPeeringConnection",

"ec2:AssociateIamInstanceProfile",

"ec2:CreateTags",

"ec2:DescribeTags",

"ec2:RebootInstances"

],

"Effect": "Allow",

"Resource": "arn:aws:ec2:"

},

{

"Sid": "3",

"Action": [

"elasticmapreduce:AddJobFlowSteps",

"elasticmapreduce:DescribeStep",

"elasticmapreduce:ListSteps",

"elasticmapreduce:RunJobFlow",

"elasticmapreduce:ListCluster",

"elasticmapreduce:DescribeCluster"

],

"Effect": "Allow",

"Resource": "arn:aws:elasticmapreduce:: : / "

},

{

"Sid": "4",

"Action": [

"sns:Publish"

],

"Effect": "Allow",

"Resource": "arn:aws:sns:: : "

},

{

"Sid": "5",

"Action": [

"redshift:ExecuteQuery",

"redshift:FetchResults",

"redshift:ListTables"

],

"Effect": "Allow",

"Resource": "arn:aws:redshift:: :cluster:mycluster*"

},

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"dynamodb:CreateTable",

"dynamodb:PutItem",

"dynamodb:Update*",

"dynamodb:Get*",

"dynamodb:Scan"

],

"Resource": "arn:aws:dynamodb:: :table/BryteflowTable"

},

{

"Sid": "6",

"Effect": "Allow",

"Action": [

"secretsmanager:GetSecretValue",

"secretsmanager:DescribeSecret",

"secretsmanager:PutSecretValue",

"secretsmanager:UpdateSecret"

],

"Resource": "arn:aws:secretsmanager:*::secret:*"

},

{

"Sid": "7",

"Effect": "Allow",

"Action": "secretsmanager:ListSecrets",

"Resource": "*"

}

]

}

- Sample policy that is required for ‘BryteFlow’ EC2 Role is shared below:

-

-

- The resources used in the policy are as follows:

- bucket_name : Data Lake bucket name, its the destination S3 bucket where the User wants the replicated data to be written to.

- ec2_instance_id : its the instance id of the EC2 launched via AMI or the existing EC2 where BryteFlow is setup.

- region : its the region in which the EMR cluster is created.

- account/account_id : its the ‘BryteFlow’ user account/account_id created for BryteFlow Ingest.

- resourceType : its the EMR resource type which should be ‘Cluster’

- resource_id : is the EMR Cluster id for BryteFlow

- sns_name : is the SNS topic name used by BryteFlow

- relative-id : is the Redshift cluster identifier used in BryteFlow

- Please NOTE: Redshift and SNS policies are optional. If Redshift is not a preferred destination for ingestion or SNS alerts are not required, while implementing please ignore the respective policy section.

- For more details on setting up IAM roles and policies refer to AWS documentation : https://docs.aws.amazon.com/IAM/latest/UserGuide/getting-set-up.html

Defining Roles for BryteFlow

Below are the various roles and permissions needed for launching and managing BryteFlow application.

| Role | Type | Permissions/Policies | Purpose |

| EC2Admin | AWS Custom Role for EC2 | List-DescribeInstanceStatus Directory Service List,Write-DescribeDirectories,CreateComputer Systems Manager List,Read,Write ListAssociations, ListInstanceAssociations, DescribeAssociation, DescribeDocument, GetDeployablePatchSnapshotForInstance, GetDocument, GetManifest, GetParameters, PutComplianceItems, PutInventory, UpdateAssociationStatus, UpdateInstanceAssociationStatus, UpdateInstanceInformation |

Create and Manage EC2 instance |

| DBAdmin | AWS Custom Role | cloudwatch:DeleteAlarms, cloudwatch:Describe*, cloudwatch:DisableAlarmActions, cloudwatch:EnableAlarmActions, cloudwatch:Get*, cloudwatch:List*, cloudwatch:PutMetricAlarm, datapipeline:ActivatePipeline, datapipeline:CreatePipeline, datapipeline:DeletePipeline, datapipeline:DescribeObjects, datapipeline:DescribePipelines, datapipeline:GetPipelineDefinition, datapipeline:ListPipelines, datapipeline:PutPipelineDefinition, datapipeline:QueryObjects, dynamodb:CreateTable, dynamodb:BatchGetItem, dynamodb:BatchWriteItem, dynamodb:ConditionCheckItem, dynamodb:PutItem, dynamodb:DescribeTable, dynamodb:DeleteItem, dynamodb:GetItem, dynamodb:Scan, dynamodb:Query, dynamodb:UpdateItem, ec2:DescribeAccountAttributes, ec2:DescribeAddresses, ec2:DescribeAvailabilityZones, ec2:DescribeInternetGateways, ec2:DescribeSecurityGroups, ec2:DescribeSubnets, ec2:DescribeVpcs, iam:ListRoles, iam:GetRole, kms:ListKeys, lambda:CreateEventSourceMapping, lambda:CreateFunction, lambda:DeleteEventSourceMapping, lambda:DeleteFunction, lambda:GetFunctionConfiguration, lambda:ListEventSourceMappings, lambda:ListFunctions, logs:DescribeLogGroups, logs:DescribeLogStreams, logs:FilterLogEvents, logs:GetLogEvents, logs:Create*, logs:PutLogEvents, logs:PutMetricFilter, rds:*, redshift:CreateCluster, redshift:DeleteCluster, redshift:ModifyCluster, redshift:RebootCluster, s3:CreateBucket, sns:CreateTopic, sns:DeleteTopic, sns:Get*, sns:List*, sns:SetTopicAttributes, sns:Subscribe, sns:Unsubscribe |

Manage DB access and priviledges |

| NetworkAdmin | Custom Role | autoscaling:Describe*, cloudfront:ListDistributions, cloudwatch:DeleteAlarms, cloudwatch:DescribeAlarms, cloudwatch:GetMetricStatistics, cloudwatch:PutMetricAlarm, directconnect:*, ec2:AcceptVpcEndpointConnections, ec2:AllocateAddress, ec2:AssignIpv6Addresses, ec2:AssignPrivateIpAddresses, ec2:AssociateAddress, ec2:AssociateDhcpOptions, ec2:AssociateRouteTable, ec2:AssociateSubnetCidrBlock, ec2:AssociateVpcCidrBlock, ec2:AttachInternetGateway, ec2:AttachNetworkInterface, ec2:AttachVpnGateway, ec2:CreateCarrierGateway, ec2:CreateCustomerGateway, ec2:CreateDefaultSubnet, ec2:CreateDefaultVpc, ec2:CreateDhcpOptions, ec2:CreateEgressOnlyInternetGateway, ec2:CreateFlowLogs, ec2:CreateInternetGateway, ec2:CreateNatGateway, ec2:CreateNetworkAcl, ec2:CreateNetworkAclEntry, ec2:CreateNetworkInterface, ec2:CreateNetworkInterfacePermission, ec2:CreatePlacementGroup, ec2:CreateRoute, ec2:CreateRouteTable, ec2:CreateSecurityGroup, ec2:CreateSubnet, ec2:CreateTags, ec2:CreateVpc, ec2:CreateVpcEndpoint, ec2:CreateVpcEndpointConnectionNotification, ec2:CreateVpcEndpointServiceConfiguration, ec2:CreateVpnConnection, ec2:CreateVpnConnectionRoute, ec2:CreateVpnGateway, ec2:DeleteCarrierGateway, ec2:DeleteEgressOnlyInternetGateway, ec2:DeleteFlowLogs, ec2:DeleteNatGateway, ec2:DeleteNetworkInterface, ec2:DeleteNetworkInterfacePermission, ec2:DeletePlacementGroup, ec2:DeleteSubnet, ec2:DeleteTags, ec2:DeleteVpc, ec2:DeleteVpcEndpointConnectionNotifications, ec2:DeleteVpcEndpointServiceConfigurations, ec2:DeleteVpcEndpoints, ec2:DeleteVpnConnection, ec2:DeleteVpnConnectionRoute, ec2:DeleteVpnGateway, ec2:DescribeAccountAttributes, ec2:DescribeAddresses, ec2:DescribeAvailabilityZones, ec2:DescribeCarrierGateways, ec2:DescribeClassicLinkInstances, ec2:DescribeCustomerGateways, ec2:DescribeDhcpOptions, ec2:DescribeEgressOnlyInternetGateways, ec2:DescribeFlowLogs, ec2:DescribeInstances, ec2:DescribeInternetGateways, ec2:DescribeKeyPairs, ec2:DescribeMovingAddresses, ec2:DescribeNatGateways, ec2:DescribeNetworkAcls, ec2:DescribeNetworkInterfaceAttribute, ec2:DescribeNetworkInterfacePermissions, ec2:DescribeNetworkInterfaces, ec2:DescribePlacementGroups, ec2:DescribePrefixLists, ec2:DescribeRouteTables, ec2:DescribeSecurityGroupReferences, ec2:DescribeSecurityGroupRules, ec2:DescribeSecurityGroups, ec2:DescribeStaleSecurityGroups, ec2:DescribeSubnets, ec2:DescribeTags, ec2:DescribeVpcAttribute, ec2:DescribeVpcClassicLink, ec2:DescribeVpcClassicLinkDnsSupport, ec2:DescribeVpcEndpointConnectionNotifications, ec2:DescribeVpcEndpointConnections, ec2:DescribeVpcEndpointServiceConfigurations, ec2:DescribeVpcEndpointServicePermissions, ec2:DescribeVpcEndpointServices, ec2:DescribeVpcEndpoints, ec2:DescribeVpcPeeringConnections, ec2:DescribeVpcs, ec2:DescribeVpnConnections, ec2:DescribeVpnGateways, ec2:DescribePublicIpv4Pools, ec2:DescribeIpv6Pools, ec2:DetachInternetGateway, ec2:DetachNetworkInterface, ec2:DetachVpnGateway, ec2:DisableVgwRoutePropagation, ec2:DisableVpcClassicLinkDnsSupport, ec2:DisassociateAddress, ec2:DisassociateRouteTable, ec2:DisassociateSubnetCidrBlock, ec2:DisassociateVpcCidrBlock, ec2:EnableVgwRoutePropagation, ec2:EnableVpcClassicLinkDnsSupport, ec2:ModifyNetworkInterfaceAttribute, ec2:ModifySecurityGroupRules, ec2:ModifySubnetAttribute, ec2:ModifyVpcAttribute, ec2:ModifyVpcEndpoint, ec2:ModifyVpcEndpointConnectionNotification, ec2:ModifyVpcEndpointServiceConfiguration, ec2:ModifyVpcEndpointServicePermissions, ec2:ModifyVpcPeeringConnectionOptions, ec2:ModifyVpcTenancy, ec2:MoveAddressToVpc, ec2:RejectVpcEndpointConnections, ec2:ReleaseAddress, ec2:ReplaceNetworkAclAssociation, ec2:ReplaceNetworkAclEntry, ec2:ReplaceRoute, ec2:ReplaceRouteTableAssociation, ec2:ResetNetworkInterfaceAttribute, ec2:RestoreAddressToClassic, ec2:UnassignIpv6Addresses, ec2:UnassignPrivateIpAddresses, ec2:UpdateSecurityGroupRuleDescriptionsEgress, ec2:UpdateSecurityGroupRuleDescriptionsIngress, elasticbeanstalk:Describe*, elasticbeanstalk:List*, elasticbeanstalk:RequestEnvironmentInfo, elasticbeanstalk:RetrieveEnvironmentInfo, elasticloadbalancing:*, logs:DescribeLogGroups, logs:DescribeLogStreams, logs:GetLogEvents, route53:*, route53domains:*, sns:CreateTopic, sns:ListSubscriptionsByTopic, sns:ListTopics, ec2:AcceptVpcPeeringConnection, ec2:AttachClassicLinkVpc, ec2:AuthorizeSecurityGroupEgress, ec2:AuthorizeSecurityGroupIngress, ec2:CreateVpcPeeringConnection, ec2:DeleteCustomerGateway, ec2:DeleteDhcpOptions, ec2:DeleteInternetGateway, ec2:DeleteNetworkAcl, ec2:DeleteNetworkAclEntry, ec2:DeleteRoute, ec2:DeleteRouteTable, ec2:DeleteSecurityGroup, ec2:DeleteVolume, ec2:DeleteVpcPeeringConnection, ec2:DetachClassicLinkVpc, ec2:DisableVpcClassicLink, ec2:EnableVpcClassicLink, ec2:GetConsoleScreenshot, ec2:RejectVpcPeeringConnection, ec2:RevokeSecurityGroupEgress, ec2:RevokeSecurityGroupIngress, ec2:CreateLocalGatewayRoute, ec2:CreateLocalGatewayRouteTableVpcAssociation, ec2:DeleteLocalGatewayRoute, ec2:DeleteLocalGatewayRouteTableVpcAssociation, ec2:DescribeLocalGatewayRouteTableVirtualInterfaceGroupAssociations, ec2:DescribeLocalGatewayRouteTableVpcAssociations, ec2:DescribeLocalGatewayRouteTables, ec2:DescribeLocalGatewayVirtualInterfaceGroups, ec2:DescribeLocalGatewayVirtualInterfaces, ec2:DescribeLocalGateways, ec2:SearchLocalGatewayRoutes, s3:GetBucketLocation, s3:GetBucketWebsite, s3:ListBucket, iam:GetRole, iam:ListRoles, iam:PassRole, ec2:AcceptTransitGatewayVpcAttachment, ec2:AssociateTransitGatewayRouteTable, ec2:CreateTransitGateway, ec2:CreateTransitGatewayRoute, ec2:CreateTransitGatewayRouteTable, ec2:CreateTransitGatewayVpcAttachment, ec2:DeleteTransitGateway, ec2:DeleteTransitGatewayRoute, ec2:DeleteTransitGatewayRouteTable, ec2:DeleteTransitGatewayVpcAttachment, ec2:DescribeTransitGatewayAttachments, ec2:DescribeTransitGatewayRouteTables, ec2:DescribeTransitGatewayVpcAttachments, ec2:DescribeTransitGateways, ec2:DisableTransitGatewayRouteTablePropagation, ec2:DisassociateTransitGatewayRouteTable, ec2:EnableTransitGatewayRouteTablePropagation, ec2:ExportTransitGatewayRoutes, ec2:GetTransitGatewayAttachmentPropagations, ec2:GetTransitGatewayRouteTableAssociations, ec2:GetTransitGatewayRouteTablePropagations, ec2:ModifyTransitGateway, ec2:ModifyTransitGatewayVpcAttachment, ec2:RejectTransitGatewayVpcAttachment, ec2:ReplaceTransitGatewayRoute, ec2:SearchTransitGatewayRoutes |

Manage Network access and firewall settings |

| BryteFlowAdmin | Custom Role | elasticmapreduce:ListClusters, glue:GetDatabase, athena:StartQueryExecution, athena:ListDatabases, glue:GetPartitions, glue:UpdateTable, athena:GetQueryResults, athena:GetDatabase, glue:GetTable, athena:StartQueryExecution, glue:CreateTable, glue:GetPartitions, elasticmapreduce:ListSteps, athena:GetQueryResults, s3:ListBucket, elasticmapreduce:DescribeCluster, glue:GetTable, glue:GetDatabase, s3:PutObject, s3:GetObject, elasticmapreduce:DescribeStep, athena:StopQueryExecution, athena:GetQueryExecution, s3:DeleteObject, elasticmapreduce:AddJobFlowSteps, s3:GetBucketLocation, s3:PutObjectAcl, secretsmanager:GetSecretValue, secretsmanager:DescribeSecret, secretsmanager:PutSecretValue, secretsmanager:UpdateSecret |

Able to manage BryteFlow configurations |

| Amazon S3 | Resource Based Policy | s3:PutObject, s3:GetObject, s3:DeleteObject, s3:GetBucketLocation, s3:PutObjectAclResource: arn:aws:s3:::<bucket-name>, arn:aws:s3:::<bucket-name>/* |

To manage bucket level permissions, resource-based policy for S3 should be applied to restrict the bucket level access. The policy is attached to the bucket, but the policy controls access to both the bucket and the objects in it. |

| Amazon Ec2 | Resource Based Policy | ec2:AcceptVpcEndpointConnections, ec2:AcceptVpcPeeringConnection, ec2:AssociateIamInstanceProfile, ec2:CreateTags, ec2:DescribeTags, ec2:RebootInstancesResource: arn:aws:ec2:<ec2_instance_id> |

To manage instance level permissions, resource-based policy for EC2 should be applied to restrict the access for the EC2 instance. |

| AWS Marketplace | AWS managed policy | aws-marketplace:ViewSubscriptions, aws-marketplace:Subscribe, aws-marketplace:Unsubscribe, aws-marketplace:CreatePrivateMarketplaceRequests, aws-marketplace:ListPrivateMarketplaceRequests, aws-marketplace:DescribePrivateMarketplaceRequests |

For a user to launch BryteFlow from AWS Marketplace should have ‘AWSMarketplaceManageSubscriptions’ policy attached. |

Environment Preparation

Below is the guide provided to prepare an environment for BryteFlow in AWS :

- Create an AWS account: To prepare the environment for BryteFlow in AWS its required for the User to have an AWS account. If you already have an AWS account, skip to the next step. If you don’t have an AWS account to create one refer to the AWS Guide.

-

Create an IAM User: Its recommended to create a separate user for managing all AWS services DO NOT use root user for any task. Refer to AWS guide to create an IAM admin user.

- Create and assign policy to the User: Use the AWS Management Console to create a customer managed policy and then attach that policy to the IAM user as per their role. The policy created allows an IAM test user to sign in directly to the AWS Management Console with assigned permissions.

- Signing in to AWS:

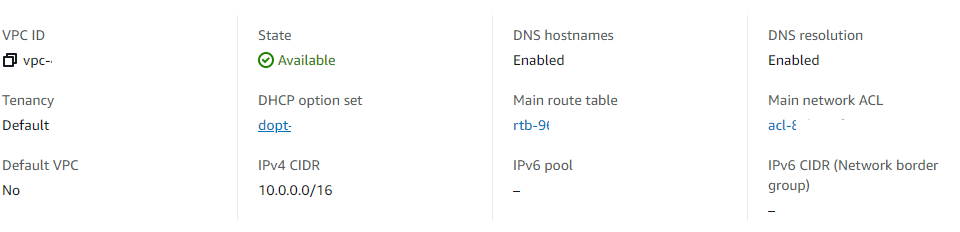

- Create a VPC: A virtual private cloud (VPC) is a virtual network dedicated to your AWS account. It is logically isolated from other virtual networks in the AWS Cloud. You can launch your BryteFlow application and all related AWS resources, such as Amazon EC2 instances, into your VPC. For details on creating a VPC refer to AWS guide.

- Creating a Private Subnet in Your VPC : Considering that BryteFlow Ingest needs to be setup in Customers VPC, its recommended to create a new Private subnet within the VPC for BryteFlow. Please follow detail AWS User Guide for Creating a Subnet.

- Creating Security Group: A security group acts as a virtual firewall for your instance to control inbound and outbound traffic. Security groups acts at the instance level, not the subnet level. Therefore, each instance in a subnet in your VPC can be assigned to a different set of security groups. If you don’t specify a particular group at launch time, the instance is automatically assigned to the default security group for the VPC which is highly not-recommended. For each security group, you add rules that control the inbound traffic to instances, and a separate set of rules that control the outbound traffic. For more details, refer to AWS guide for security Groups.

-

Security Group Rules: You can add or remove rules for a security group which is authorizing or revoking inbound or outbound access. A rule applies either to inbound traffic (ingress) or outbound traffic (egress). You can grant access to a specific CIDR range, or to another security group in your VPC or in a peer VPC (requires a VPC peering connection).

-

Creating IAM Role: BryteFlow uses IAM role assigned to the Ec2 where the application is hosted. The Ec2 role needs to have all the required policies attached. To create an IAM role for BryteFlow refer to the AWS guide. Assign the required policies to the newly created IAM role.

- Assigning Role to Users or Group: The IAM role needs to be assigned to an AWS Directory Service user or group. The role must have a trust relationship with AWS Directory Service. Refer to the AWS guide to assign users or groups to an existing IAM role.

- Creating Access Key ID and Secret Access Key, BryteFlow uses access key id and secret access key to connect to AWS services from an on-premises server. Its recommended to have a set of access keys for the BryteFlow User account. Please follow the below steps from Admin User account to create access keys:

- Sign in to the AWS Management Console and open the IAM console at https://console.aws.amazon.com/iam/.

- In the navigation pane, choose Users.

- Choose the name of the ‘separate BryteFlow‘ user whose access keys you want to manage, and then choose the Security credentials tab.

- In the Access keys section, to create an access key, choose Create access key. Then choose Download .csv file to save the access key ID and secret access key to a CSV file on your computer. Store the file in a secure location. You will not have access to the secret access key again after this dialog box closes. After you download the CSV file, choose Close. When you create an access key, the key pair is active by default, and you can use the pair right away.

For more information on secret keys refer to AWS documentation here.

For security reasons, when using access keys its recommended to rotate all keys after certain time, say at a period of 90 days. More details mentioned in the section ‘Managing Access Keys‘

5. Creating Auto-Scaling Group, When BryteFlow Ingest needs to be deployed in a HA environment, its recommended to have your EC2 alongwith an Auto Scaling Group.

Please follow the steps here to launch the same via AWS console. When launching an Auto-Scaling group via the console below are the recommended parameters that needs to be specified :

-

- When choosing an Amazon Machine Image in step 3, please select BryteFlow Standard or Enterprise Edition AMI option based on your requirement.

- In ‘Configure Instance Details’ choose the instance type referring to BryteFlow’s recommendations under the ‘Prerequisite’ section

- For ‘Number of Instances’, its recommended to have minimum of 2 for HA type of deployment.

- Under ‘Create Launch Configuration’ select the IAM Role as ‘BryteFlowEc2Role’

- Add Storage as per the recommendations under ‘Additional AWS Services’

- Choose the ‘Security Group’ created for BryteFlow in the previous steps.

- Choose a key pair to ‘Create Launch Configuration’

- Follow the remaining steps as mentioned here.

Creating An EC2 System

Please refer AWS documentation on how to create EC2 System.

Recommended Network ACL Rules for EC2

The following table shows the rules we recommend for your EC2. They block all traffic except that which is explicitly required.

The EC2 security group should have the required inbound and outbound rules as per below:

| Inbound | |||||

| Rule # | Source IP | Protocol | Port | Allow/Deny | Comments |

| 1 | Custom IP which requires access to BryteFlow Application | TCP | 80 | ALLOW | Allows inbound HTTP traffic from only known/ custom IPv4 address. |

| 2 | Public IPv4 address range of your home network | TCP | 22 | ALLOW | Allows inbound SSH traffic from your home network (over the Internet gateway). |

| 3 | Public IPv4 address range of your home network | TCP | 3389 | ALLOW | Allows inbound RDP traffic from your home network (over the Internet gateway). |

| 4 | 0.0.0.0/0 | all | all | DENY | Denies all inbound IPv4 traffic not already handled by a preceding rule (not modifiable). |

| Outbound | |||||

| Rule # | Dest IP | Protocol | Port | Allow/Deny | Comments |

| 1 | Source DB Host IP address | TCP | Custom port( port specific to source database ports) | ALLOW | Allows connections to Source database. |

| 2 | Redshift Cluster Host IP address | TCP | 5439 ( port specific to destination database i.e. Redshift ) | ALLOW | Allows connection to destination database, if Redshift is a preferred destination database. (not required if AWS S3, is a preferred destination) |

| 3 | 0.0.0.0/0 | all | all | DENY | Denies all outbound IPv4 traffic not already handled by a preceding rule (not modifiable). |

To open ports on Amazon Console

Please perform the steps to allow the inbound traffic to your Amazon instance, mentioned in the following link:

To open ports On Windows Server

Please perform the steps to allow the inbound traffic to your server, mentioned in the following link:

VPC Details:

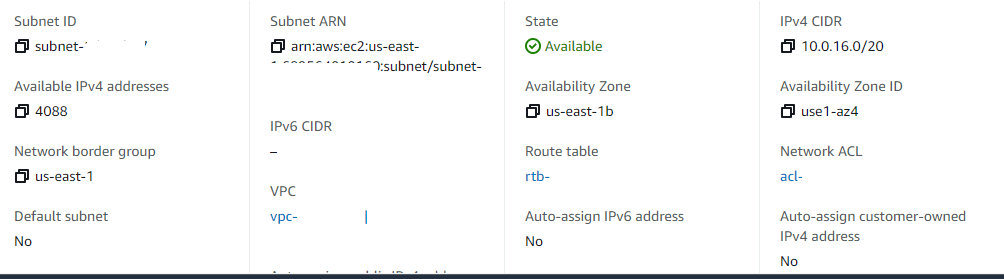

Related Subnet details:

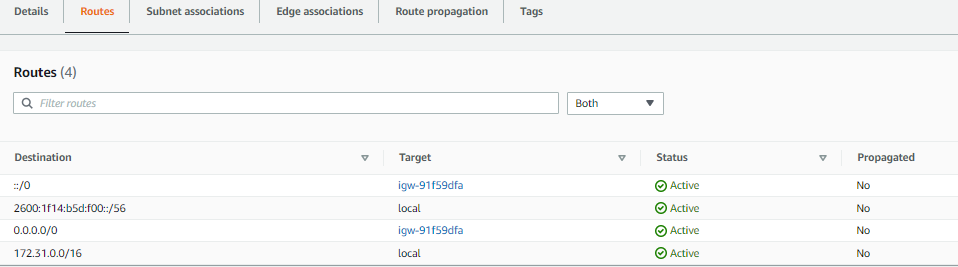

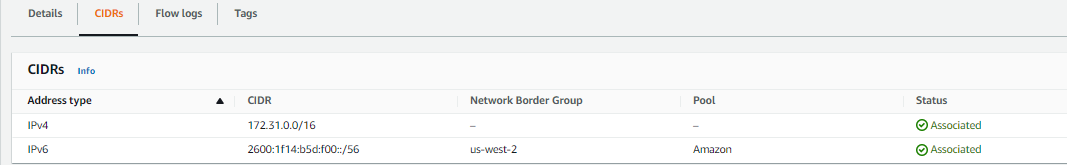

Below is the reference for Route table and CIDRs :

Outbound Connections

BryteFlow connects to any source and destination endpoints outside of its VPC using NAT/VPN or API Gateways.

A NAT gateway is a Network Address Translation (NAT) service. You can use a NAT gateway so that instances in a private subnet can connect to services outside your VPC but external services cannot initiate a connection with those instances. For more details refer to AWS guide.

To connect the VPC to remote network for enabling source/destination endpoint connections, use AWS VPN. For more details refer to AWS guide.

Creating S3 Bucket

Please refer AWS documentation for creating S3 bucket.

- http://docs.aws.amazon.com/AmazonS3/latest/gsg/CreatingABucket.html

- Also, its highly recommended to block all public access to S3, refer Setting Permissions: Block Public Access for more details.

Configuring EMR Cluster

Prior to launching an EMR cluster its recommended to verify the service limits for EMR within your AWS region.

When using BryteFlow in,

- Standard or Hybrid environment its recommended to have 3 instances for the EMR cluster(1 master and 2 core nodes)

- High Availability mode its recommended to have 6 instances. 3 additional are for DR mode whenever any failure occurs.

To know more about AWS service limits and how to manage service limits click on the respective links.

Launch EMR Cluster from AWS console :

Login to your AWS account and select the correct AWS region where your S3 bucket and EC2 container are located.

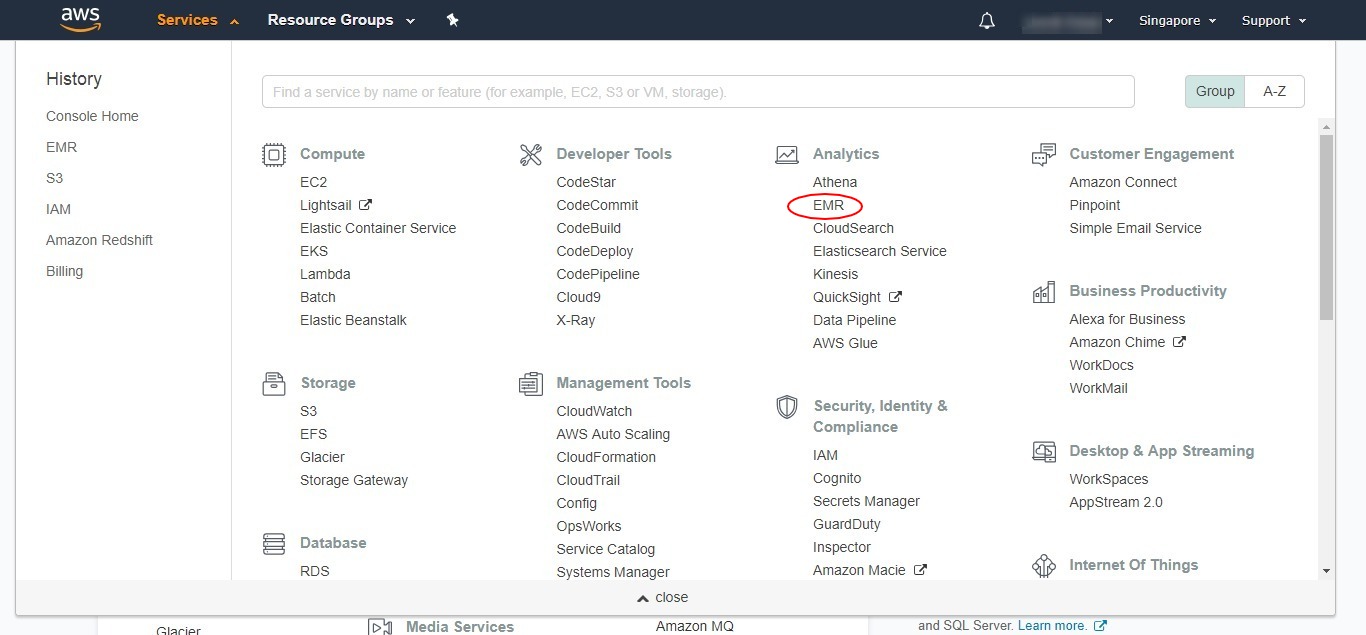

- Click on the services drop down in the header.

- Select EMR under Analytics or you can search for EMR.

- Click on the ‘Create cluster’ button

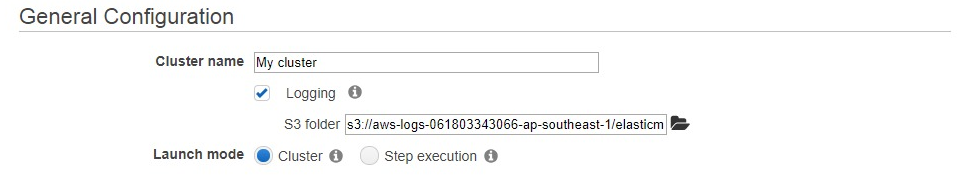

- In Create Cluster – Quick Options please type in Cluster Name (Name you will identify the Cluster with)

keep the Logging check box selected, the S3 folder will be selected by default. Launch mode should be Cluster.

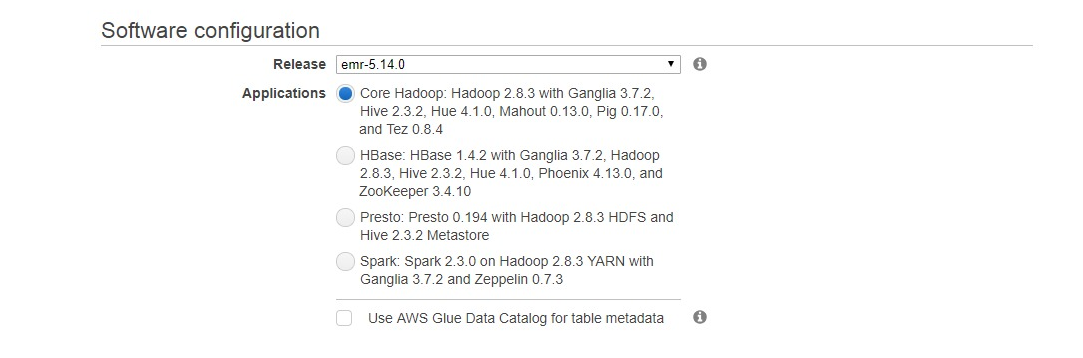

- Under Software configuration select release emr-5.14.0 and in Applications select Core Hadoop: Hadoop 2.8.3 with Ganglia 3.7.2, Hive 2.3.2, Hue 4.1.0, Mahout 0.13.0, Pig 0.17.0, and Tez 0.8.4

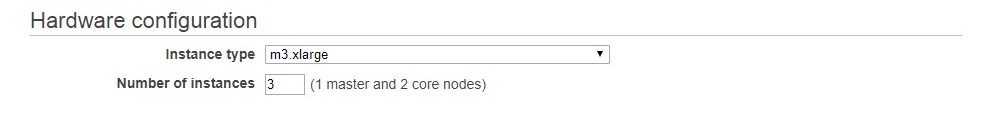

- Hardware configuration- Please select Instance type and number of Instances you want to run.

- Security and access –

Please select the EC2 key pair that you want to use with the EMR Cluster. This key will be used to SSH into the Cluster. Permission should be set to the ‘BryteFlowEc2Role’ created earlier. - You can add tags to your EMR cluster and configure the tag in Ingest to avoid the re-configuration in the software in case you plan to terminate the cluster and create a new. This helps user to keep control of their clusters and save cost on AWS resources.

- Click on the ‘Create cluster’ button (provisioning of a cluster can take up to 15-20 min).

Additional AWS services

As BryteFlow uses several AWS resources to fulfill user requirements, the cost of these services are separate to BryteFlow charges and are charged by AWS for your account. If you are using Snowflake as a destination the cost of Snowflake Data warehouse is separate to BryteFlow.

Below list provides list of other billable services within BryteFlow. Please use AWS Pricing calculator to estimate AWS cost of additional resources.

A sample estimate for a high availability setup with a source data volume of 100 GB is provided for reference here. Please note not all services are mandatory, the size and no. of services will vary for each customer environment. The sample is just for reference purposes.

Please note: ALL AWS Services has a service limit, do check for sufficient resources before launching the services, if needed request for increase in quota following AWS guidelines. Please refer to the AWS guide to check the service limit corresponding to each service.

| Service | Mandatory | Billing Type | Service Limits |

| AWS EC2 | Y | Pay-as-you-go | check EC2 quota here |

| Additional EBS storage attached to EC2 | Y | Based on size | |

| AWS S3 | N | Pay-as-you-go | check Amazon S3 quota here |

| AWS EMR | N (only required for S3 as a destination ) | Pay-as-you-go | check EMR quota here |

| AWS Redshift | N | Pay-as-you-go | check Amazon Redshift quota here |

| AWS CloudWatch Logs and metrics | N | Pay-as-you-go | check EC2 quota here |

| AWS SNS | N | Pay-as-you-go | check AWS SNS quota here |

| AWS Dynamo DB

(5 WCUs /5 RCUs) |

N | Pay-as-you-go | check Dynamo DB quota here |

| Snowflake DW | N | Pay-as-you-go | |

| AWS Lambda | N | Pay-as-you-go | check AWS Lambda quota here |

| AWS KMS | N | Pay-as-you-go | check Amazon KMS quota here |

| AWS Athena | N | Pay-as-you-go | check Amazon Athena quota here |

| AWS Kinesis | N | Pay-as-you-go | check Amazon Kinesis quota here |

BryteFlow recommends to use below mentioned instance types for EC2 with EBS volumes attached:

| EC2 Instance Type | BryteFlow Standard Edition | BryteFlow Enterprise Edition | Recommended EBS volumes | EBS Volume Type |

| t2.small | Volume < 100 GB | NA | 50 GB | General Purpose SSD (gp2) Volumes |

| t2.medium | Volume >100 and < 300 GB | Volume < 100 GB | 100 GB | General Purpose SSD (gp2) Volumes |

| t2.large | Volume > 300 GB and < 1 TB | Volume >100 and < 300 GB | 500 GB | General Purpose SSD (gp2) Volumes |

| m4.large | NA | Volume > 300 GB and < 1 TB | 500 GB | General Purpose SSD (gp2) Volumes |

IMDS Settings and Recommendations

–instance-id <instance id> \

–http-endpoint disabled

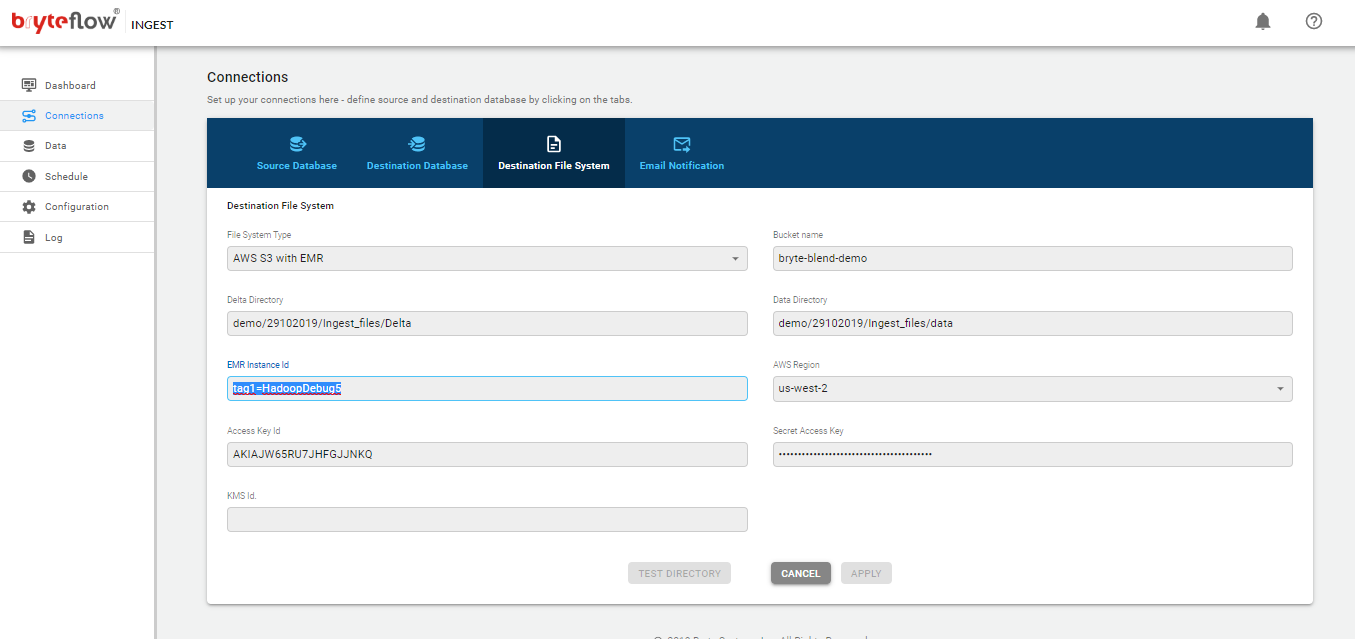

Managing Access Keys

BryteFlow uses Access key and secret key to authenticate to AWS services like S3, Redshift etc. It requires AWS access key id and AWS secret key for accessing the S3 and other services from on-premises. AWS IAM Roles are used when using an AMI or an EC2 server.

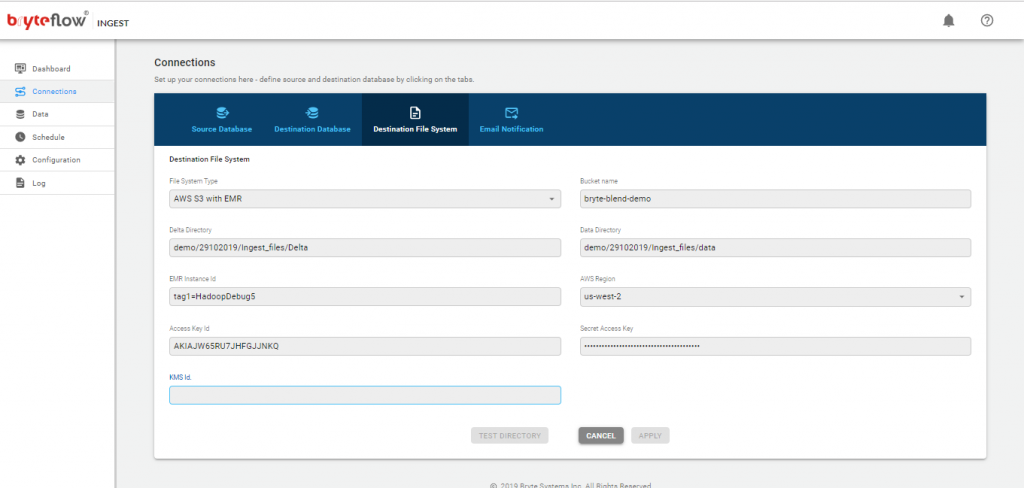

For security reasons, when using access keys or KMS Keys its recommended to rotate keys after certain time, say at a period of 90 days. After the new keys are generated it needs to updated in Ingest’s configuration. Please follow below mentioned steps:

- Open Ingest instance which needs to be updated with new key in the web browser

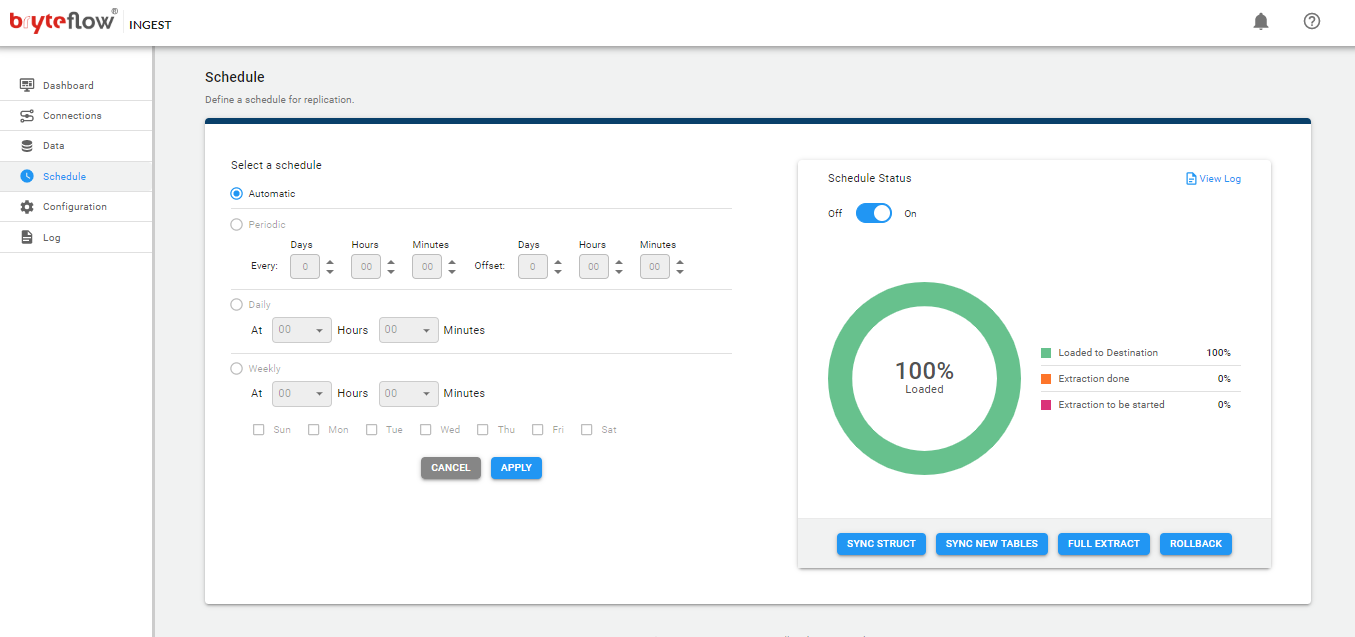

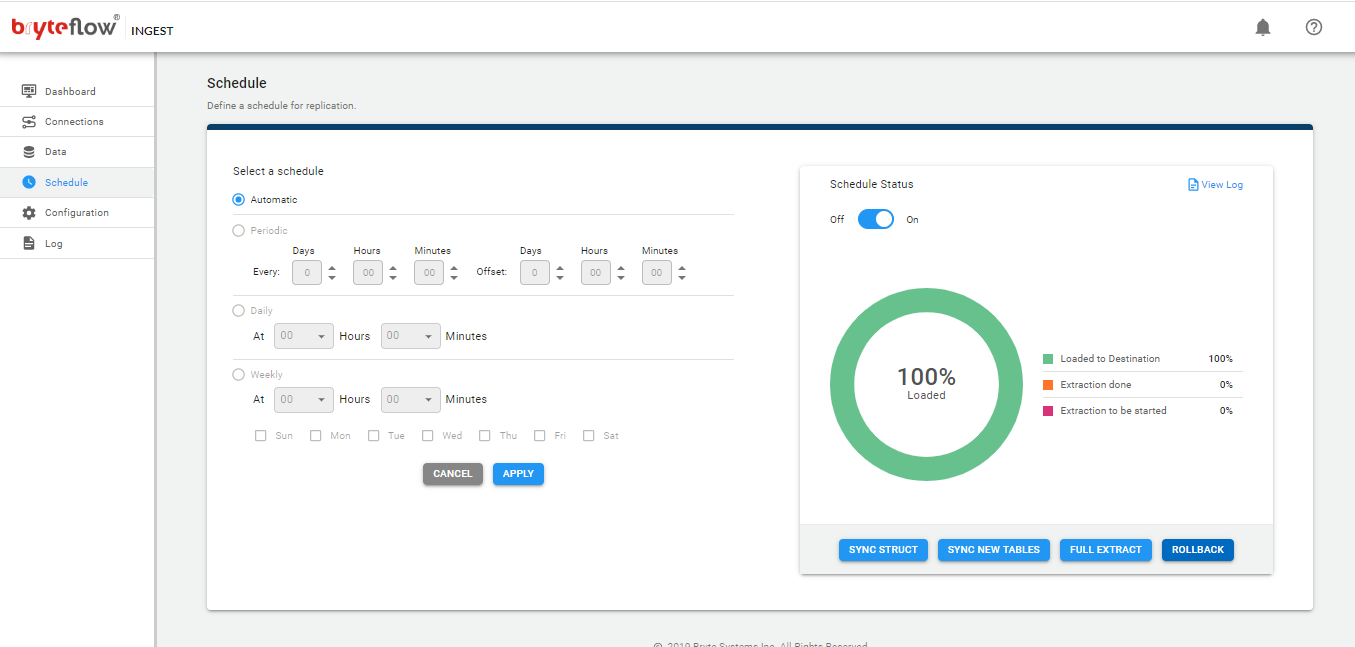

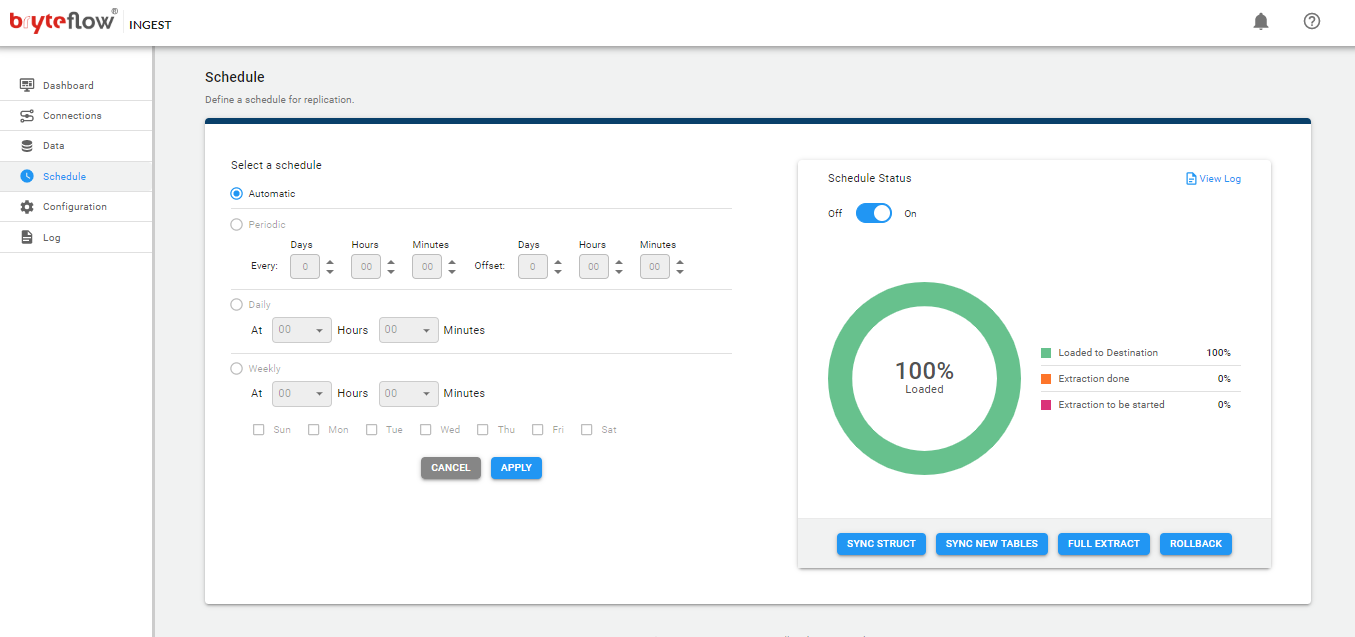

- Go to ‘Schedule’ tab. Stop the replication schedule for BryteFlow Ingest by turning ‘OFF’ the Schedule button.

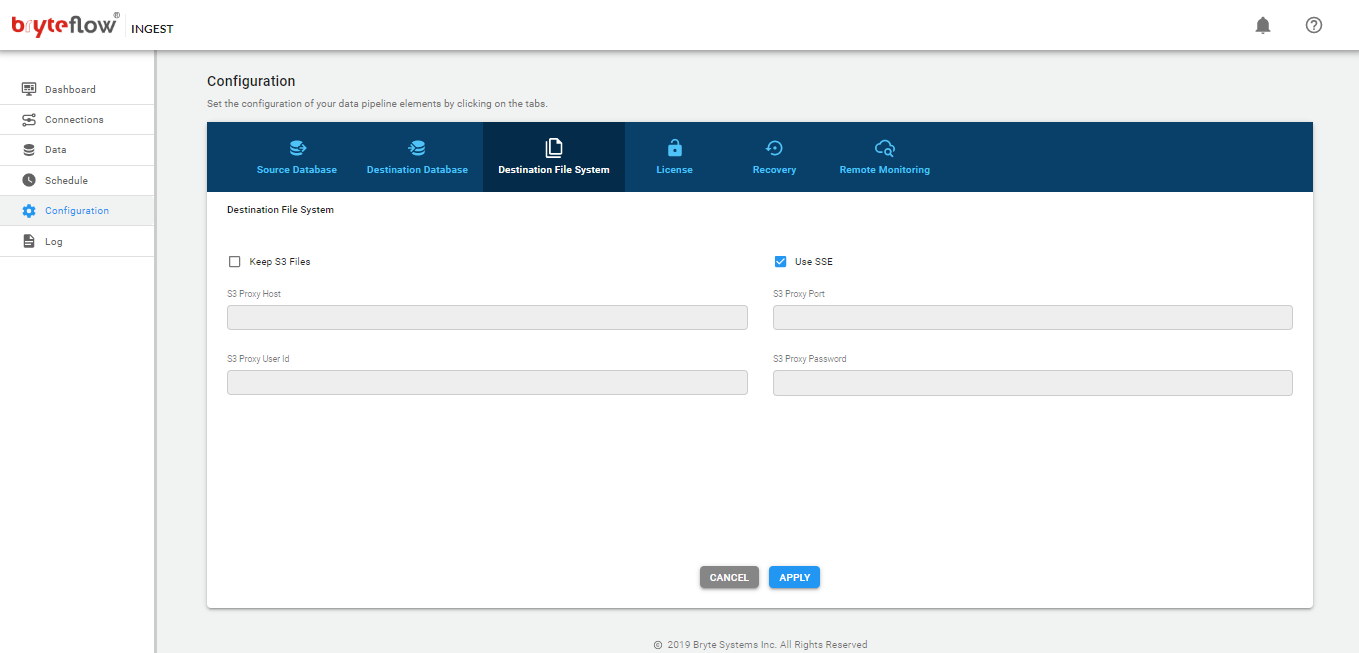

- Go to ‘Connections’-> ‘Destination File System’

- Enter the new ‘Access key’ and ‘Secret access key’ in the respective text box and hit ‘Apply’

- Once the keys are saved, resume replication by turning ‘ON’ the schedule.

Details of key rotation can be found in AWS documentation https://docs.aws.amazon.com/kms/latest/developerguide/rotate-keys.html

IAM role for ‘BryteFlow’ should have recommended policies attached. Please refer to section ‘AWS Identity and Access Management (IAM) for BryteFlow‘ for the list of policies and permissions.

Data Security and Encryption

BryteFlow ensures various mechanisms for data security by applying encryption,

- With KMS, BryteFlow Ingest uses customer specified KMS key to encrypt customer data on AWS S3, Secrets Manager, DynamoDB. Configure the customer KMS id in BryteFlow, which is used to encrypt data stored on various AWS services.

- With AES-256, BryteFlow Ingest supports server side encryption. Amazon S3 server-side encryption uses one of the strongest block ciphers available, 256-bit Advanced Encryption Standard (AES-256), to encrypt your data, which is supported by BryteFlow by default.

BryteFlow Ingest doesn’t store any data outside of Customer’s designated environment. It can store data into the below AWS services depending on the customer requirements:

- Amazon EC2, only for temporary staging and pipeline configuration, refer the AWS guide for enabling encryption.

- EBS Storage, only for temporary staging and pipeline configuration, refer the AWS guide for enabling encryption.

- Amazon S3, only when S3 is a destination endpoint. Refer the AWS guide for enabling encryption

- Amazon Redshift, only when Redshift is a destination endpoint. Refer the AWS guide for enabling encryption.

- Amazon Athena, only when Athena is a destination endpoint. Refer the AWS guide for enabling encryption.

- Amazon Aurora DB, only when S3 is a destination endpoint. Refer the AWS guide for enabling encryption.

- Amazon DynamoDB, when configured for High Availability. Refer the AWS guide for enabling encryption.

- AWS Secrets Manager, for storing all credentials setup for the pipeline. Refer the AWS guide for details on encryption.

Also, all Non-AWS destination endpoints:

- Snowflake – Data encryption on Snowflake

- Kafka – BryteFlow uses TLS encryption to load data to Kafka streams. Setup encryption in Kafka streams refer the user guide.

As a best practice BryteFlow recommends to enable encryption on all the services wherever the data is getting stored.

Key Rotation

BryteFlow recommends to rotate all keys that are being configured in Ingest, every 90 days period for security reasons. This includes all the sources and destination endpoints credentials. Below are some references for AWS services for more details.

For AWS KMS key rotation refer to the AWS guide.

For AWS Redshift key rotation refer to the AWS Guide.

Follow the recommendations as below for all Non-AWS sources and destinations:

| External Applications | Reference for Key Rotation |

| SAP | SAP password rotation |

| Oracle | Oracle Password Rotation |

| MS SQL Server | MS SQL Server password rotation |

| Salesforce | Salesforce password rotation |

| MySQL | MySQL password rotation |

| PostgreSQL | PostgreSQL password rotation |

Configure Data Encryption

BryteFlow adheres to AWS recommendation of applying encryption of data at rest and in transit. It can be achieved by creating the keys and certificates that are used for encryption.

For more information, refer to AWS documentation on Providing Keys for Encrypting Data at Rest with Amazon EMR and Providing Certificates for Encrypting Data in Transit with Amazon EMR Encryption.

For Amazon Redshift destination, its recommended to enable database encryption to protect data at-rest. Refer to AWS guide for more details.

AWS Secrets Manager uses encryption via AWS KMS, for more details refer to AWS Guide.

Specifying Encryption Options Using the AWS Console

Choose options under Encryption according to the following guidelines :

- Choose options under At rest encryption to encrypt data stored within the file system.

Under S3 data encryption, for Encryption mode, choose a value to determine how Amazon EMR encrypts Amazon S3 data with EMRFS. BryteFlow Ingest supports the below encryption mechanism.

- SSE-S3

- SSE-KMS or CSE-KMS

Encryption in-transit

BryteFlow uses SSL to establish any connection(AWS services, databases etc.) for data flow, ensuring secure communication in-transit.

SSL involves complexity of managing security certificates and its important to keep the certificates active all the time for uninterrupted service.

AWS Certificate Manager handles the complexity of creating and managing public SSL/TLS certificates. Customers can have settings to get notified before the expiry date is approaching and can renew upfront, so that the services run uninterruptedly. Refer AWS guide to manage ACM here.

Storing and Managing Credentials

BryteFlow uses AWS Secrets Manager to store any/all credentials. This includes both source and destination endpoint credentials for Databases and APIs. All the secrets are encrypted using the KMS encryption. BrytFlow creates a secret in AWS secrets Manager for all credentials alongwith BryteFlow admin user details and also allows to modify the secret from the GUI as well. Go to the respective setup page in BryteFlow application to update the secret details. Its recommended to rotate all keys stored in Secrets Manager, refer to AWS guide for the same.

Testing The Connections

Verify if the connectivity to remote services is available.

To test the remote connections you would need telnet utility. Telnet has to be enabled from the control panel in Turn on Windows Feature.

- Go to start and then Run and type CMD, and click OK.

- Type the following at the command prompt.

telnet <IP address or Hostname> Port number

For example

telnet 192.168.1.1 8081

If the connection is unsuccessful then an error will be shown.

If the command prompt window is blank only with the cursor, then the connection is successful and the service is available.

Connection error to source or destination database server.

In case of any connectivity issue to source or destination database, please check if the BryteFlow server is able to reach the remote host:port.

You can test the connection to the IP address and port using the telnet command.

telnet <IP address or Hostname> Port number

Or you can use the PowerShell command to verify the connection.

tnc <IP address or Hostname> Port number

Unable to start windows service

Error: Unable to start Windows service ‘BryteFlow Ingest’

Resolution: If Java is not installed or the system path is not updated, then Ingest service will throw an error on startup. Install Java 1.8 or add the java path to the system path. To verify the same, goto CMD and type: java -version

If the response is ‘unable to recognize command’, please check the java path in the Environment variables ‘path’ and update to correct path.

Application not able to launch

Once the BryteFlow Ingest service is installed and started, but the application is not launching on the browser

Resolution: BryteFlow application requires java 1.8 to function. Please install the correct version of java and restart the service.

If Java 11 is installed, then Ingest service will startup up, but the page will display an error message.

To verify the version, goto CMD and type: java -version

Expected result : java 1.8 <any build>

For Example: java version “1.8.0_171”

Java(TM) SE Runtime Environment (build 1.8.0_171-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)

If the java version is higher please uninstall java and install the required version.

Grants not available on the database

Error: ‘Cannot Open database ‘demo’ requested by the login’

Resolution: The user does not have the grants to connect to the database. Apply the correct grants to the user and try again.

Login failed for user

Error: ‘Login failed for User ‘Demo’

Resolution: The user does not exist or there is a typo in the username or the password is incorrect.

MS SQL Server as a source connector

Please follow below recommended steps to setup your MS SQL source connector.

Preparing MS SQL Server

SQL Server setup depends on the replication option chosen, Change Tracking OR Change Data Capture. Prerequisites for each option are described in detail. Follow the link for details.

Security for MS SQL Server

The BryteFlow Ingest database replication login user should have VIEW CHANGE TRACKING permission to view the Change Tracking information.

--Review all change tracking tables that are = 1 enabled, or = 0 disabled

SELECT *

FROM sys.all_objects

WHERE object_id IN (SELECT object_id

FROM sys.change_tracking_tables

WHERE is_track_columns_updated_on = 1);

Verification of MS SQL Server source

To verify if change tracking is already enabled on the database run the following SQL queries. If a row is returned then Change Tracking has been enabled for the database

SELECT *

FROM sys.change_tracking_databases

WHERE database_id = DB_ID('databasename');

The following SQL will list all the tables for which Change Tracking has been enabled for the selected database

USE databasename;

SELECT sys.schemas.name as schema_name,

sys.tables.name as table_name

FROM sys.change_tracking_tables

JOIN sys.tables ON sys.tables.object_id = sys.change_tracking_tables.object_id

JOIN sys.schemas ON sys.schemas.schema_id = sys.tables.schema_id;

Data Types in MS SQL Server

BryteFlow Ingest source supports most MS SQL Server data types, see the following table for the supported list:

MS SQL Server Data Types

| BIGINT | REAL | VARCHAR (max) |

| BIT | FLOAT | NCHAR |

| DECIMAL | DATETIME | NVARCHAR (length) |

| INT | DATETIME2 | NVARCHAR (max) |

| MONEY | SMALLDATETIME | BINARY |

| NUMERIC (p,s) | DATE | VARBINARY |

| SMALLINT | TIME | VARBINARY (max) |

| SMALLMONEY | DATETIMEOFFSET | TIMESTAMP |

| TINYINT | CHAR | UNIQUEIDENTIFIER |

| VARCHAR | HIERARCHYID | XML |

Oracle DB as a source connector

Please follow below recommended steps to setup your Oracle source connector.

Preparing Oracle on Amazon RDS

Enable Change Tracking for a database on Amazon Oracle RDS

- In Oracle on Amazon RDS, the supplemental logging should be turned on at the database level.

- Supplemental logging is required so that additional details are logged in the archive logs.

To turn on supplemental logging at the database level, execute the following queries.exec rdsadmin.rdsadmin_util.alter_supplemental_logging('ADD','ALL');

- To retain archived redo logs on your DB instance, execute the following command (example 24 hours)

exec rdsadmin.rdsadmin_util.set_configuration('archivelog retention hours',24); - To turn on supplemental logging at the table level, execute the following statement

ALTER TABLE <schema>.<tablename> ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;

Preparing On-premises Oracle

Enable Change Tracking for an On-Premises Oracle Server

Execute the following queries on Oracle Server to enable change tracking.

- Oracle database should be in ARCHIVELOG mode.

- The supplemental logging has to be turned on at the database level. Supplemental logging is required so that additional details are logged in the archive logs.

To turn on supplemental logging at the database level, execute the following statements:ALTER DATABASE ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;

- Alternatively to turn on minimal database supplemental logging execute the following statements:

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA; ALTER DATABASE FORCE LOGGING;

- In Oracle, ensure that supplemental logging is turned on at the table level. To turn on supplemental logging at the table level, execute the following statement:

ALTER TABLE <schema>.<tablename> ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;

Security for Oracle

The Oracle user running BryteFlow Ingest must have the following security privileges:

SELECT access on all tables to be replicated

The following statement should return records…

SELECT * FROM V$ARCHIVED_LOG;

If no records are returned, select access on V_$ARCHIVED_LOG should be provided, or check if the database is in ACHIVELOG mode.

The following security permissions should be assigned to the user

CREATE SESSION

SELECT access on V_$LOGMNR_CONTENTS

SELECT access on V_$LOGMNR_LOGS

SELECT access on ANY TRANSACTION

SELECT access on DBA_OBJECTS

EXECUTE access on DBMS_LOGMNR

Run the following grant statements for <user> for the above requirements

GRANT SELECT ON V_$ARCHIVED_LOG TO <user>; GRANT SELECT ON V_$LOGMNR_CONTENTS TO <user>; GRANT EXECUTE ON DBMS_LOGMNR TO <user>; GRANT SELECT ON V_$LOGMNR_LOGS TO <user>; GRANT SELECT ANY TRANSACTION TO <user>; GRANT SELECT ON DBA_OBJECTS TO <user>;

Verification of Oracle source

To verify if Oracle is setup correctly for change detection execute the following queries.

| Condition to be checked | SQL to be executed | Result expected |

|---|---|---|

| Is ArchiveLog mode enabled? |

SELECT log_mode FROM V$DATABASE; |

ARCHIVELOG |

| Is Supplemental logging turned on at database level? |

SELECT supplemental_log_data_min FROM V$DATABASE; |

YES |

| Is Supplemental Logging turned on at table level? |

SELECT log_group_name,

table_name,

always,

log_group_type

FROM dba_log_groups;

|

RESULT <log group name>, <table name>, ALWAYS, ALL COLUMN LOGGING |

Data Types in Oracle

BryteFlow Ingest source supports most Oracle data types, see the following table for the supported list:

Oracle Data Types

| BINARY_DOUBLE | BINARY_FLOAT | CHAR |

| DATE | INTERVAL DAY TO SECOND | LONG |

| LONG RAW | NCHAR | NUMBER |

| NVARCHAR | RAW | REF |

| TIMESTAMP | TIMESTAMP WITH LOCAL TIME ZONE | VARCHAR2 |

Preparing On-premises MySQL

To prepare MySQL for change tracking perform the following steps.

To enable binary logging, the following parameters need to be configured as below in my.ini file on MySQL on Windows or in my.cnf file on MySQL on UNIX:

| Parameter | Value |

|---|---|

| server_id | Any value from 1. E.g. server_id = 1 |

| log_bin=<path> | Path to the binary log file. E.g. log_bin = D:\MySQLLogs\BinLog |

| binlog_format | binlog_format=row |

| expire_logs_days | To avoid disk space issues it is strongly recommended not to use the default value (0). E.g. expire_log_days = 4 |

| binlog_checksum | This parameter can be set to binlog_checksum=none. BryteFlow does support CRC32 as well |

| binlog_row_image | binlog_row_image=full |

Preparing MySQL on Amazon RDS

Enabling Change tracking on MySQL on Amazon RDS

To enable change tracking MySQL on Amazon RDS perform the following steps.

- In the AWS management console, for MySQL on Amazon RDS create a new DB parameter group and the following parameters should be configured as shown.

- The MySQL RDS DB instance should use the newly created DB parameter group for binary logging to be enabled.

binlog_format: binlog_format=row binlog_checksum : binlog_checksum=none OR CRC32.

Security for MySQL

The Ingest user id must have the following privileges:

- Replication client, and Replication Slave.

- Select privileges on the source tables designated for replication.

- Execute the following queries to grant permissions to a MySQL user.

CREATE USER 'bflow_ingest_user' IDENTIFIED BY '*****';

GRANT SELECT, REPLICATION CLIENT, SHOW DATABASES ON *.* TO bflow_ingest_user;

GRANT SELECT, REPLICATION slave, SHOW DATABASES ON *.* TO bflow_ingest_user; P.S. If the source DB type is Amazon RDS MySQL DB, please download 'mysqlbinlog.exe' and add its directory path in Windows 'Environment variable' 'PATH' on the Client machine(BryteFlow Server)

To enable change tracking MariaDB on Amazon RDS perform the following steps.

- In the AWS management console, for MariaDB on Amazon RDS create a new DB parameter group and the following parameters should be configured as shown.

- The MariaDB RDS DB instance should use the newly created DB parameter group for binary logging to be enabled.

binlog_format: binlog_format=row binlog_checksum : binlog_checksum=none OR CRC32.

Preparing PostgreSQL DB

- Use a PostgreSQL database that is version 9.4.x or later

- The IP address of the BryteFlow machine must be added to the pg_hba.conf

configuration file with the “replication” keyword in the database field.

Example:

host replication all 189.452.1.212/32 trust - Set the following parameters and values in the postgresql.conf configuration file:Set wal_level = logicalSet max_replication_slots to a value greater than 1.

The max_replication_slots value should be set according to the number of tasks that you want to run. For example, to run four tasks you need to set a minimum of four slots. Slots open automatically as soon as a task starts and remain open even when the task is no longer running. You need to manually delete open slots.

Set max_wal_senders to a value greater than 1.

The max_wal_senders parameter sets the number of concurrent tasks that can run.

Set wal_sender_timeout =0

The wal_sender_timeout parameter terminates replication connections that are inactive longer than the specified number of milliseconds. Although the default is 60 seconds, we recommend that you set this parameter to zero, which disables the timeout mechanism.

Note:- After changing these parameters, a restart is needed for PostgreSQL

- Grant superuser permissions for the user account specified for the PostgreSQL source database. Superuser permissions are needed to access replication-specific functions in the source.

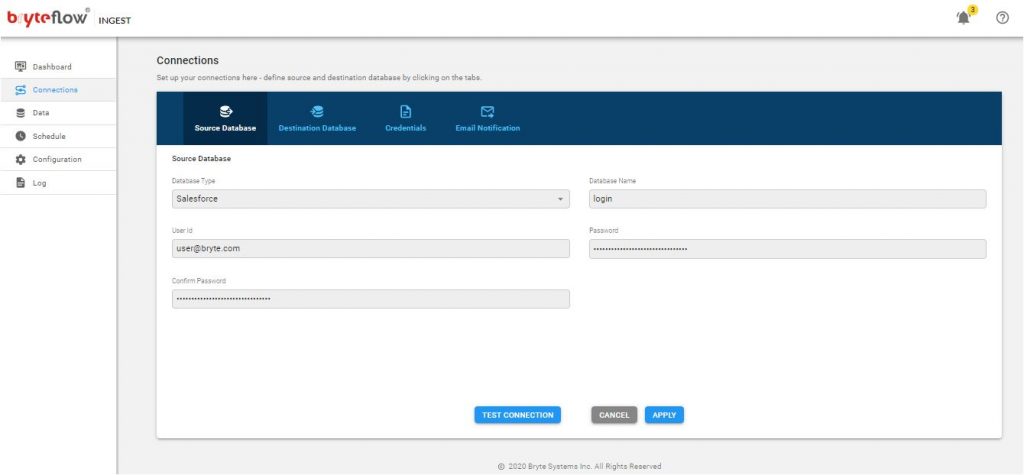

Preparing Salesforce account for Bryteflow Ingest

On Salesforce Change Data Capture is turned on by default, please do not turn it off.

You would need to generate a security token to be used with Bryteflow Ingest.

A security token is a case-sensitive alphanumeric key that appended to your Salesforce password.

eg. Your Salesforce password to be used with Ingest will be “<your Salesforce password ><security_token>”

A token can be generated by following these steps:

1. Log in to your salesforce account and go to My Setting > Personal > Reset my security token.

2. Click on Reset Security Token button. The token will be emailed to the email account associated with your salesforce account.

Preparing for BryteFlow Trigger solution

For the databases such as DB2, Firebird or for any RDBMS where there are no access to archive logs to get the change data, BryteFlow has the trigger option to get the change data.

For this solution there are certain prerequisites which needs to be implemented:

- BryteFlow replication user should have ‘select‘ access on the tables to be replicated

- BryteFlow replication user should have access to ‘create triggers’ to be replicated

- BryteFlow replication user should have access to ‘create tables’ on the source database

Please provide relevant grants to BryteFlow replication user in order to proceed with the Trigger Solution.

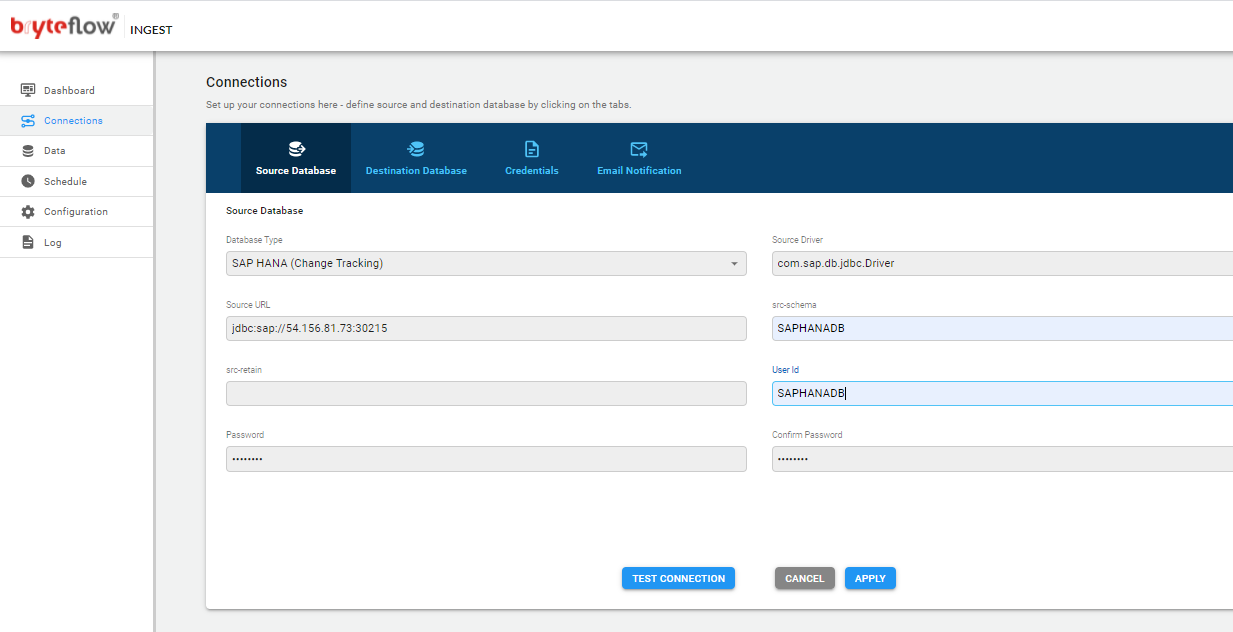

Pre-Requisites for SAP HANA (Change tracking) :

1.Create a user account for BryteFlow.

CREATE USER <USERNAME> PASSWORD <PASSWORD>;

2. BryteFlow replication user should have ‘select‘ access on the tables to be replicated.

BryteFlow replication user should have access to ‘create triggers’ to be replicated

Grant below priviledges to BryteFlow user created above.

GRANT SELECT, TRIGGER ON SCHEMA <YOURSCHEMA> TO <USERNAME>;

3. BryteFlow replication user should have access to a schema where it can create a table on the source database.

This is used to store transactions for restart and recoverability

Grant below priviledges to BryteFlow user created above.

GRANT CREATE ANY ON SCHEMA <YOURSCHEMA> TO <USERNAME>;

Starting & Stopping BryteFlow Ingest

If you are using the AMI from AWS Marketplace, BryteFlow Ingest will be preinstalled as a service in Windows.

Alternatively, you can install the service by executing the following command using the Command Prompt(Admin).

- Navigate to the directory of the installation.

-

service.exe --WinRun4J:RegisterService

To Start BryteFlow Ingest

- Start the BryteFlow Ingest service using Windows Services or Windows Task Manager

- Type the URL in the Chrome browser

localhost:8081

To Stop Bryteflow Ingest

- Stop the BryteFlow Ingest service

- Replication processes can also be aborted immediately by going to Task Manager

-> Processes -> service.exe – and selecting “End Task”

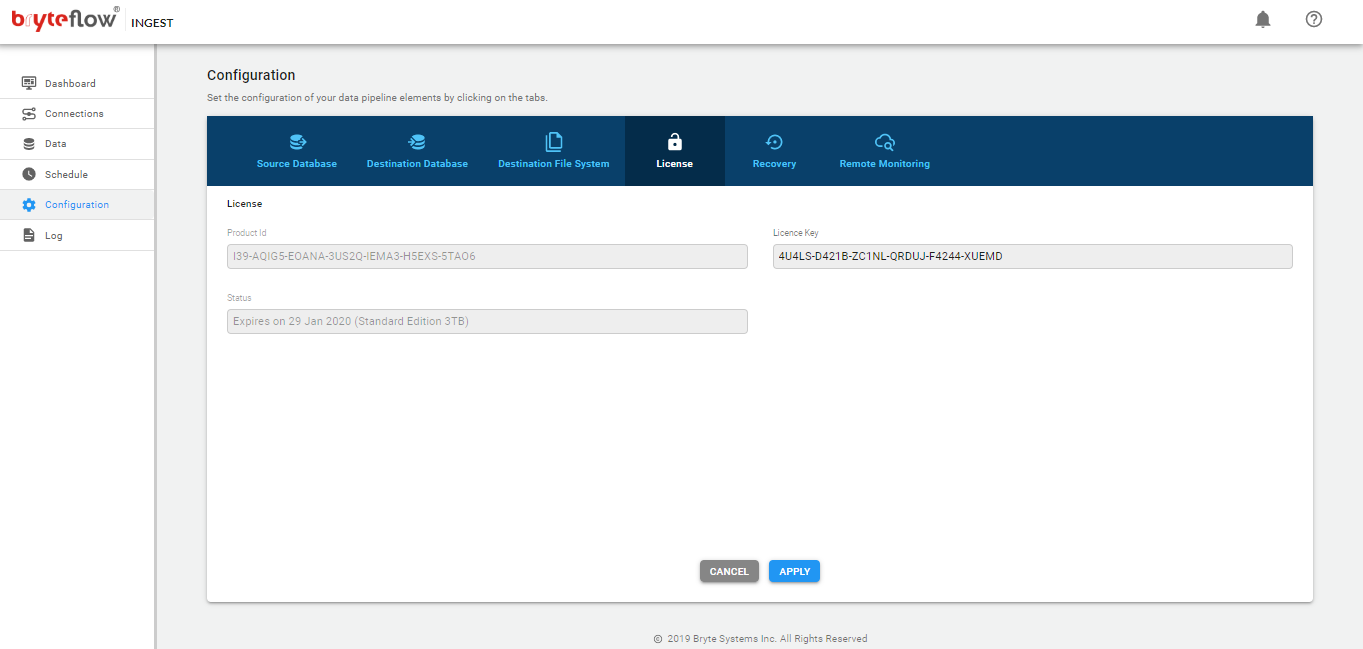

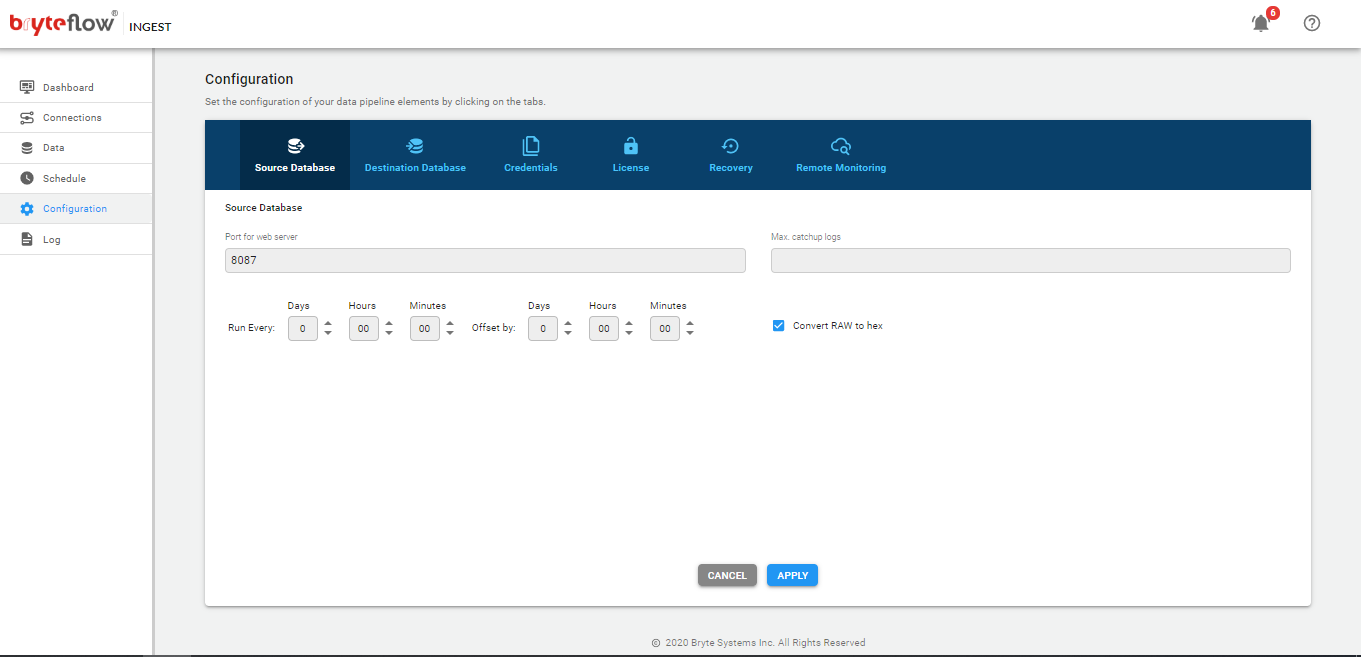

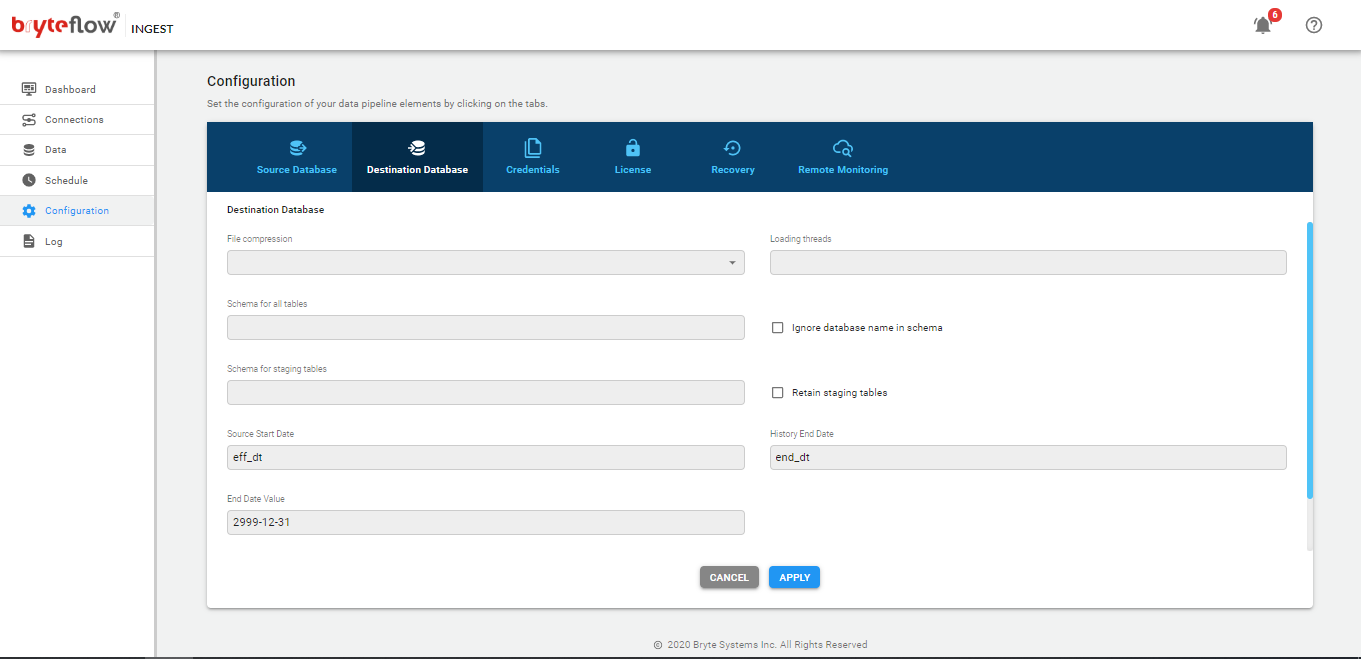

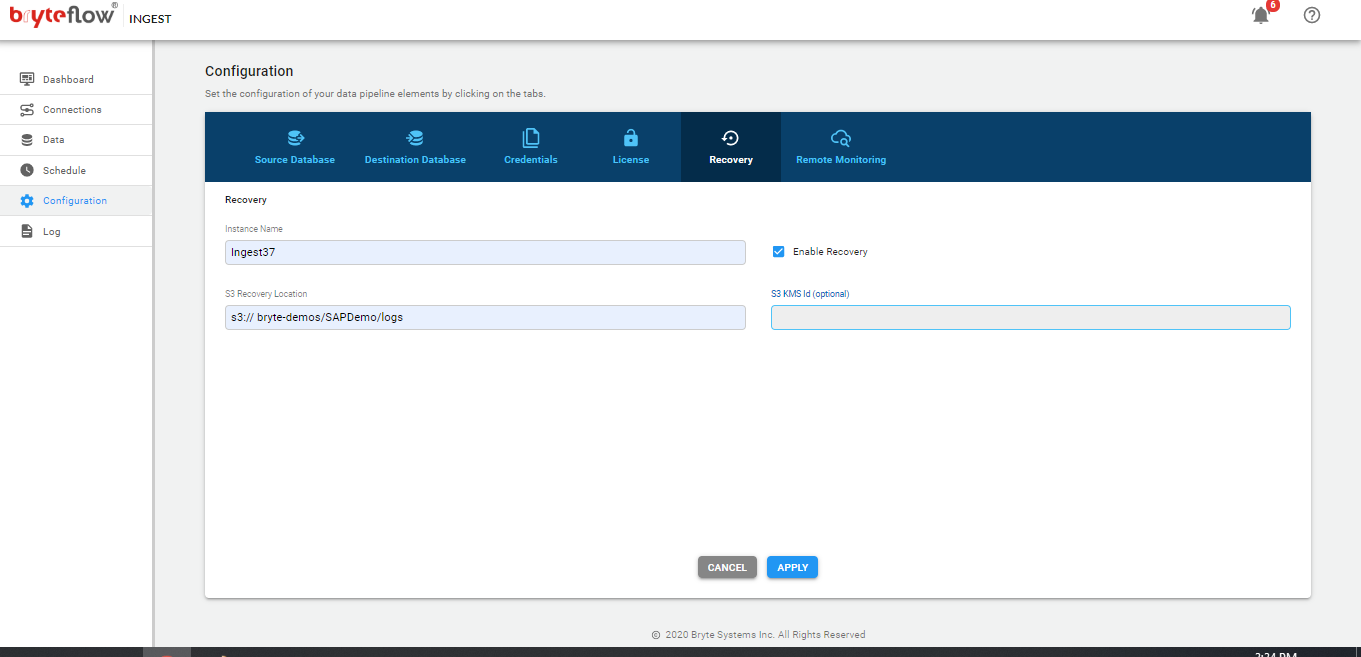

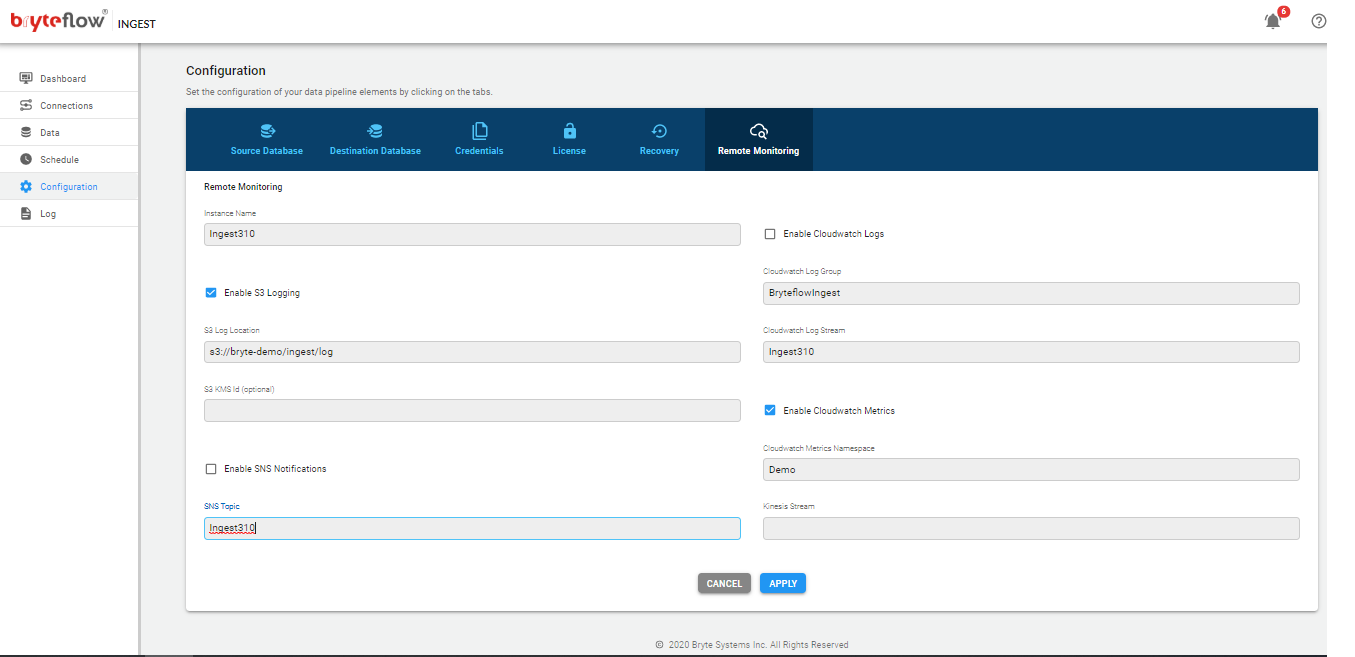

Configuration of BryteFlow Ingest

The configuration of BryteFlow Ingest is performed though the web console

- Type the URL in the Chrome browser

localhost:8081

The screen will then present the following tabs (left side of the screen)

- Dashboard

- Connections

- Data

- Schedule

- Configuration

- Log

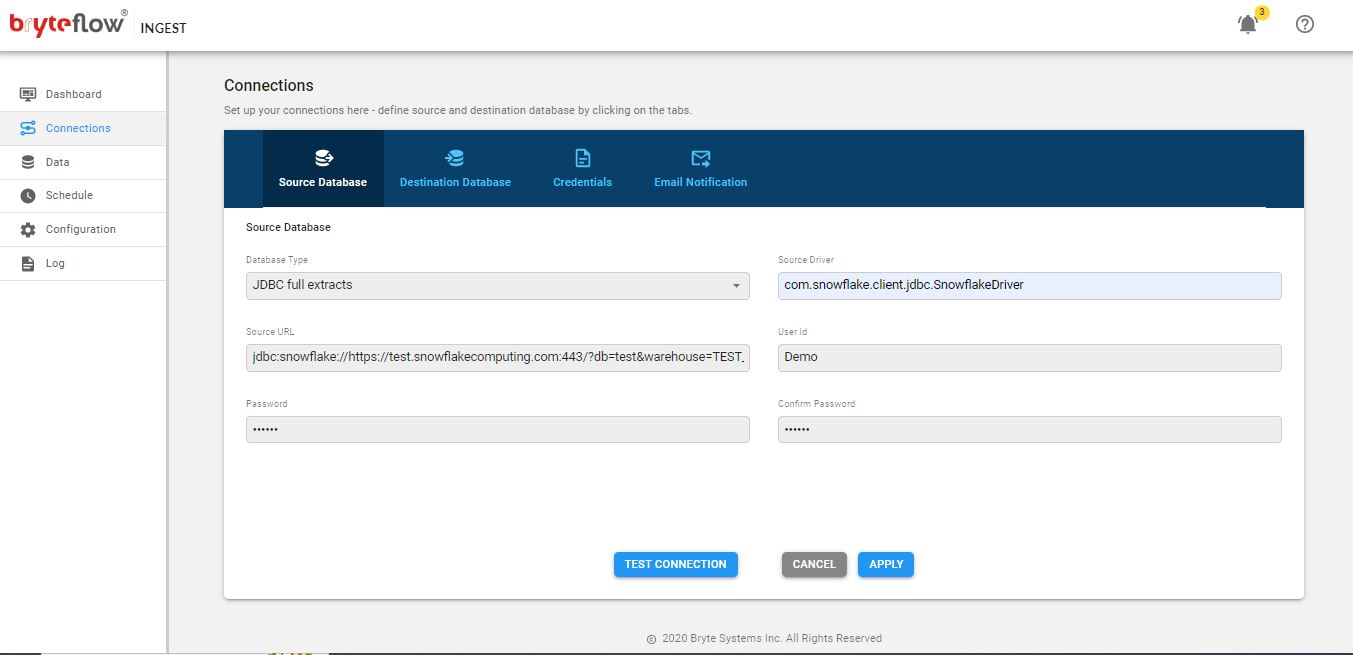

Configure Source Databases using below API :

POST http://host:port/ingest/api/ingest?cmd=conn&conn=s

Body:

func=save&src-db=<database name>&src-host=<database host>&src-options=&src-port=<database port>&src-pwd=<database password>&src-pwd2=<database password>&src-type=rds.oracle11lm&src-uid=<database user id>&type=src

Configure Destination Databases using below API :

POST http://host:port/ingest/api/ingest?cmd=conn&conn=d

Body:

dst-bucket=<S3 bucket>&dst-db=<database name>&dst-dir=<S3 work directory>&dst-host=<Redshift host>&dst-iam_role=<IAM Role>&dst-options=&dst-port=<Redshift port>&dst-pwd=<Redshift password>&dst-pwd2=<Redshift password>&dst-type=rds.redmulti&dst-uid=<Redshift user id>&func=save&type=dst

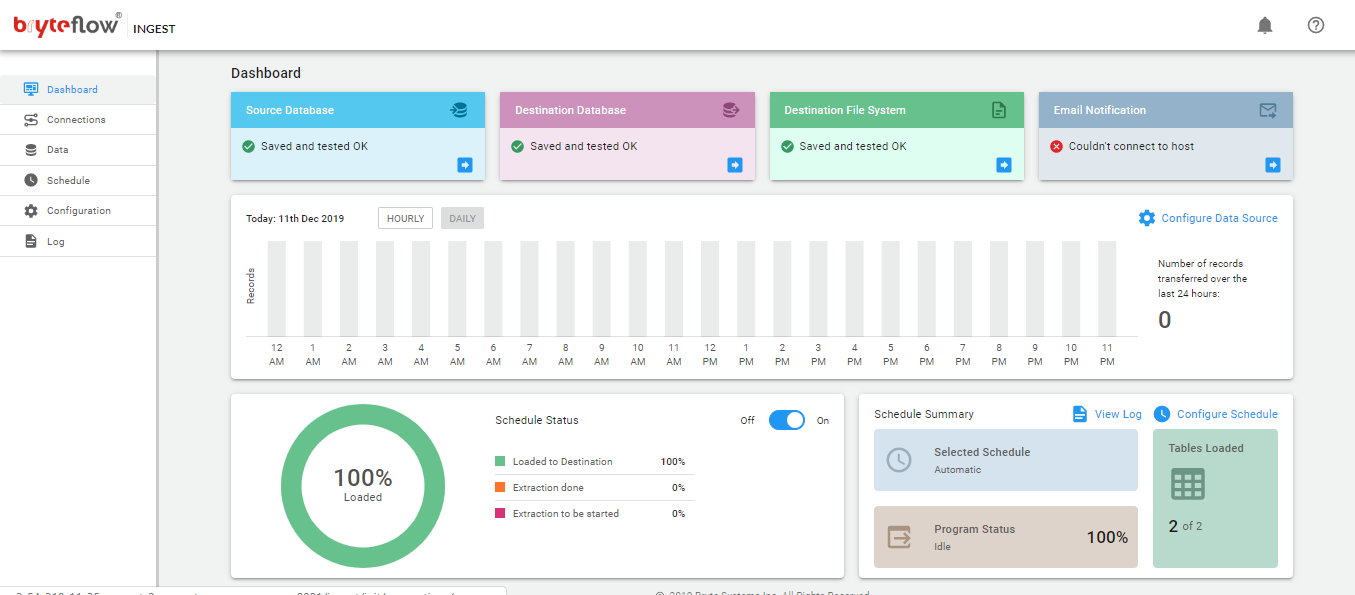

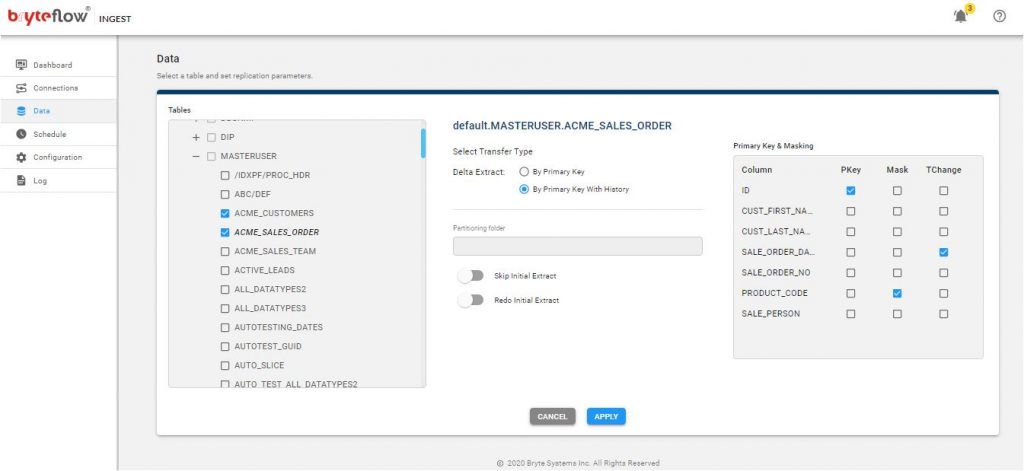

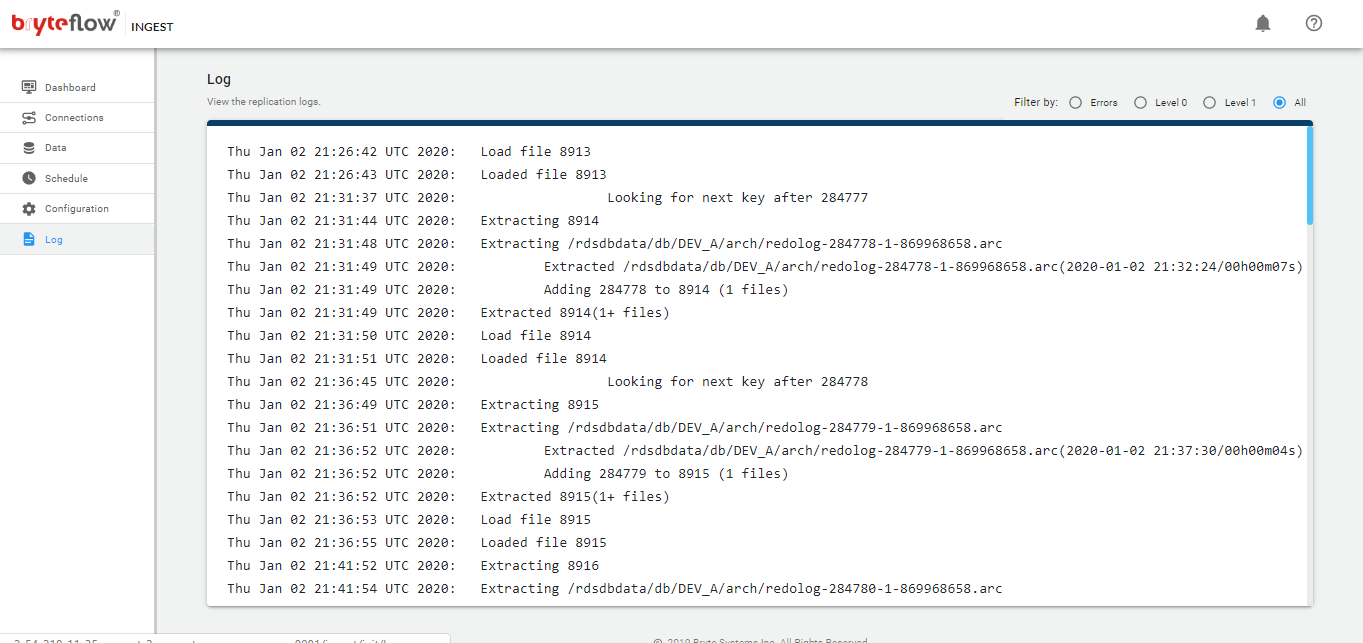

Dashboard

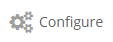

The dashboard provides a central screen when the overall status of this instance of BryteFlow Ingest can be monitored

- The Data Sources Transfer Summary shows the number of records transferred. When hourly is selected you can view the transfer statistics for 24 hours, if daily is selected the monthly statistics are displayed.

- The pie chart displays the status of the process

- Extraction, denoted by red

- Loading, denoted by orange

- Loaded, denoted by green

- Hovering on the bar graph gives the exact number of records transferred.

- The pie chart displays the status of the process

- Schedule Extract Status displays the schedule status.

- The Configure icon will take you to the configuration of the source tables, specifically the table, type of transfer, table primary key(s) and the selection of masked columns.

- The Dashboard provides quick access for configuration of BryteFlow Ingest (Source, Destination Database, Destination File System and Email Notification)

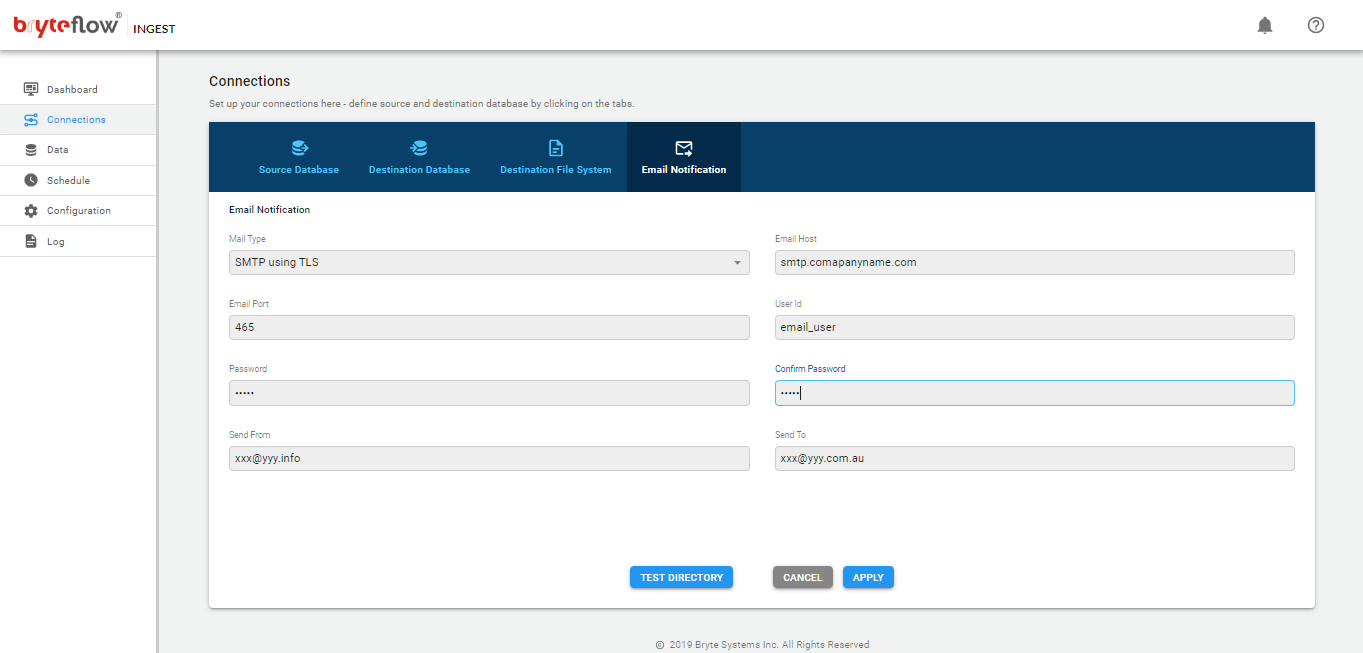

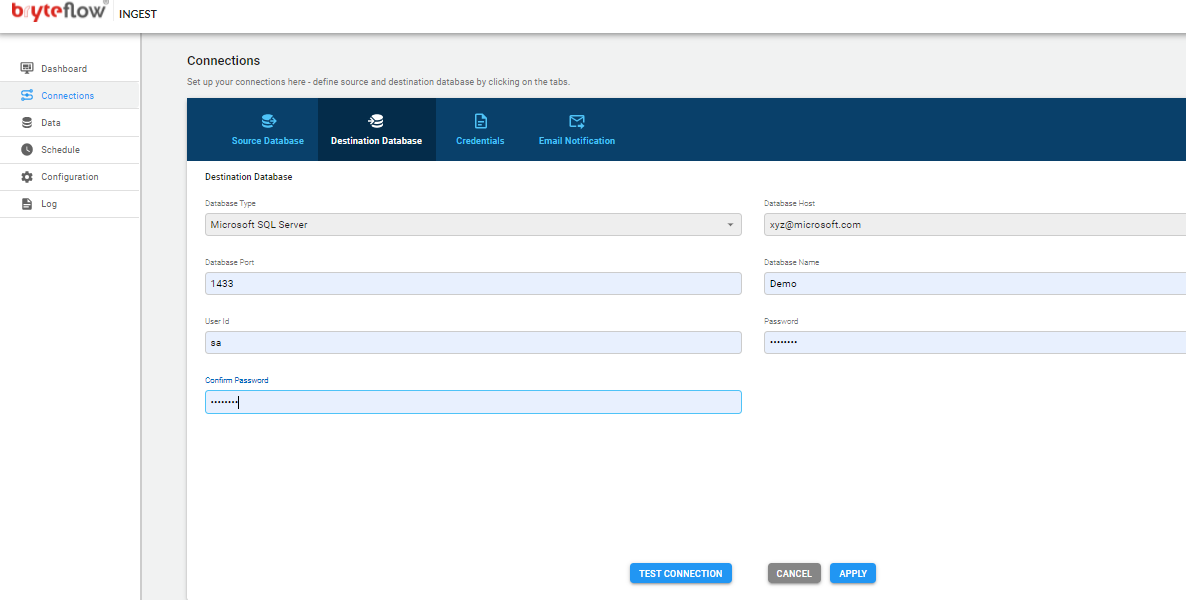

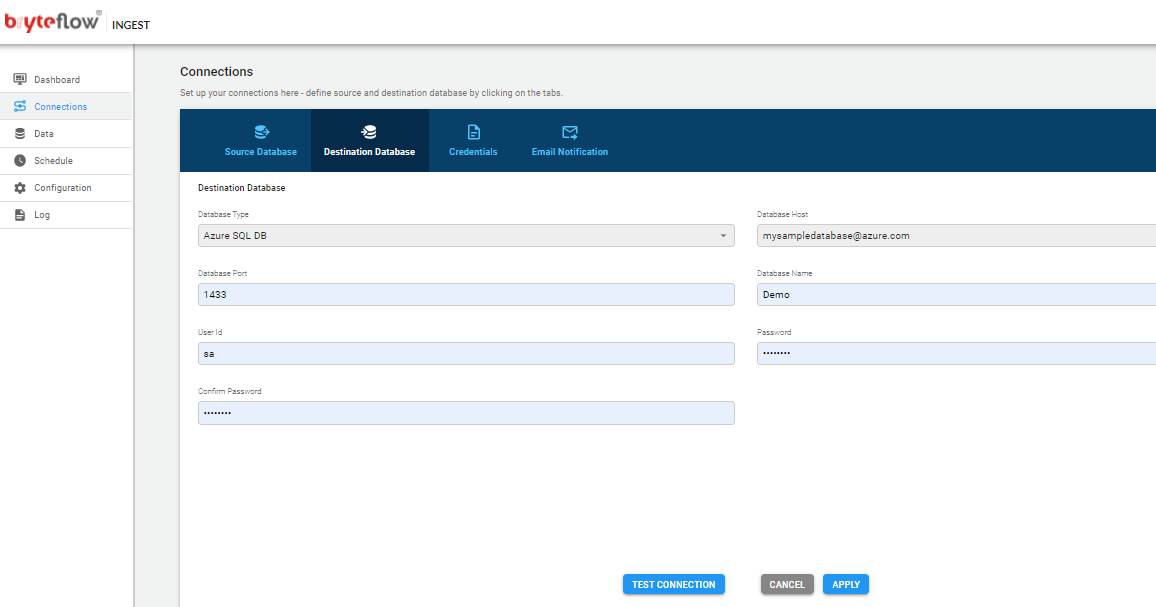

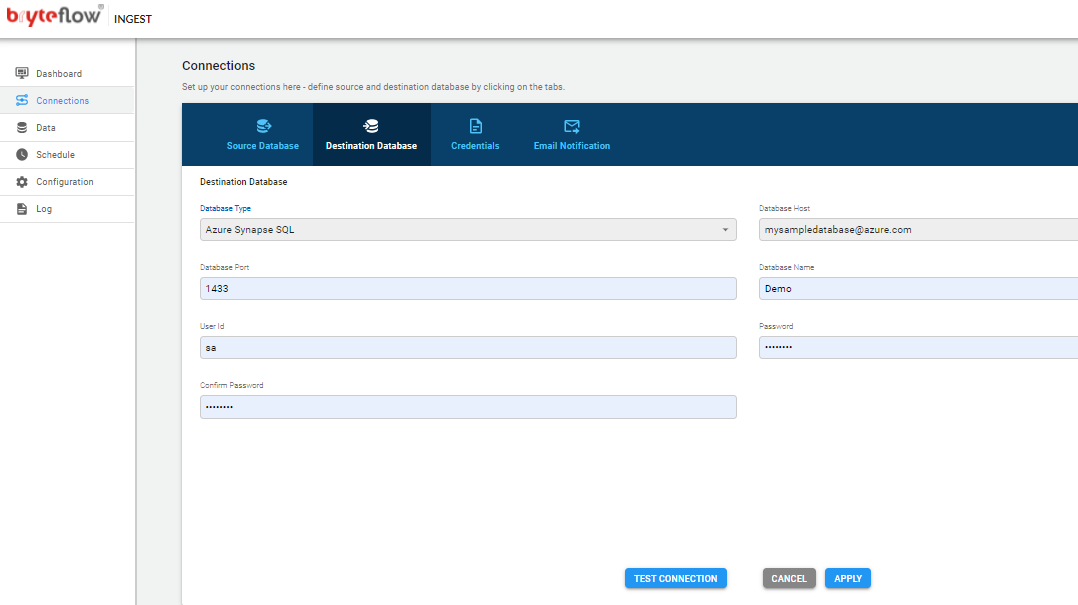

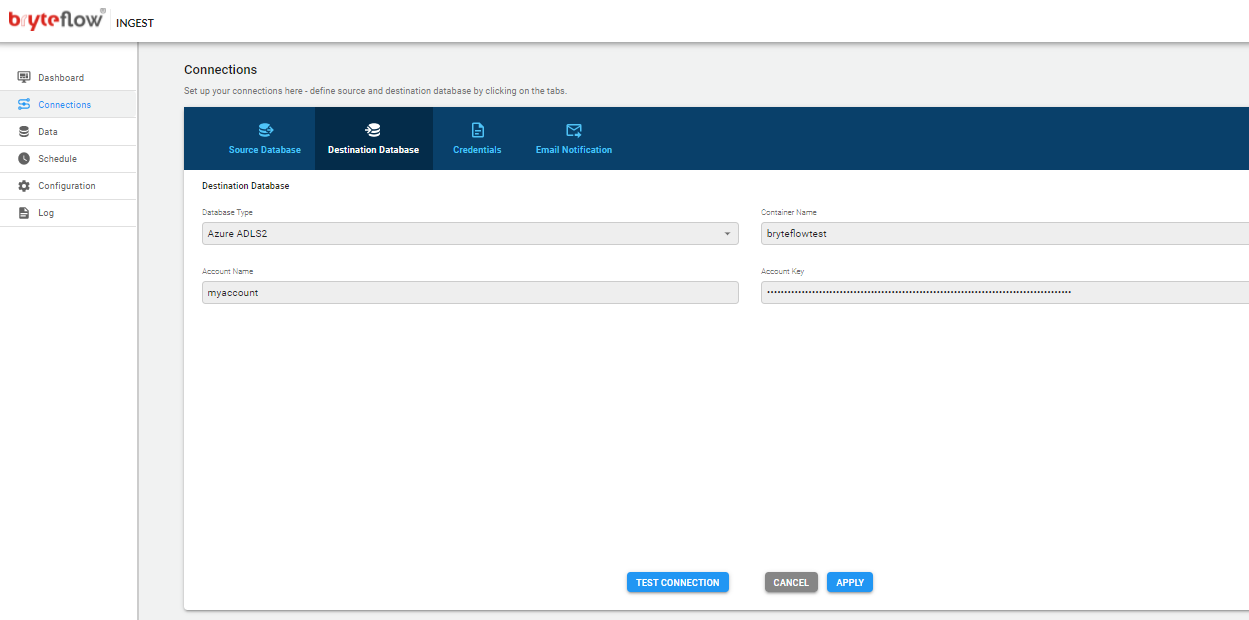

Connections

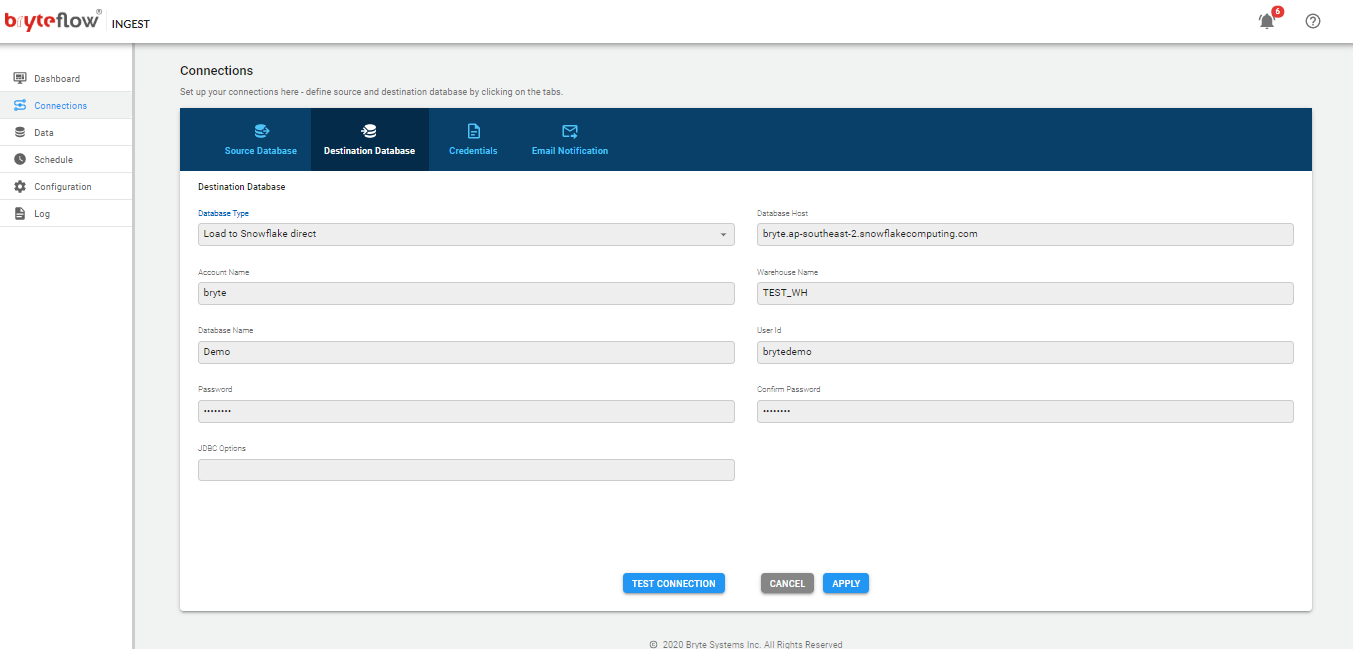

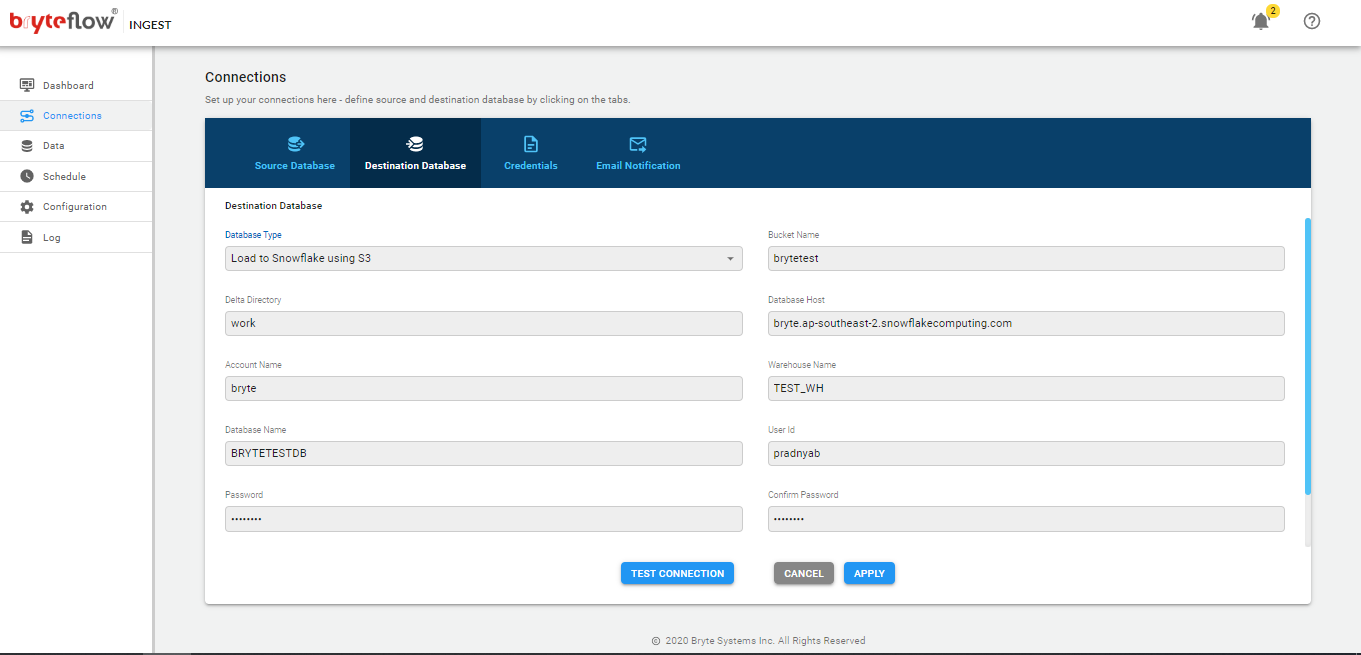

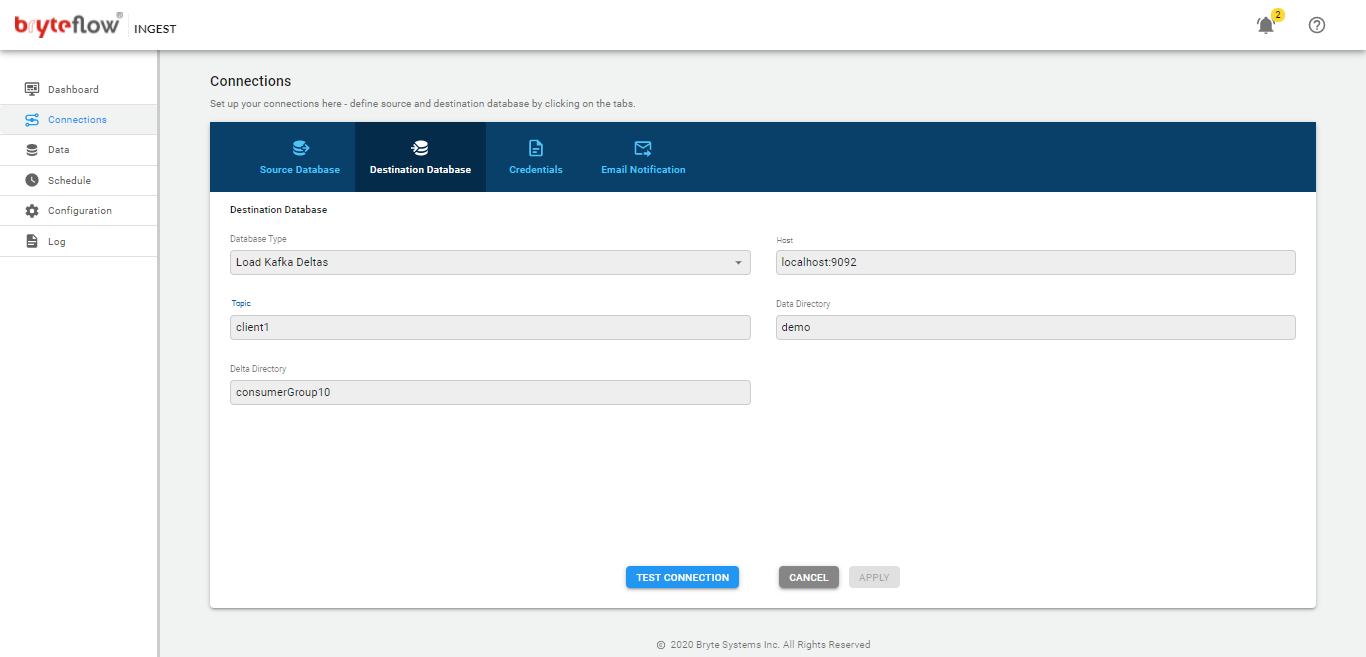

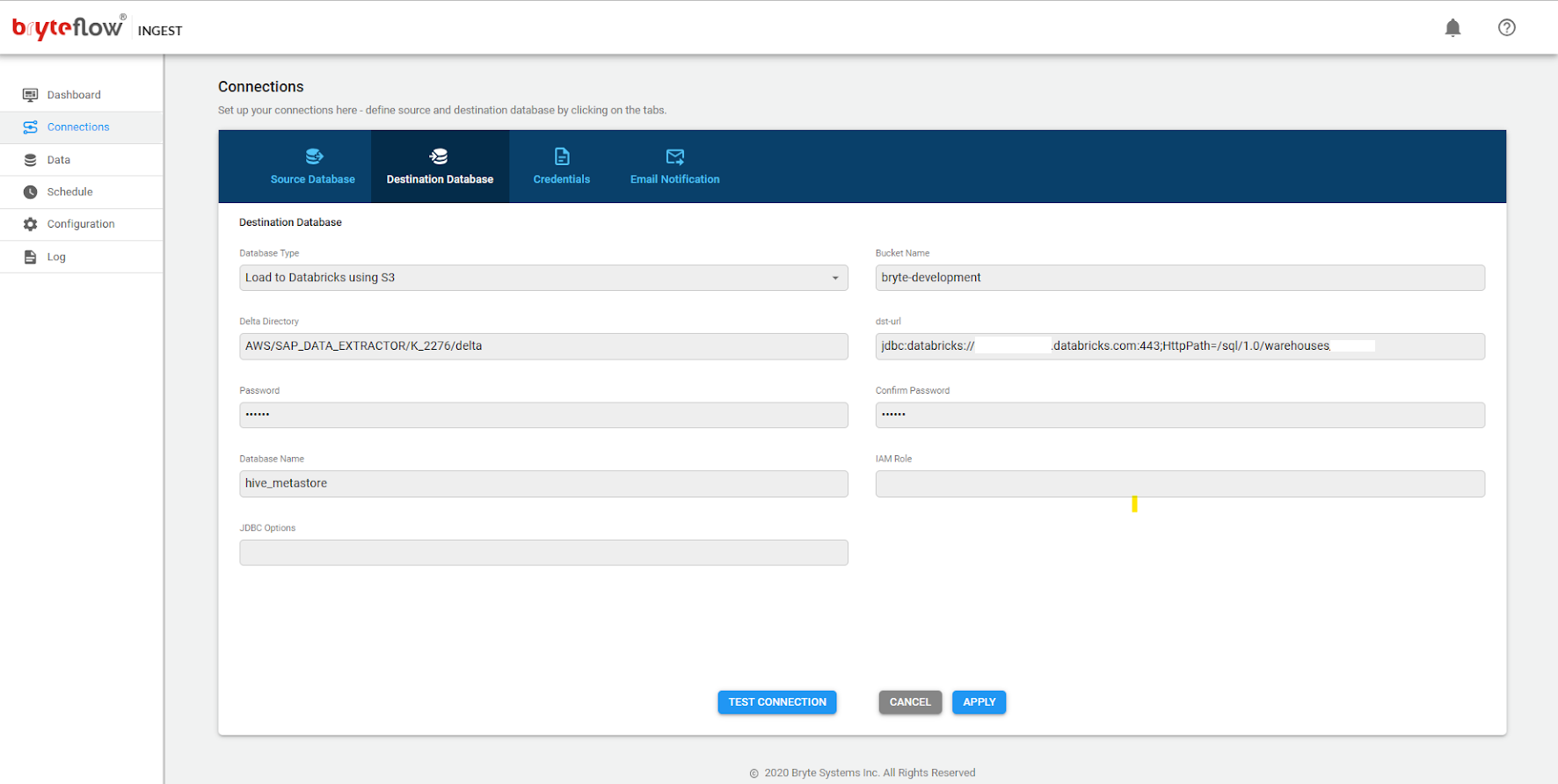

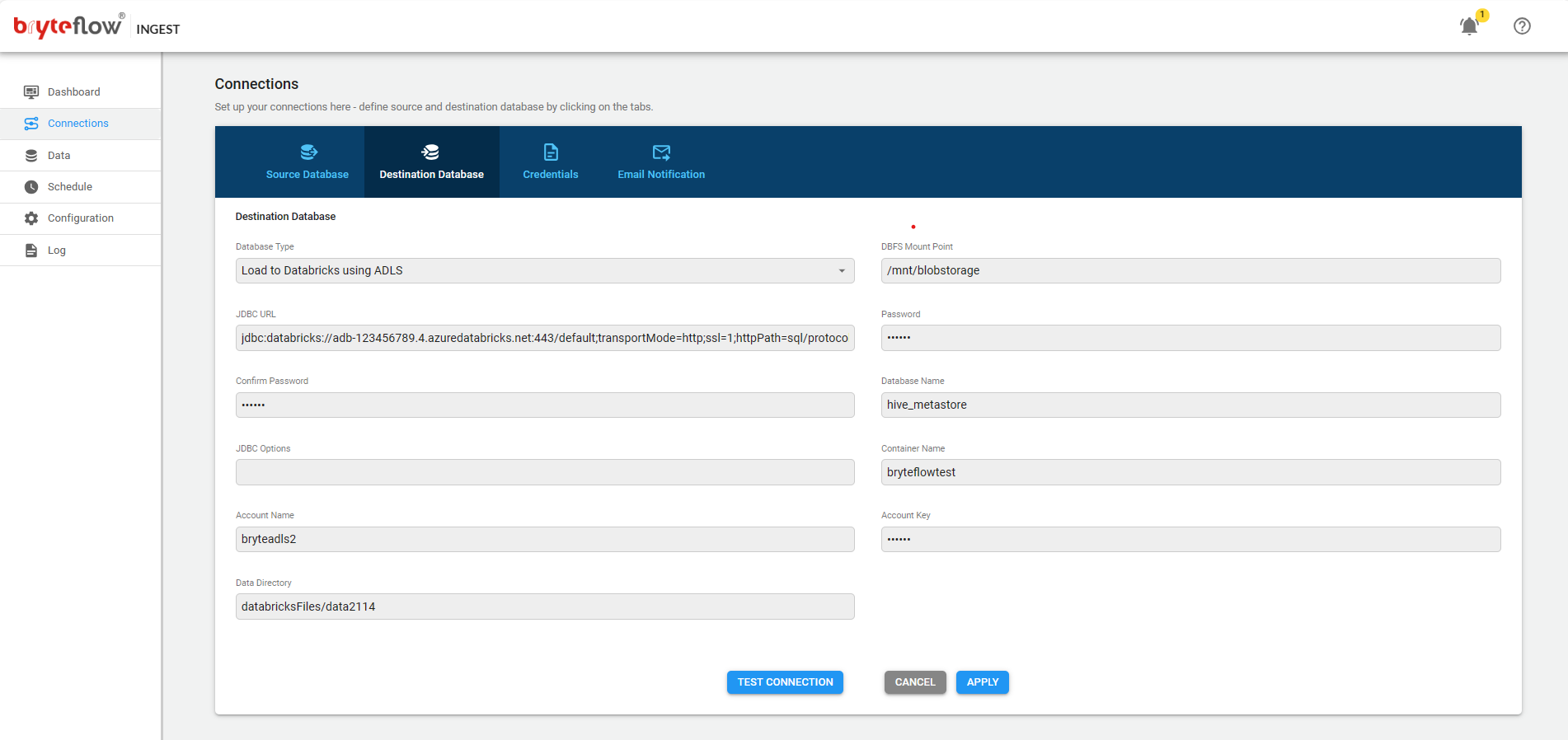

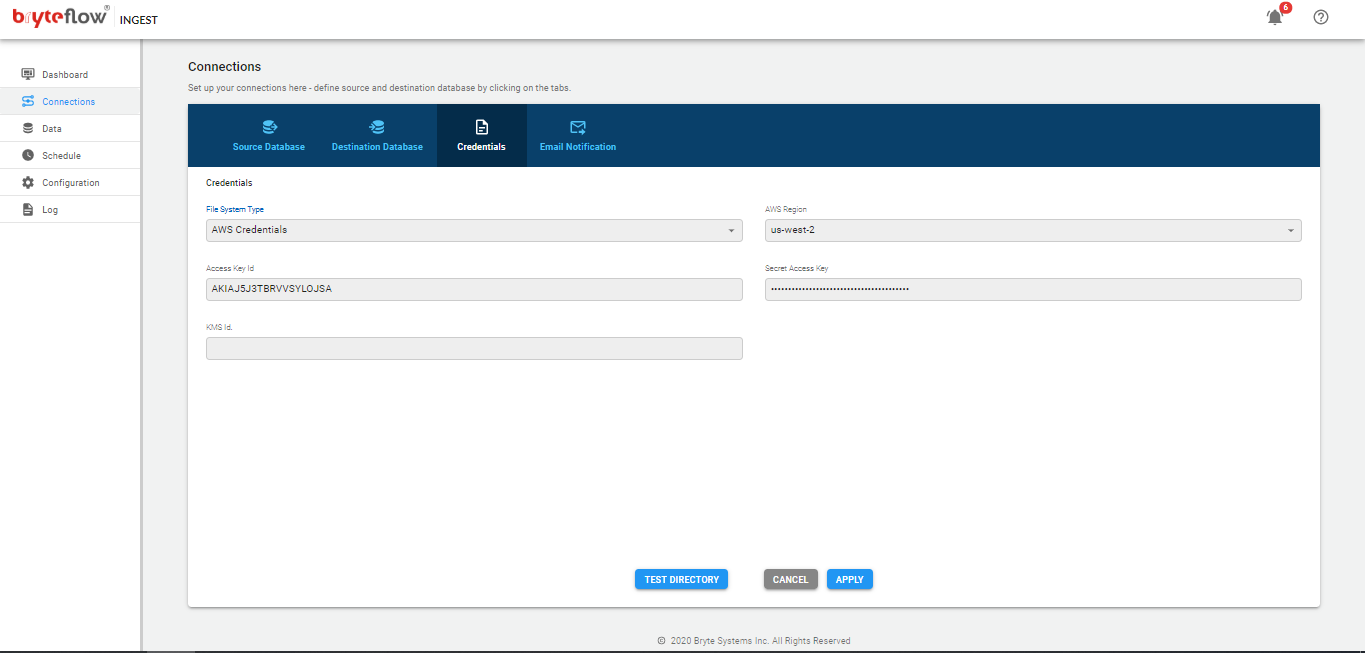

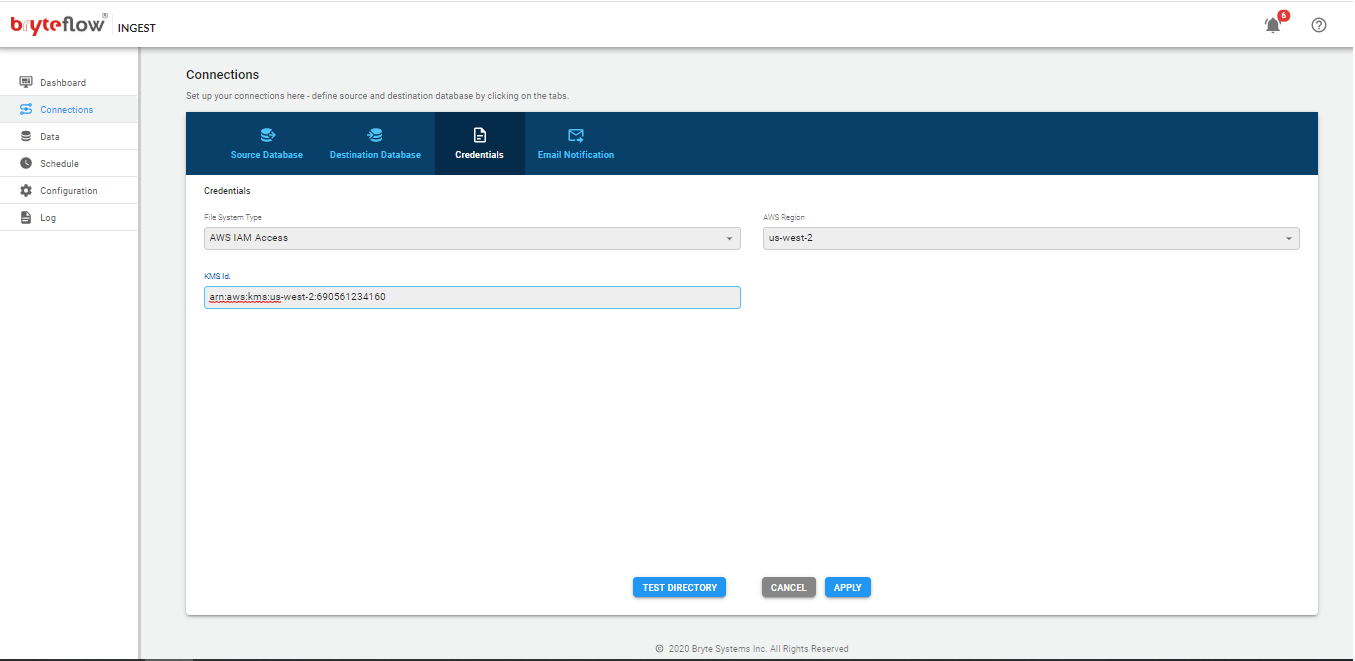

The connections tab provides access to the the following sub-tabs

- Source Database

- Destination Database

- Credentials

- Email Notification

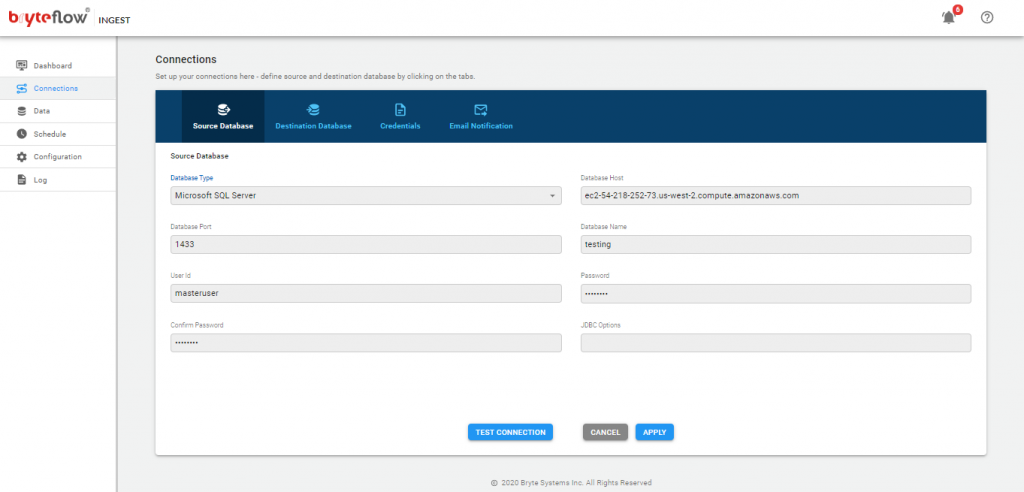

Source Database

Configuration of MS SQL Server, Oracle, SAP (MS SQL Server), SAP (Oracle), MySQL, Salesforce, PostgreSQL, S/4 HANA, SAP ECC and others as a source database.

MS SQL Server DB

- In the Database Type select “Microsoft SQL Server Change Tracking” from the drop-down list.

- In the Database Host field please enter the IP address or hostname of the database server

- In the Database Port field please enter the port number on which the database server is listening on. The default port for MS SQL Server is 1433

- In the Database Name field please enter the name of your database e.g. BryteMSSQL

- Enter a valid MS SQL Server database user Id that will be used with BryteFlow Ingest. If a Windows user is required, please contact BryteFlow support info@bryteflow.com to understand how to configure this

- Enter Password; then confirm it by re-entering in Confirm Password

- Please note, passwords are encrypted within BryteFlow Ingest

- JDBC options are optional, can be used in order to extend the JDBC URL used to access the databases.

- Click on the ‘Test Connection’ button to test connectivity

- Click on the ‘Apply’ button to confirm and save the details

Available source connectors for Oracle database

- Oracle Logminer: Available for all versions of Oracle database. Extracts changed data from Oracle database archived logs only.

- Oracle Logminer (Pluggable DB): Available for all versions of Oracle Pluggable databases. Extracts changed data from Oracle database archived logs only.

- Oracle Remote Logminer: Available for all versions of Oracle database. Extracts changed data from a remote Oracle database for archived logs only.

- Oracle Remote Logminer (Pluggable DB): Available for all versions of Oracle Pluggable databases. Extracts changed data from a remote Oracle database for archived logs only.

- Oracle Continuous Logminer: Available for Oracle database versions 18c and below. Extracts changed data from Oracle database Redo logs as well as Archived logs.

- Oracle Continuous Logminer (Pluggable DB): Available for Oracle Pluggable database versions 18c and below. Extracts changed data from Oracle database Redo logs as well as Archived logs.

- Oracle 19c Continuous Logminer: Available for Oracle 19c database. Extracts changed data from Oracle database Redo logs as well as Archived logs.

- Oracle 19c Continuous Logminer (Pluggable DB): Available for Oracle 19c Pluggable database. Extracts changed data from Oracle database Redo logs as well as Archived logs.

- Oracle Fast Logminer : Available for all versions of Oracle database. Allows multi-threaded extraction of changed data from Oracle database archived logs only.

- Oracle RAC : Available for all Oracle database versions. Extracts changed data from Oracle RAC archive logs, it uses Oracle logmining.

- Oracle RAC (Pluggable DB): Available for all Oracle Pluggable database versions. Extracts changed data from Oracle RAC archive logs, it uses Oracle logmining.

- Oracle (Full Extracts): Available for all versions of Oracle database. It performs ‘Full Refresh’ of data in every delta load.

- Oracle (Timestamps): Available for all versions of Oracle database. It gets incremental data based on the timestamp columns.

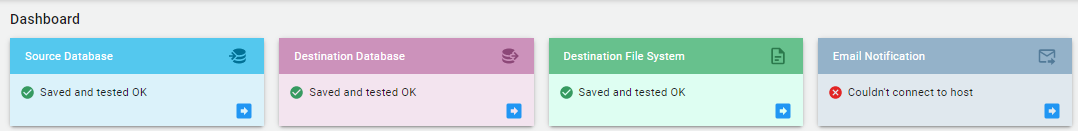

Oracle DB

- In the database type select the preferred connector from the drop-down list.

- In the database host field please enter the IP address or hostname of the database server.

- In the Database Port field please enter the port number on which the database server is listening on. The default port for Oracle is 1521

- In the database name field please enter Oracle SID

- Enter a valid Oracle database user id that will be used with Bryteflow Ingest.

- Enter Password; then confirm it by re-entering in Confirm Password

- Please note, passwords are encrypted within BryteFlow Ingest

- JDBC options are optional, can be used in order to extend the JDBC URL used to access the databases.

- Click on the ‘Test Connection’ button to test connectivity

- Click on the ‘Apply’ button to confirm and save the details

Please note: When using SID to connect to a dedicated Oracle server instance use ‘:SID’ in the Database Name of source configuration.

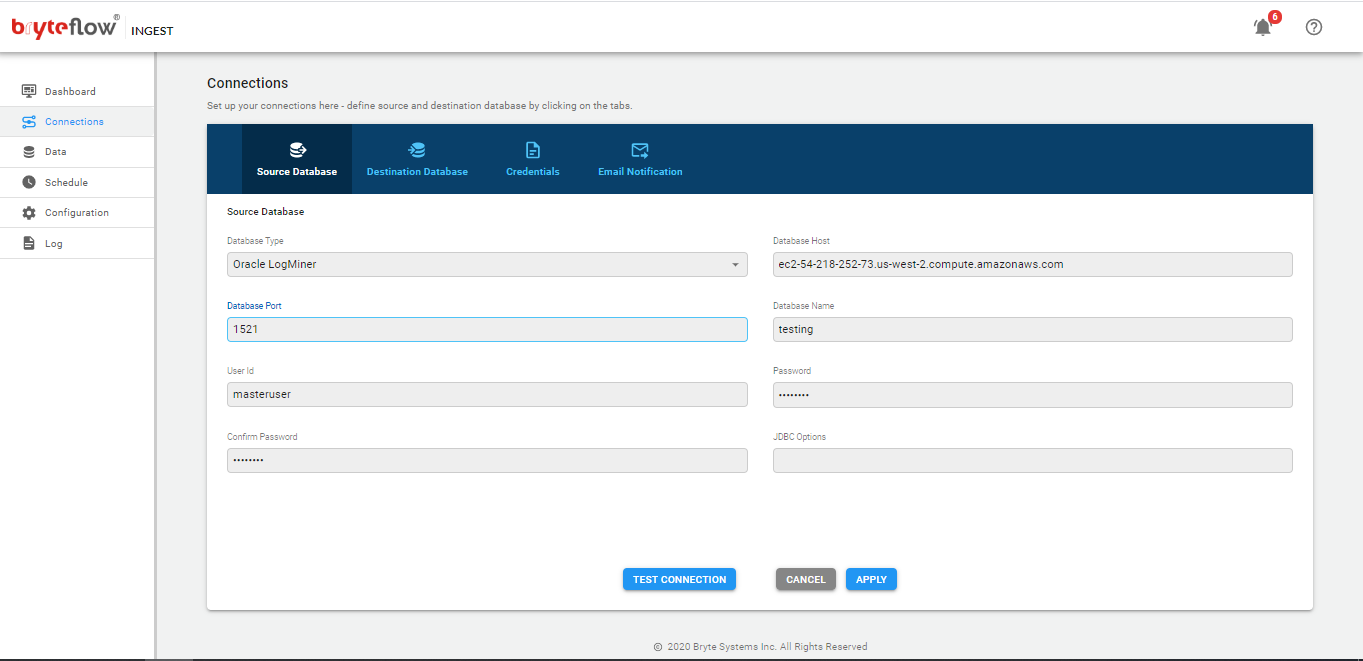

Oracle Pluggable DB:

- In the database type select appropriate option ‘Oracle Log Miner (Pluggable DB)’ , ‘Oracle Continuous LogMiner (Pluggable DB)’ from the drop-down list.

- In the database host field please enter the IP address or hostname of the PDB container.

- In the Database Port field please enter the port number on which the pluggable database is listening on. The default port for Oracle is 1521

- In the database name field please enter Oracle SID

- Enter a valid Oracle database user id for the pluggable db that will be used with Bryteflow Ingest.

- Enter Password; then confirm it by re-entering in Confirm Password

- Please note, passwords are encrypted within BryteFlow Ingest

- Enter the root container database name in ‘Remote DB Name’

- Enter root container user id in the ‘Remote User ID’

- Enter Password for the root user in ‘Remote Password’ ; then confirm it by re-entering in Confirm Password

- Please note, passwords are encrypted within BryteFlow Ingest

- JDBC options are optional, can be used in order to extend the JDBC URL used to access the databases.

- Click on the ‘Test Connection’ button to test connectivity

- Click on the ‘Apply’ button to confirm and save the details

Please note: When using SID to connect to a dedicated Oracle server instance use ‘:SID’ in the Database Name of source configuration.

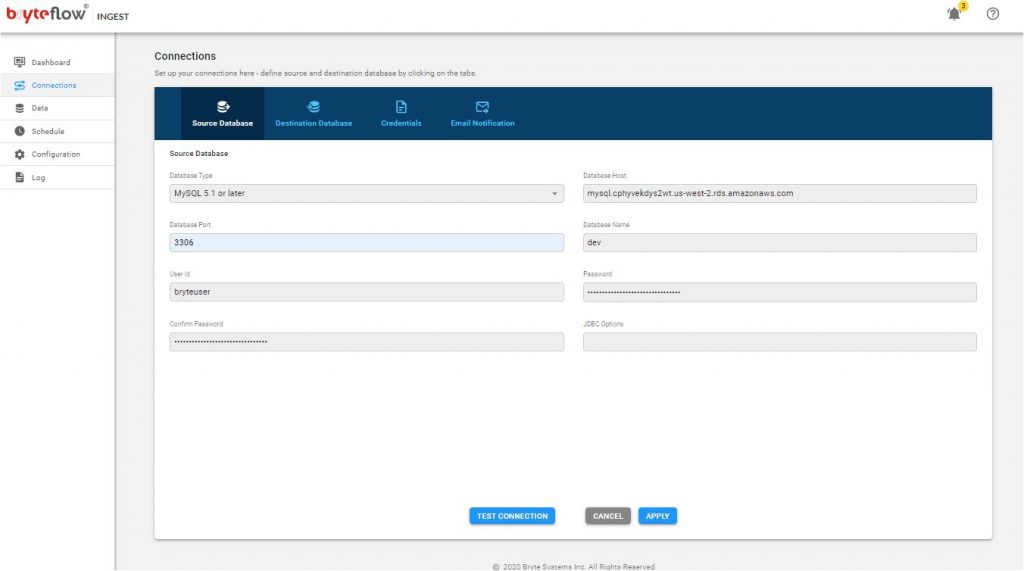

MySQL

- In the Database Type select “ MySQL 5.1 or later” from the drop-down list.

- In the Database Host field please enter the IP address or hostname of the database server.

- In the Database Port field please enter the port number on which the database server is listening on. The default port for MySQL Server is 3306.

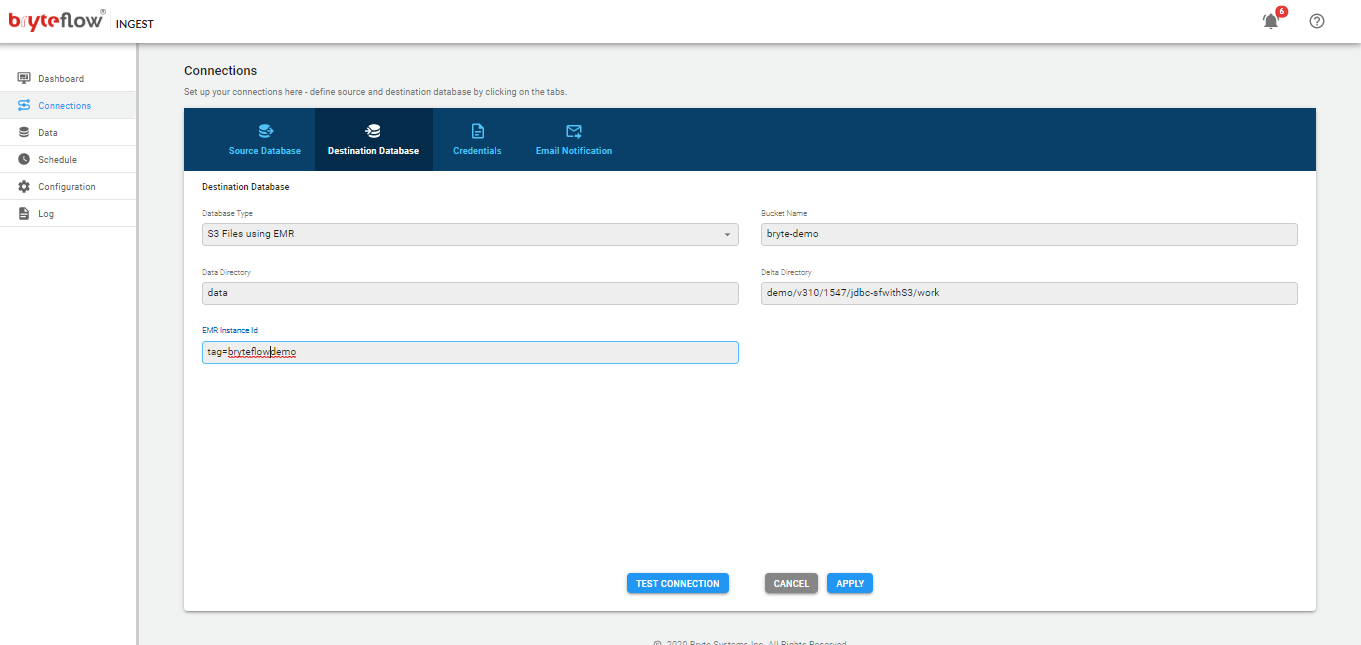

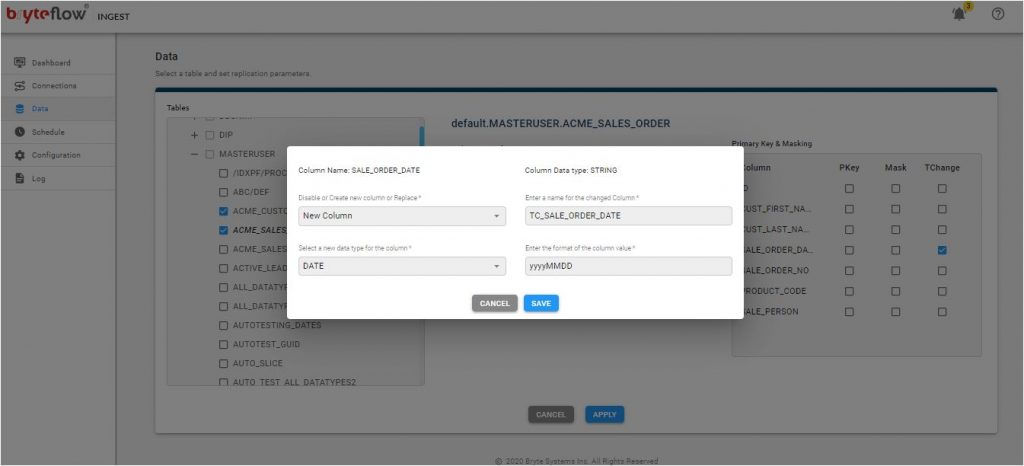

- In the Database Name field please enter the name of your database e.g. BryteMySQL.