
November 10, 2018


Executives and business leaders often ask me about data security for their Amazon S3 data lakes. Here, I’ll provide a brief, easily digestible overview of my team’s approach, designed to be read in under 5 minutes 🙂

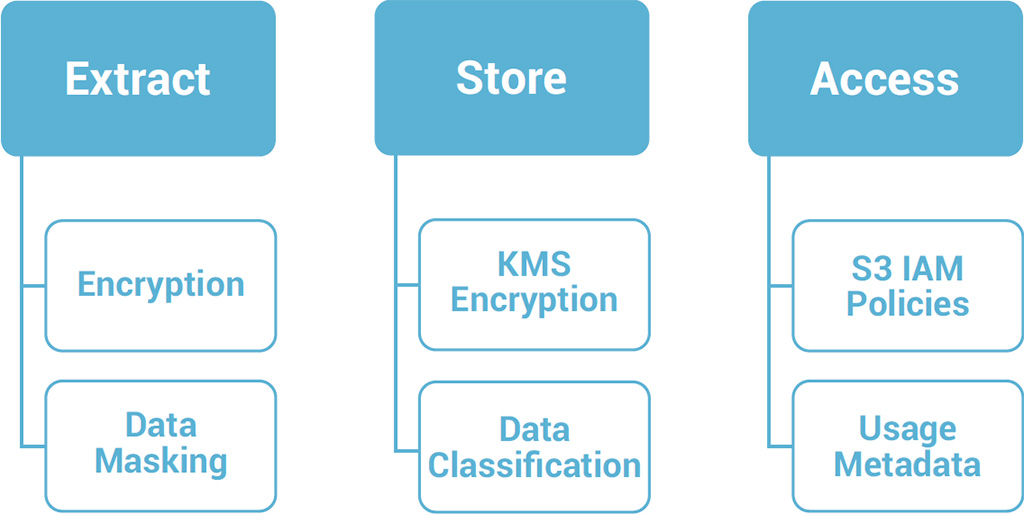

We start by looking at data across the key stages of its lifecycle. We then combine proprietary technology with native AWS components to secure the data at each stage, from extraction through to eventual access and consumption.

Extraction security measures

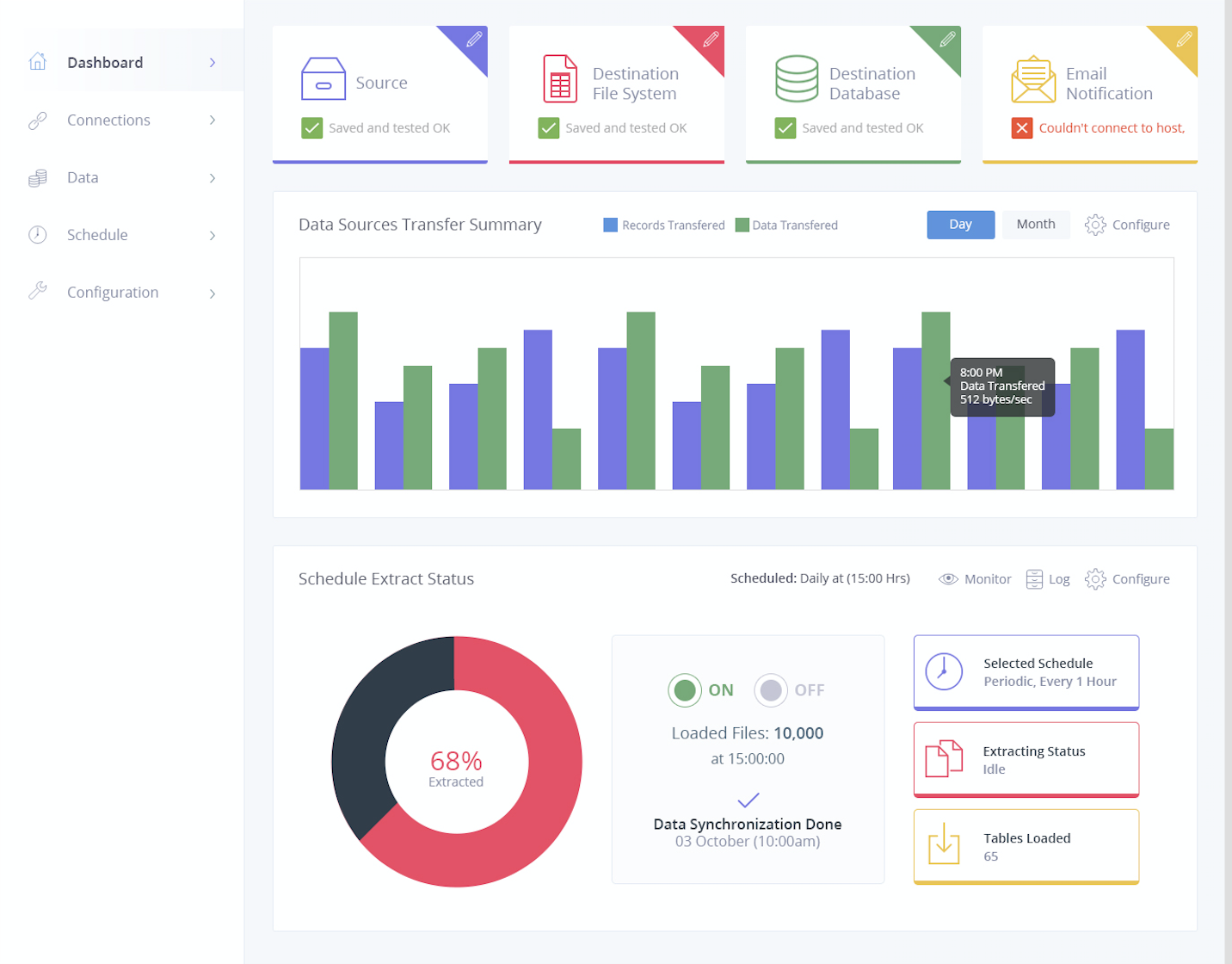

After repeated frustrations with existing tools and hand-coded solutions, the team at Bryte decided to develop BryteFlow, the only out-of-the-box application designed specifically for efficient, secure, stream-based data ingestion to Amazon S3. BryteFlow automatically encrypts the data it ingests using Amazon S3 server-side encryption (SSE), and gives users a single-click option to mask sensitive data fields. With data masking, the masked fields are never extracted from the underlying source system at all. BryteFlow’s click-based UI, shown in the screenshot, lets users configure secure data ingestion streams without writing any code.
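To illustrate why masked fields never leave the source, here is a minimal sketch of source-side masking: the extraction query simply never selects the masked columns. This is not BryteFlow’s implementation. The function `build_extraction_query` and the table and column names are hypothetical, and a production version would also validate and quote identifiers.

```python
# Hypothetical sketch: masked columns are left out of the SELECT list,
# so their values are never read from the source system.
def build_extraction_query(table: str, columns: list[str], masked: set[str]) -> str:
    """Return a SELECT statement listing only the unmasked columns of `table`."""
    selected = [c for c in columns if c not in masked]
    if not selected:
        raise ValueError("every column is masked; nothing to extract")
    return f"SELECT {', '.join(selected)} FROM {table}"


if __name__ == "__main__":
    query = build_extraction_query(
        "customers",  # hypothetical source table
        ["customer_id", "name", "tax_file_number", "region"],
        masked={"tax_file_number"},  # hypothetical sensitive field
    )
    print(query)  # SELECT customer_id, name, region FROM customers
```

Filtering at the query level, rather than after extraction, means the sensitive values never travel over the network or land in S3.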

Data storage security measures

BryteFlow automatically integrates AWS Key Management Service (AWS KMS) encryption into data ingestion workflows. AWS KMS is a fully managed service that lets clients create and control the keys used to encrypt and decrypt their data.
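For readers who want to see what SSE-KMS looks like at the S3 level, the sketch below builds a default bucket encryption configuration in the shape accepted by the S3 `PutBucketEncryption` API. It is an illustration, not BryteFlow’s internal mechanism. The key ARN and bucket name are placeholders, and the boto3 call is shown only as a comment because it needs AWS credentials.

```python
import json


def kms_default_encryption(key_arn: str) -> dict:
    """Build a ServerSideEncryptionConfiguration making SSE-KMS the bucket default."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": key_arn,
                },
                # S3 Bucket Keys reduce the number of requests S3 makes to AWS KMS.
                "BucketKeyEnabled": True,
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder ARN; substitute a key created in AWS KMS.
    config = kms_default_encryption(
        "arn:aws:kms:ap-southeast-2:111122223333:key/EXAMPLE"
    )
    print(json.dumps(config, indent=2))
    # With boto3 installed and credentials configured, this could be applied with:
    # boto3.client("s3").put_bucket_encryption(
    #     Bucket="example-data-lake", ServerSideEncryptionConfiguration=config)
```

Setting encryption as the bucket default means objects written without explicit encryption headers are still encrypted with the customer-managed key.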

Once raw data has been ingested and secured, our team works with clients to split or filter the raw data into separate Amazon S3 folders based on security groupings and classifications.
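A minimal sketch of the row-level split, assuming each record can be assigned a security classification by a caller-supplied function. The `PREFIXES` mapping, the folder names, and `split_by_classification` are all hypothetical. Unknown classifications fall back to the most restrictive folder, so a misclassified record is never exposed more widely than intended.

```python
from collections import defaultdict

# Hypothetical mapping from security classification to S3 folder (prefix).
PREFIXES = {
    "public": "curated/public/",
    "internal": "curated/internal/",
    "restricted": "curated/restricted/",
}


def split_by_classification(records, classify):
    """Group records under the S3 prefix for their security classification.

    `classify` returns a key of PREFIXES; anything unrecognised is routed
    to the restricted folder (default-deny).
    """
    out = defaultdict(list)
    for record in records:
        prefix = PREFIXES.get(classify(record), PREFIXES["restricted"])
        out[prefix].append(record)
    return dict(out)


if __name__ == "__main__":
    records = [
        {"id": 1, "kind": "catalog"},
        {"id": 2, "kind": "payroll"},
        {"id": 3, "kind": "roster"},
    ]
    rules = {"catalog": "public", "roster": "internal"}  # hypothetical rules
    groups = split_by_classification(records, lambda r: rules.get(r["kind"], "unknown"))
    for prefix, rows in groups.items():
        print(prefix, [r["id"] for r in rows])
```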

Each security classification is configured as a customizable job, written in standard SQL against the data in S3, using BryteFlow’s data management workbench.

In the following example, raw data from 6 datasets in S3 is filtered using the ‘Model Tasks Data Asset’ business logic.

The job writes a new file to a separate Amazon S3 folder that is visible only to a specific class of users with the appropriate privileges.

That user group will not have access to any other fields from the 6 underlying datasets.
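The column-level restriction can be sketched with Python’s built-in `sqlite3` standing in for the SQL engine that runs over S3. Two hypothetical tables stand in for the six datasets. The table names, column names, and query are invented for illustration and are not the actual ‘Model Tasks Data Asset’ logic. The point is that the job’s SELECT list defines exactly which fields reach the output file.

```python
import sqlite3


def load_sample(conn):
    """Create two small hypothetical raw datasets."""
    conn.executescript("""
        CREATE TABLE tasks (task_id INTEGER, model_id INTEGER, status TEXT, owner_email TEXT);
        CREATE TABLE models (model_id INTEGER, model_name TEXT, cost_centre TEXT);
        INSERT INTO tasks VALUES (1, 10, 'done', 'a@example.com'), (2, 11, 'open', 'b@example.com');
        INSERT INTO models VALUES (10, 'forecast', 'CC-01'), (11, 'churn', 'CC-02');
    """)


# Only these fields are exposed; owner_email and cost_centre never reach the output.
ASSET_SQL = """
    SELECT t.task_id AS task_id, m.model_name AS model_name, t.status AS status
    FROM tasks AS t
    JOIN models AS m ON m.model_id = t.model_id
    ORDER BY t.task_id
"""


def run_asset_job(conn):
    """Run the job and return (column names, rows) for the output file."""
    cur = conn.execute(ASSET_SQL)
    return [d[0] for d in cur.description], cur.fetchall()


conn = sqlite3.connect(":memory:")
load_sample(conn)
columns, rows = run_asset_job(conn)
print(columns)  # ['task_id', 'model_name', 'status']
print(rows)     # [(1, 'forecast', 'done'), (2, 'churn', 'open')]
```

In practice the result would be written to the restricted folder, and only that folder would be granted to the user group.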

Data access security measures

Access security is configured using AWS Identity and Access Management (IAM) policies at the S3 file (object) or folder (prefix) level. These policies enable granular security controls that limit which files users can access and when they can access them. Access is often federated with existing corporate directories such as Microsoft Active Directory, so users sign in with their corporate credentials and assume IAM roles that carry these policies.
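As a concrete sketch of “which files and when,” the function below builds an IAM policy document that allows listing and reading a single S3 prefix, only within a time window checked against the `aws:CurrentTime` condition key. The bucket, prefix, and dates are placeholders, and `prefix_read_policy` is a hypothetical helper. IAM denies by default, so objects outside the prefix stay inaccessible unless another policy grants them.

```python
import json


def prefix_read_policy(bucket: str, prefix: str, start: str, end: str) -> dict:
    """IAM policy allowing read access to one S3 prefix within a time window.

    `start` and `end` are ISO 8601 timestamps compared against aws:CurrentTime.
    """
    window = {
        "DateGreaterThan": {"aws:CurrentTime": start},
        "DateLessThan": {"aws:CurrentTime": end},
    }
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allow listing only the keys under the permitted folder.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}, **window},
            },
            {
                # Allow reading objects in that folder only.
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
                "Condition": window,
            },
        ],
    }


if __name__ == "__main__":
    policy = prefix_read_policy(
        "example-data-lake", "curated/model-tasks/",
        "2018-11-01T00:00:00Z", "2018-12-01T00:00:00Z",
    )
    print(json.dumps(policy, indent=2))
```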

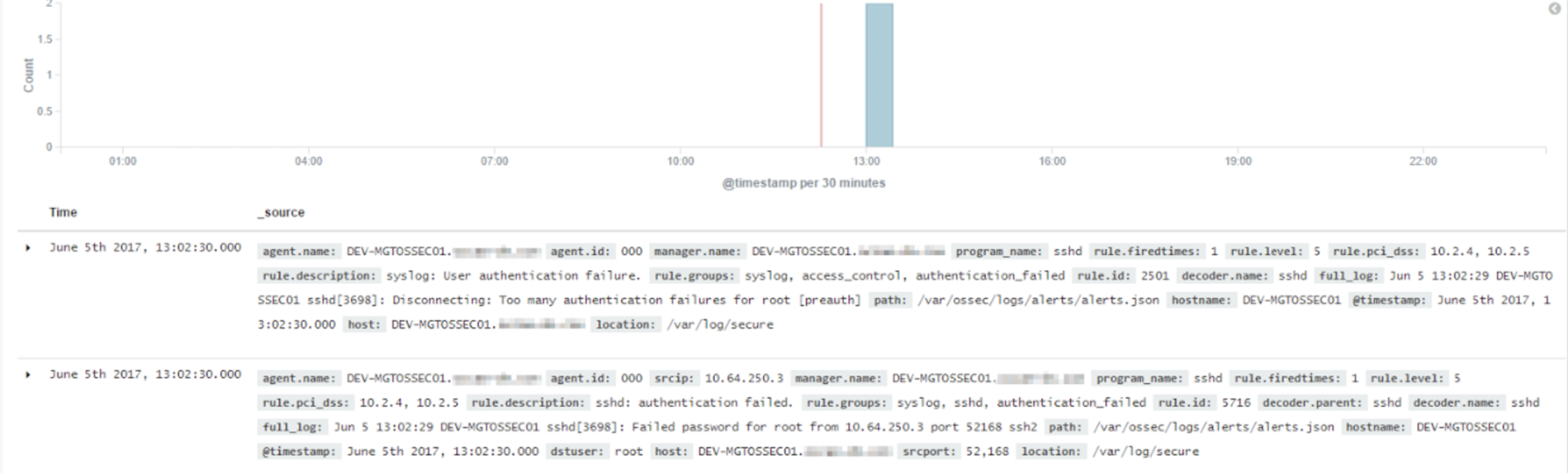

These IAM policies can be further complemented with metadata solutions built from a combination of tools such as AWS Lambda, Elasticsearch and Kibana. This gives clients real-time visibility into, and alerts on, Amazon S3 data search and access activity. In the example below, a client views the list of users that failed authentication by filtering on the agentname, agentid and ruledescription fields.
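The filter in that example can be sketched as an Elasticsearch query DSL body. The field names `agentname`, `agentid` and `ruledescription` come from the example. Several details are assumptions: the `@timestamp` field, the rule description text, and the use of `keyword` mappings for the agent fields, which exact `term` matches require. The resulting body could be sent to an index’s `_search` endpoint or used behind a Kibana visualization.

```python
import json


def failed_auth_query(agent_name=None, agent_id=None, since="now-24h"):
    """Build an Elasticsearch bool query for failed-authentication events."""
    filters = [
        # Hypothetical rule description text; adjust to the real alert wording.
        {"match_phrase": {"ruledescription": "authentication failed"}},
        # Assumes events carry an @timestamp field.
        {"range": {"@timestamp": {"gte": since}}},
    ]
    if agent_name is not None:
        filters.append({"term": {"agentname": agent_name}})
    if agent_id is not None:
        filters.append({"term": {"agentid": agent_id}})
    # Filter context: no scoring, results sorted newest first.
    return {"query": {"bool": {"filter": filters}}, "sort": [{"@timestamp": "desc"}]}


if __name__ == "__main__":
    print(json.dumps(failed_auth_query(agent_name="web-01"), indent=2))
```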